RAM usage in 0.9.0 #582

Comments

I'm also seeing high memory usage in the latest version. I have run a routinator instance for 18 months on a 1GB VM. I re-deployed it yesterday with the latest version (using the Debian package, which is now available) and bumped it to 2GB while I was there. A few hours later, it's alerting for high memory usage.

|

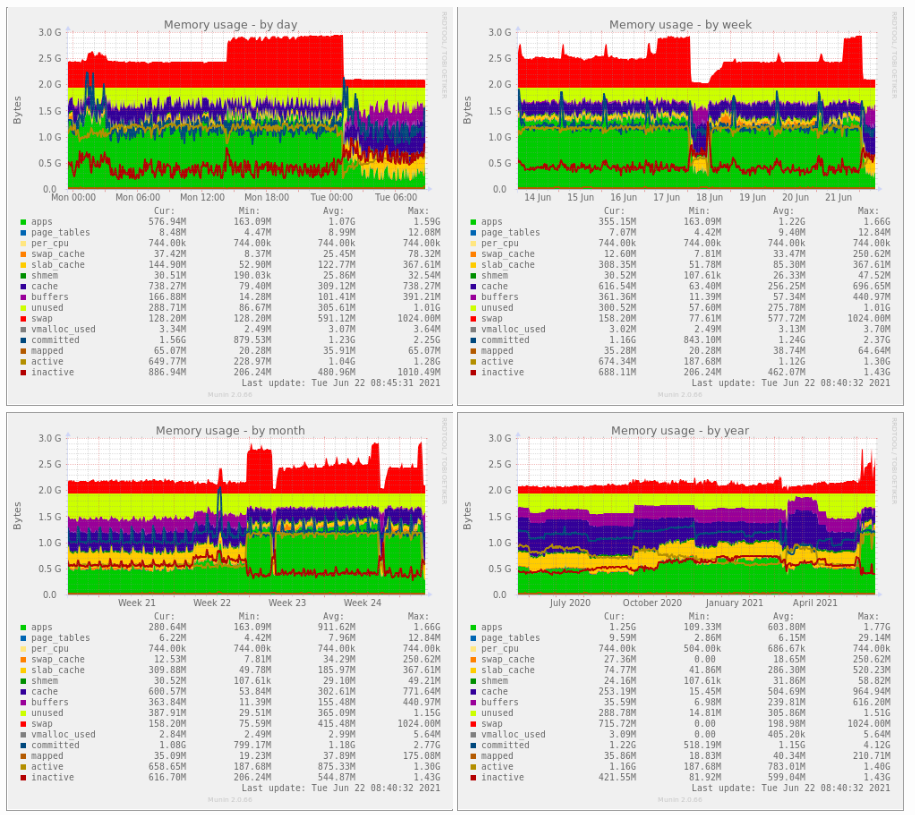

It looks like 2GB is cutting it real close. We have an instance with 2GB that is barely scraping by but seems to be surviving so far: |

I increased the RAM to 4GB and it was fine for a few days, but it is now alerting again :( That really doesn't seem right, when I've run the older version for over 18 months on a 1GB VM without it alerting.

Ian

It certainly isn’t right and the PR for a fix – #590 – is coming along nicely. We expect it to be complete some time next week and a release to follow in due time after that.

After running 0.10.0-dev for about a week, memory use consistently floats around 450MB, with the High Water Mark at 525MB.
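For anyone who wants to compare their own numbers with the figures above, here is a minimal sketch of reading the current and peak resident memory of a process on Linux. It assumes the standard `/proc/<pid>/status` file, where `VmRSS` is the current resident set size and `VmHWM` is the peak ("high water mark"). The function names are made up for illustration, and this is not necessarily how the figures in this thread were measured.

```python
from pathlib import Path


def parse_memory_kib(status_text: str) -> dict[str, int]:
    """Extract VmRSS and VmHWM (in KiB) from the text of /proc/<pid>/status."""
    wanted = {"VmRSS", "VmHWM"}
    result: dict[str, int] = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in wanted:
            # Lines look like "VmHWM:    537600 kB"; the first field is the value.
            result[key] = int(rest.split()[0])
    return result


def read_memory_kib(pid: int) -> dict[str, int]:
    """Read current and peak resident memory for a running process (Linux only)."""
    return parse_memory_kib(Path(f"/proc/{pid}/status").read_text())
```

Calling `read_memory_kib(pid)` with the PID of the running daemon returns both values in KiB, which can be logged periodically to see whether usage keeps growing or levels off.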

Excellent! Will there be a release coming up any time soon that incorporates those changes?

I had to restart it ~48 hours ago: after a week or so, our monitoring started alerting because the VM was at 90%+ memory usage, even though I'd increased it to 4GB RAM. So that's a huge difference from your stats @AlexanderBand, even after only 2 days!

A release candidate should be out next week.

The trade-off between high memory use and disk I/O is real. There are scenarios where a bit more RAM and almost zero disk I/O is good. On the other hand, a low RAM footprint is good in large virtualization environments that have no issues with disk speed and durability. I couldn't read all the issues related to this, so I don't know whether this is a duplicate suggestion, but:

I believe @ties has a setup that keeps the repository data on a tmpfs file system which I feel does the trick of not using disks at all. In ‘traditional’ deployments, you probably want to persist the local copy of the repository between restarts, so using the disk and letting the kernel optimize access patterns via its buffers seems a good strategy to me.

I have an operational issue with the RAM usage of the latest release (0.9.0): it jumped from some megabytes to more than 1GB. It’s OOM-killed by the kernel every now and then. As a quick fix, I’m back on 0.8.3.

The upgrade was done at the end of week 22.

Originally posted by @alarig in #333 (comment)
