If you use the code of this repo and you find this project useful,

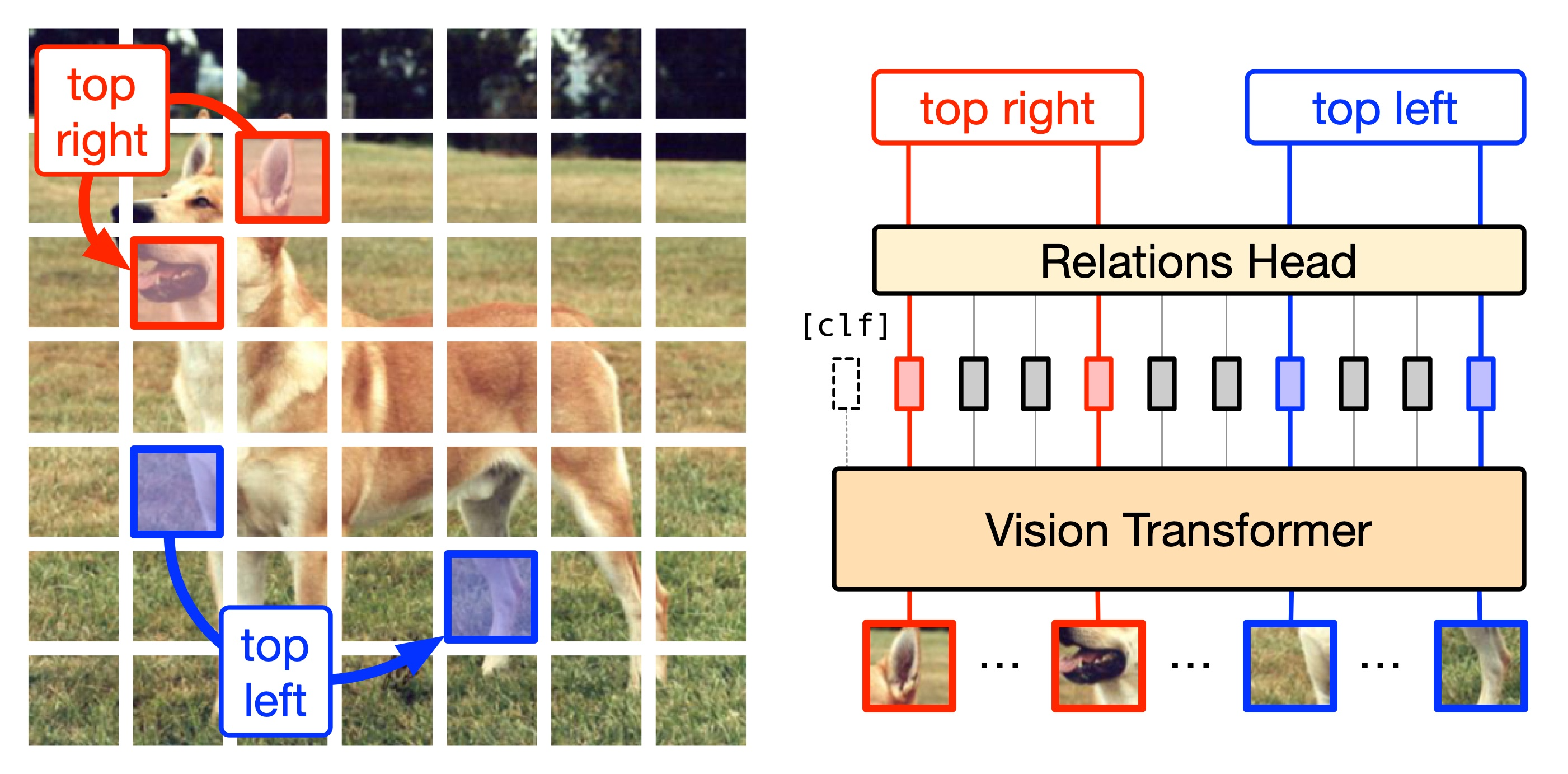

please consider to give a star ⭐!This repository hosts the official code related to the paper "Where are my Neighbors? Exploiting Patches Relations in Self-Supervised Vision Transformer", Guglielmo Camporese, Elena Izzo, Lamberto Ballan - BMVC 2022. [arXiv] [video]

```bibtex
@inproceedings{Camporese2022WhereAM,
  title = {Where are my Neighbors? Exploiting Patches Relations in Self-Supervised Vision Transformer},
  author = {Guglielmo Camporese and Elena Izzo and Lamberto Ballan},
  booktitle = {British Machine Vision Conference (BMVC)},
  year = {2022}
}
```

- [22/10/13] Our paper has been accepted to BMVC 2022 (oral spotlight)!

- [22/06/02] Our paper is on arXiv! Here you can find the link.

- [22/05/24] Our paper has been selected for a spotlight oral presentation at the CVPR 2022 "T4V: Transformers for Vision" workshop!

- [22/05/23] Our paper just got accepted at the CVPR 2022 "T4V: Transformers for Vision" workshop!

Install

```bash
# clone the repo
git clone https://github.com/guglielmocamporese/relvit.git

# install and activate the conda env
cd relvit
conda env create -f env.yml
conda activate relvit
```

All the commands are based on the training scripts in the `scripts` folder.
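Since every script assumes the environment created from `env.yml`, it can help to check that it is actually active before launching a long run. The function below is a minimal sketch that is not part of the repo; the name `require_relvit_env` is hypothetical. It relies on `CONDA_DEFAULT_ENV`, which `conda activate` sets to the environment name for named environments.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the repo's scripts):
# fail early if the expected conda env is not the active one.
# Relies on CONDA_DEFAULT_ENV, set by `conda activate <name>`.
require_relvit_env() {
  local expected="${1:-relvit}"
  if [ "${CONDA_DEFAULT_ENV:-}" != "$expected" ]; then
    echo "error: conda env '$expected' is not active (run: conda activate $expected)" >&2
    return 1
  fi
}
```

Placing `require_relvit_env || exit 1` at the top of a personal launcher script stops it immediately instead of failing later on missing packages.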

Self-Supervised Pre-Training + Supervised Finetuning

Here you can find the commands for:

- Running the self-supervised learning pre-training

```bash
# SSL upstream pre-training
bash scripts/upstream.sh \
  --exp_id upstream_cifar10 --backbone vit \
  --model_size small --num_gpus 1 --epochs 100 --dataset cifar10 \
  --weight_decay 0.1 --drop_path_rate 0.1 --dropout 0.0
```

- Running the supervised finetuning using the checkpoint obtained in the previous step.

After running the upstream pre-training, the directory `tmp/relvit` will contain the checkpoint file `checkpoints/best.ckpt`, which has to be passed to the finetuning script through the `--model_checkpoint` argument.
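Because the exact sub-directory of `best.ckpt` below `tmp/relvit` may vary between runs, a small search helper avoids copying the path by hand. This is a sketch, not a repo utility: the function name `find_best_ckpt` is hypothetical, and it assumes only that the file ends in `checkpoints/best.ckpt` somewhere under the given root. When several runs exist, it returns the most recently modified checkpoint.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the newest .../checkpoints/best.ckpt under a root
# directory (default: tmp/relvit). Prints nothing if no checkpoint is found.
find_best_ckpt() {
  local root="${1:-tmp/relvit}"
  # `ls -t` lists the matches newest first; errors (e.g. a missing root) are silenced
  find "$root" -type f -path '*/checkpoints/best.ckpt' -exec ls -t {} + 2>/dev/null | head -n 1
}
```

For example, `--model_checkpoint "$(find_best_ckpt tmp/relvit)"` can stand in for the `checkpoint_path` placeholder, after checking that the helper printed a non-empty path.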

```bash
# supervised downstream
# (replace checkpoint_path with the path to the upstream best.ckpt)
bash scripts/downstream.sh \
  --exp_id downstream_cifar10 --backbone vit --num_gpus 1 \
  --epochs 100 --dataset cifar10 --weight_decay 0.1 --drop_path_rate 0.1 \
  --model_size small --dropout 0.0 --model_checkpoint checkpoint_path
```

Downstream-Only Experiment

Here you can find the commands for training the ViT, Swin, and T2T models on the downstream-only supervised task.
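The ViT and Swin commands pass `--patch_trans colJitter:0.8-grayScale:0.2`. Judging only from this value, the flag appears to take `-`-separated `name:probability` entries (0.8 and 0.2 match the usual color-jitter and grayscale probabilities); this reading is an assumption, so check the repo's argument parsing for the accepted transform names. The hypothetical `split_patch_trans` function below just makes that assumed format explicit.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not the repo's parser): split a --patch_trans value such as
# "colJitter:0.8-grayScale:0.2" into one "name probability" line per transform.
# Assumed format: entries separated by "-", each entry written as "name:probability".
split_patch_trans() {
  local spec="$1" entry
  local -a entries
  IFS='-' read -r -a entries <<< "$spec"
  for entry in "${entries[@]}"; do
    # ${entry%%:*} keeps the text before the first ":", ${entry#*:} the text after it
    printf '%s %s\n' "${entry%%:*}" "${entry#*:}"
  done
}
```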

```bash
# ViT downstream-only
bash scripts/downstream-only.sh \
  --seed 2022 --exp_id downstream-only_vit_cifar10 \
  --backbone vit --dataset cifar10 --weight_decay 0.1 --drop_path_rate 0.1 \
  --model_size small --dropout 0.0 --patch_trans colJitter:0.8-grayScale:0.2

# Swin downstream-only
bash scripts/downstream-only.sh \
  --seed 2022 --exp_id downstream-only_swin_cifar10 \
  --backbone swin --dataset cifar10_224 --batch_size 64 --weight_decay 0.1 \
  --drop_path_rate 0.1 --model_size tiny --dropout 0.0 \
  --patch_trans colJitter:0.8-grayScale:0.2

# T2T downstream-only
bash scripts/downstream-only.sh \
  --seed 2022 --exp_id downstream-only_t2t_cifar10 \
  --backbone t2t_vit --dataset cifar10_224 --batch_size 64 --weight_decay 0.1 \
  --drop_path_rate 0.1 --model_size 14 --dropout 0.0
```

Mega-Patches Ablation

Here you can find the experiments with the mega-patches described in the paper. As in the previous section, there are commands for the SSL upstream pre-training with the mega-patches and for the subsequent supervised finetuning.

```bash
# SSL upstream pre-training with 6x6 megapatches
bash scripts/upstream_MEGApatch.sh \
  --exp_id upstream_megapatch_imagenet100 \
  --backbone vit --model_size small --dataset imagenet100 \
  --batch_size 256 --weight_decay 0.1 --drop_path_rate 0.1 \
  --dropout 0.0 --side_megapatches 6
```

After running the upstream pre-training, the directory `tmp/relvit` will contain the checkpoint file `checkpoints/best.ckpt`, which has to be passed to the finetuning script through the `--model_checkpoint` argument.

```bash
# downstream finetuning
# (replace checkpoint_path with the path to the upstream best.ckpt)
bash scripts/downstream.sh \
  --exp_id downstream_imagenet100 --backbone vit \
  --dataset imagenet100 --weight_decay 0.1 --drop_path_rate 0.1 \
  --model_size small --dropout 0.0 --model_checkpoint checkpoint_path
```

Supported Datasets

Here you can find the list of all the datasets supported by the repo, which can be selected with the `--dataset` input argument in the previous commands.

- CIFAR10

- CIFAR100

- Flower102

- SVHN

- Tiny ImageNet

- ImageNet100

Validation Scripts

```bash
# validation on the upstream task
bash scripts/upstream.sh --dataset cifar10 --mode validation

# validation on the downstream task
bash scripts/downstream.sh --dataset cifar10 --mode validation
```