How to cite uPheno¶

Papers¶

uPheno 2¶

- Matentzoglu N, Osumi-Sutherland D, Balhoff JP, Bello S, Bradford Y, Carmody L, Grove C, Harris MA, Harris N, Köhler S, McMurry J, Mungall C, Munoz-Torres M, Pilgrim C, Robb S, Robinson PN, Segerdell E, Vasilevsky N, Haendel M. uPheno 2: Framework for standardised representation of phenotypes across species. 2019 Apr 8. http://dx.doi.org/10.7490/f1000research.1116540.1

Original uPheno¶

- Sebastian Köhler, Sandra C Doelken, Barbara J Ruef, Sebastian Bauer, Nicole Washington, Monte Westerfield, George Gkoutos, Paul Schofield, Damian Smedley, Suzanna E Lewis, Peter N Robinson, Christopher J Mungall (2013) Construction and accessibility of a cross-species phenotype ontology along with gene annotations for biomedical research. F1000Research

Entity-Quality definitions and phenotype modelling¶

- C J Mungall, Georgios Gkoutos, Cynthia Smith, Melissa Haendel, Suzanna Lewis, Michael Ashburner (2010) Integrating phenotype ontologies across multiple species. Genome Biology 11 (1)

How to add the uPheno direct relation extension¶

EQ definitions are powerful tools for reconciling phenotypes across species and driving reasoning. However, they are of limited use to many "normal" users of our ontologies.

We have developed a small workflow extension to take care of that.

- As usual, please follow the steps to install the custom uPheno Makefile extension first.
- Now add a new component to your ont-odk.yaml file (e.g. src/ontology/mp-odk.yaml):
- You can now choose whether to add the component to your edit file as well. To do that, follow the instructions on adding an import (i.e. adding the component to the edit file and catalog file). The IRI of the component is http://purl.obolibrary.org/obo/YOURONTOLOGY/components/eq-relations.owl. For example, for MP, the IRI is http://purl.obolibrary.org/obo/mp/components/eq-relations.owl.
- Now we can generate the component:

This command will be run automatically during a release (prepare_release).

Add custom uPheno Makefile¶

The custom uPheno Makefile is an extension to your normal custom Makefile (for example, hp.Makefile, mp.Makefile, etc.), located in the src/ontology directory of your ODK setup.

To install it:

(1) Open your normal custom Makefile and add a line at the very end:

(2) Now download the custom Makefile:

https://raw.githubusercontent.com/obophenotype/upheno/master/src/ontology/config/pheno.Makefile

and save it in your src/ontology directory.

Feel free to use, for example, wget:

cd src/ontology
wget https://raw.githubusercontent.com/obophenotype/upheno/master/src/ontology/config/pheno.Makefile -O pheno.Makefile

From now on you can simply run

whenever you wish to synchronise the Makefile with the uPheno repo.

(Note: it would probably be good to add a GitHub action that does that automatically.)

Phenotype Ontology Editors' Workflow¶

Useful links¶

- Phenotype Ontology Working Group Meetings agenda and minutes gdoc.
- phenotype-ontologies slack channel: to send meeting reminders; ask for agenda items; questions; discussions etc.
- Dead simple owl design pattern (DOS-DP) Documentation
- Validate DOS-DP yaml templates:
  - yamllint: yaml syntax validator
    - Installing yamllint: brew install yamllint
    - Configuring yamllint: You can ignore the "error line too long" yaml syntax errors for dos-dp yaml templates. You can create a custom configuration file for yamllint in your home folder:

      The content of the config file should look like this:

      # Custom configuration file for yamllint
      # It extends the default conf by adjusting some options.

      extends: default

      rules:
        line-length:
          max: 80 # 80 chars should be enough, but don't fail if a line is longer
      #   max: 140  # allow long lines
          level: warning
          allow-non-breakable-words: true
          allow-non-breakable-inline-mappings: true

      The custom config should turn the "error line too long" errors to warnings.
  - DOS-DP validator: DOS-DP format validator
    - Installing: pip install dosdp
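The yamllint configuration step above could be scripted roughly as follows. This is only a sketch: it assumes yamllint picks up its user-level configuration from ~/.config/yamllint/config (or $XDG_CONFIG_HOME/yamllint/config when that variable is set), and the function name write_yamllint_config is hypothetical, not part of any tool.

```sh
# Hypothetical helper: write a custom yamllint config that downgrades
# "line too long" from an error to a warning.
# Assumption: yamllint reads ~/.config/yamllint/config by default.
write_yamllint_config() {
  config_dir="${XDG_CONFIG_HOME:-$HOME/.config}/yamllint"
  mkdir -p "$config_dir"
  cat > "$config_dir/config" <<'EOF'
# Custom configuration file for yamllint
extends: default

rules:
  line-length:
    max: 80
    level: warning
    allow-non-breakable-words: true
    allow-non-breakable-inline-mappings: true
EOF
  # Print the path so the caller can check where the file was written
  echo "$config_dir/config"
}
```

After calling write_yamllint_config once, running yamllint on a DOS-DP template should report long lines as warnings rather than errors.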


Patternisation is the process of ensuring that all entity quality (EQ) descriptions from textual phenotype term definitions have a logical definition pattern. A pattern is a standard format for describing a phenotype that includes a quality and an entity. For example, "increased body size" is a pattern that includes the quality "increased" and the entity "body size." The goal of patternisation is to make the EQ descriptions more uniform and machine-readable, which facilitates downstream analysis.

1. Identify a group of related phenotypes from diverse organisms¶

The first step in the Phenotype Ontology Editors' Workflow is to identify a group of related phenotypes from diverse organisms. This can be done by considering proposals from phenotype editors or by using the pattern suggestion pipeline.
The phenotype editors may propose a group of related phenotypes based on their domain knowledge, while the pattern suggestion pipeline uses semantic similarity and shared Phenotype And Trait Ontology (PATO) quality terms to identify patterns in phenotype terms from different organism-specific ontologies.

2. Propose a phenotype pattern¶

Once a group of related phenotypes is identified, the editors propose a phenotype pattern. To do this, they create a GitHub issue to request the phenotype pattern template in the uPheno repository.
Alternatively, a new template can be proposed at a phenotype editors' meeting, which can lead to the creation of a new term request as a GitHub issue.
Ideally, the proposed phenotype pattern should include an appropriate PATO quality term for logical definition, use cases, term examples, and a textual definition pattern for the phenotype terms.

3. Discuss the new phenotype pattern draft at the regular uPheno phenotype editors meeting¶

The next step is to discuss the new phenotype pattern draft at the regular uPheno phenotype editors meeting. During the meeting, the editors' comments and suggestions for improvements are collected as comments on the DOS-DP yaml template in the corresponding GitHub pull request. Based on the feedback and discussions, a consensus on improvements should be achieved.

The name of the DOS-DP yaml template should start with a lower case letter, should be informative, and must include the PATO quality term.

A GitHub pull request is created for the DOS-DP yaml template.

- A DOS-DP phenotype pattern template example:

---
pattern_name: ??pattern_and_file_name

pattern_iri: http://purl.obolibrary.org/obo/upheno/patterns-dev/??pattern_and_file_name.yaml

description: 'A description that helps people choose this pattern for the appropriate scenario.'

# examples:
#   - example_IRI-1  # term name
#   - example_IRI-2  # term name
#   - example_IRI-3  # term name
#   - http://purl.obolibrary.org/obo/XXXXXXXXXX  # XXXXXXXX

contributors:
  - https://orcid.org/XXXX-XXXX-XXXX-XXXX  # Yyy Yyyyyyyyy

classes:
  process_quality: PATO:0001236
  abnormal: PATO:0000460
  anatomical_entity: UBERON:0001062

relations:
  characteristic_of: RO:0000052
  has_modifier: RO:0002573
  has_part: BFO:0000051

annotationProperties:
  exact_synonym: oio:hasExactSynonym
  related_synonym: oio:hasRelatedSynonym
  xref: oio:hasDbXref

vars:
  var??: "'anatomical_entity'"  # "'variable_range'"

name:
  text: "trait ?? %s"
  vars:
    - var??

annotations:
  - annotationProperty: exact_synonym
    text: "? of %s"
    vars:
      - var??

  - annotationProperty: related_synonym
    text: "? %s"
    vars:
      - var??

  - annotationProperty: xref
    text: "AUTO:patterns/patterns/chemical_role_attribute"

def:
  text: "A trait that ?? %s."
  vars:
    - var??

equivalentTo:
  text: "'has_part' some (
    'XXXXXXXXXXXXXXXXX' and
    ('characteristic_of' some %s) and
    ('has_modifier' some 'abnormal')
    )"
  vars:
    - var??
...

4. Review the candidate phenotype pattern¶

Once a consensus on the improvements for a particular template is achieved, they are incorporated into the DOS-DP yaml file. Typically, the improvements are applied to the template some time before a subsequent ontology editors' meeting. There should be enough time for off-line review of the proposed pattern to allow community feedback.

The improved phenotype pattern candidate draft should get approval from the community at one of the regular ontology editors' calls or in a GitHub comment.

The ontology editors who approve the pattern provide their ORCIDs, and they are credited as contributors in an appropriate field of the DOS-DP pattern template.

5. Add the community-approved phenotype pattern template to uPheno¶

Once the community-approved phenotype pattern template is created, it is added to the uPheno GitHub repository.

The approved DOS-DP yaml phenotype pattern template should pass quality control (QC) steps:

1. Validate yaml syntax: yamllint
2. Validate DOS-DP: use the DOSDP Validator.

* To validate a template using the command line interface, execute:

```sh
yamllint
```

After successfully passing QC, the responsible editor merges the approved pull request, and the phenotype pattern becomes part of the uPheno phenotype pattern template collection.
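The two QC steps could be chained in a small wrapper like the one below. This is a sketch under assumptions: the function name validate_pattern is hypothetical, both tools are assumed to be installed and on the PATH, and the DOS-DP step is assumed to be invoked as dosdp validate -i with the path of a template (or folder of templates), as provided by the dosdp package.

```sh
# Hypothetical wrapper around the two QC steps; stops at the first failure.
# Assumes yamllint and dosdp (pip install dosdp) are available on the PATH.
validate_pattern() {
  template="$1"                       # path to a DOS-DP yaml template
  yamllint "$template" || return 1    # step 1: yaml syntax
  dosdp validate -i "$template"       # step 2: DOS-DP format
}
```

For example, validate_pattern src/patterns/dosdp-dev/my_pattern.yaml (an illustrative path) would return a non-zero status if either check fails, which makes it easy to reuse in a CI job.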

Pattern merge - replace workflow¶

This document describes how to merge new DOSDP design patterns into an ODK ontology and then replace the old classes with the new ones.

1. You need the tables in tsv format with the DOSDP filler data. Download the tsv tables to¶

$ODK-ONTOLOGY/src/patterns/data/default/

Make sure that the tsv filenames match those of the relevant yaml DOSDP pattern files.
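The filename check described above can be automated. The following is a minimal sketch, not part of the ODK: check_pattern_tables is a hypothetical helper, and it assumes that a table named X.tsv is expected to have a pattern file named X.yaml in the given pattern directory.

```sh
# Hypothetical helper: report TSV tables that have no same-named DOSDP pattern.
# Returns 0 if every table has a matching yaml file, 1 otherwise.
check_pattern_tables() {
  data_dir="$1"      # e.g. src/patterns/data/default
  pattern_dir="$2"   # e.g. src/patterns/dosdp-patterns
  missing=0
  for tsv in "$data_dir"/*.tsv; do
    [ -e "$tsv" ] || continue            # directory contains no TSV files
    name=$(basename "$tsv" .tsv)
    if [ ! -f "$pattern_dir/$name.yaml" ]; then
      echo "no pattern for $name.tsv"
      missing=1
    fi
  done
  return "$missing"
}
```

Running it before the merge steps gives an early warning about misnamed tables instead of a failure later in the pipeline.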

2. Add the new matching pattern yaml filename to¶

$ODK-ONTOLOGY/src/patterns/dosdp-patterns/external.txt

3. Import the new pattern templates that you have just added to the external.txt list from external sources into the current working repository¶

cd ODK-ONTOLOGY/src/ontology
sh run.sh make update_patterns

4. make definitions.owl¶

cd ODK-ONTOLOGY/src/ontology
sh run.sh make ../patterns/definitions.owl IMP=false

5. Remove old classes and replace them with the equivalent and patternised new classes¶

cd ODK-ONTOLOGY/src/ontology
sh run.sh make remove_patternised_classes

6. Announce the pattern migration in an appropriate channel, for example on the phenotype-ontologies Slack channel.¶

For example:

I have migrated the ... table and changed the tab colour to blue.
You can delete the tab if you wish.

How to run a uPheno 2 release¶

To run a release, you must first complete the steps to set up S3.

- Clone https://github.com/obophenotype/upheno-dev

cd src/scripts
sh upheno_pipeline.sh
cd ../ontology
make prepare_upload S3_VERSION=2022-06-19
make deploy S3_VERSION=2022-06-19

How to set yourself up for S3¶

To be able to upload a new uPheno release to the uPheno S3 bucket, you need to set yourself up for S3 first.

1. Download and install AWS CLI¶

The most convenient way to interact with S3 is the AWS Command Line Interface (CLI). You can find the installers and install instructions on that page (they differ depending on your operating system):

- For Mac
- For Windows

2. Obtain secrets from BBOP¶

Next, you need to ask someone at BBOP (such as Chris Mungall or Seth Carbon) to provide you with an account that gives you access to the BBOP S3 buckets. You will have to provide a username. You will receive:

- User name
- Access key ID
- Secret access key
- Console link to sign into bucket

3. Add configuration for secrets¶

You will now have to set up your local system. You will create two files. In ~/.aws/credentials, make sure you add the correct keys as provided above.
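As a rough sketch of this step, the helper below creates the two files in the layout the AWS CLI conventionally uses. Several things here are assumptions rather than facts from this guide: that the two files are the standard config and credentials files, the region value, the placeholder key values, and the function name write_aws_files itself.

```sh
# Hypothetical helper: create the two AWS CLI files in a given directory
# (normally ~/.aws). The key values are placeholders and the region is an
# assumption; replace them with the details you received from BBOP.
write_aws_files() {
  aws_dir="$1"
  mkdir -p "$aws_dir"
  cat > "$aws_dir/config" <<'EOF'
[default]
region = us-east-1
output = json
EOF
  cat > "$aws_dir/credentials" <<'EOF'
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
EOF
  chmod 600 "$aws_dir/credentials"   # keep the secret readable by you only
}
```

Calling write_aws_files "$HOME/.aws" and then editing the placeholders is one option; running aws configure, which prompts for the same values interactively, is another.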

4. Write to your bucket¶

Now you should be set up to write to your S3 bucket. Note that for your data to be accessible through https after your upload, you need to add --acl public-read.

aws s3 sync --exclude "*.DS_Store*" my/data-dir s3://bbop-ontologies/myproject/data-dir --acl public-read

If you have previously pushed data to the same location, you won't be able to set it to "publicly readable" by simply rerunning the sync command. If you want to publish previously private data, follow the instructions here, e.g.:

Introduction to Continuous Integration Workflows with ODK¶

Historically, most repos have been using Travis CI for continuous integration testing and building, but due to runtime restrictions, we recently switched a lot of our repos to GitHub Actions. You can set up your repo with CI by adding this to your configuration file (src/ontology/upheno-odk.yaml):

When updating your repo, you will notice a new file being added: .github/workflows/qc.yml. This file contains your CI logic, so if you need to change or add anything, this is the place!

Alternatively, if your repo is in GitLab instead of GitHub, you can set up your repo with GitLab CI by adding this to your configuration file (src/ontology/upheno-odk.yaml):

This will add a file called .gitlab-ci.yml in the root of your repo.

Editors Workflow¶

The editors workflow is one of the formal workflows to ensure that the ontology is developed correctly according to ontology engineering principles. There are a few different editors workflows:

- Local editing workflow: Editing the ontology in your local environment by hand, using tools such as Protégé, ROBOT templates or DOSDP patterns.
- Completely automated data pipeline (GitHub Actions)
- DROID workflow

This document only covers the first editing workflow, but more will be added in the future.

Local editing workflow¶

Workflow requirements:

- git
- github
- docker
- editing tool of choice, e.g. Protégé, your favourite text editor, etc

1. Create issue¶

Ensure that there is a ticket on your issue tracker that describes the change you are about to make. While this seems optional, this is a very important part of the social contract of building an ontology - no change to the ontology should be performed without a good ticket, describing the motivation and nature of the intended change.

2. Update main branch¶

In your local environment (e.g. your laptop), make sure you are on the main (prev. master) branch and ensure that you have all the upstream changes, for example:
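A minimal sketch of this step using standard git commands is shown below. The wrapper function sync_main is hypothetical and only there to make the branch name configurable; the branch is assumed to be called main unless another name (such as master) is passed.

```sh
# Sketch: switch to the main branch and pull in the upstream changes.
# The branch name defaults to "main"; pass "master" for older repositories.
sync_main() {
  branch="${1:-main}"
  git checkout "$branch" && git pull
}
```

Run sync_main (or sync_main master) from anywhere inside your local clone before creating a feature branch.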

3. Create feature branch¶

Create a new branch. Per convention, we try to use meaningful branch names such as:

- issue23removeprocess (where issue 23 is the related issue on GitHub)
- issue26addcontributor
- release20210101 (for releases)

On your command line, this looks like this:
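The branch creation could be sketched as follows. The helper start_feature_branch is hypothetical; it simply assembles a name in the style of the examples above (the word issue, the issue number, and a short description with spaces removed and letters lower-cased) and passes it to git checkout -b.

```sh
# Hypothetical helper following the naming convention above:
# "issue" + issue number + short lower-case description without spaces.
start_feature_branch() {
  issue="$1"
  summary=$(printf '%s' "$2" | tr -d ' ' | tr '[:upper:]' '[:lower:]')
  git checkout -b "issue${issue}${summary}"
}
```

For instance, start_feature_branch 23 "remove process" creates and switches to a branch named issue23removeprocess.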

4. Perform edit¶

Using your editor of choice, perform the intended edit. For example:

Protégé

- Open src/ontology/upheno-edit.owl in Protégé
- Make the change
- Save the file

TextEdit

- Open src/ontology/upheno-edit.owl in TextEdit (or Sublime, Atom, Vim, Nano)
- Make the change
- Save the file

Consider the following when making the edit.

- According to our development philosophy, the only places that should be manually edited are:
  - src/ontology/upheno-edit.owl
  - Any ROBOT templates you choose to use (the TSV files only)
  - Any DOSDP data tables you choose to use (the TSV files, and potentially the associated patterns)
  - components (anything in src/ontology/components), see here.
- Imports should not be edited (any edits will be flushed out with the next update). However, refreshing imports is a potentially breaking change - and is discussed elsewhere.
- Changes should usually be small. Adding or changing 1 term is great. Adding or changing 10 related terms is ok. Adding or changing 100 or more terms at once should be considered very carefully.

4. Check the Git diff¶

This step is very important. Rather than simply trusting that your change had the intended effect, we should always use a git diff as a first pass for sanity checking.

In our experience, having a visual git client like GitHub Desktop or Sourcetree is really helpful for this part. In case you prefer the command line:
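On the command line, a first-pass check might look like the sketch below. It uses only standard git commands; the wrapper name review_changes is hypothetical.

```sh
# Sketch: list the changed files first, then show the line-level changes.
review_changes() {
  git status --short && git diff
}
```

If the diff touches files or lines you did not intend to change, fix that before moving on to quality control.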

5. Quality control¶

Now it's time to run your quality control checks. This can either happen locally (5a) or through your continuous integration system (7/5b).

5a. Local testing¶

If you choose to run your tests locally:

This will run the whole set of configured ODK tests, including your change. If you have a complex DOSDP pattern pipeline, you may want to add PAT=false to skip the potentially lengthy process of rebuilding the patterns.
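A local test run could be wrapped as in the sketch below. It rests on assumptions: that you are in src/ontology, that the ODK wrapper script run.sh is present there, and that the test suite is exposed as a make target called test. IMP=false and PAT=false are the same variables used elsewhere in these docs; the function name run_local_tests is hypothetical.

```sh
# Sketch, assuming the ODK wrapper script run.sh and a make target "test".
# IMP=false skips refreshing imports; PAT=false skips rebuilding patterns.
run_local_tests() {
  skip_patterns="${1:-no}"
  if [ "$skip_patterns" = "yes" ]; then
    sh run.sh make IMP=false PAT=false test
  else
    sh run.sh make IMP=false test
  fi
}
```

Use run_local_tests yes when rebuilding the patterns would take too long for a quick check.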

+

6. Pull request¶

When you are happy with the changes, you commit your changes to your feature branch, push them upstream (to GitHub) and create a pull request. For example:

git add NAMEOFCHANGEDFILES
git commit -m "Added biological process term #12"
git push -u origin issue23removeprocess

Then you go to your project on GitHub, and create a new pull request from the branch, for example: https://github.com/INCATools/ontology-development-kit/pulls

There is a lot of great advice on how to write pull requests, but at the very least you should:

- Mention the tickets affected: see #23 to link to a related ticket, or fixes #23 if, by merging this pull request, the ticket is fixed. Tickets in the latter case will be closed automatically by GitHub when the pull request is merged.
- Summarise the changes in a few sentences. Consider the reviewer: what would they want to know right away.
- If the diff is large, provide instructions on how to review the pull request best (sometimes, there are many changed files, but only one important change).

7/5b. Continuous Integration Testing¶

If you didn't run any local quality control checks (see 5a), you should have Continuous Integration (CI) set up, for example:

- Travis
- GitHub Actions

More on how to set this up here. Once the pull request is created, the CI will automatically trigger. If all is fine, it will show up green, otherwise red.

8. Community review¶

Once all the automatic tests have passed, it is important to put a second set of eyes on the pull request. Ontologies are inherently social, in that they represent some kind of community consensus on how a domain is organised conceptually. This may sound like highbrow talk, but it is very important that as an ontology editor, you have your work validated by the community you are trying to serve (e.g. your colleagues, other contributors etc.). In our experience, it is hard to get more than one review on a pull request - two is great. You can set up GitHub branch protection to actually require a review before a pull request can be merged! We recommend this.

This step seems daunting to some hopelessly under-resourced ontologies, but we recommend putting this high up on your list of priorities - train a colleague, reach out!

9. Merge and cleanup¶

When the QC is green and the reviews are in (approvals), it is time to merge the pull request. After the pull request is merged, remember to delete the branch as well (this option will show up as a big button right after you have merged the pull request). If you have not done so, close all the associated tickets fixed by the pull request.

10. Changelog (Optional)¶

It is sometimes difficult to keep track of changes made to an ontology. Some ontology teams opt to document changes in a changelog (simply a text file in your repository) so that when release day comes, you know everything you have changed. This is advisable at least for major changes (such as a new release system, a new pattern or template etc.).

Manage automated tests

Constraint violation checks¶

We can define custom checks using SPARQL. SPARQL queries define bad modelling patterns (missing labels, misspelt URIs, and many more) in the ontology. If these queries return any results, then the build will fail. Custom checks are designed to be run as part of GitHub Actions Continuous Integration testing, but they can also run locally.

Steps to add a constraint violation check:¶

1. Add the SPARQL query in src/sparql. The name of the file should end with -violation.sparql. Please choose a name that makes clear which violation the query checks for.
2. Add the name of the new file to the ODK configuration file src/ontology/uberon-odk.yaml:
   - Include the name of the file (without the -violation.sparql part) in the list inside the key custom_sparql_checks, which is inside the robot_report key.
   - If the robot_report or custom_sparql_checks keys are not available, please add this code block to the end of the file.
3. Update the repository so your new SPARQL check will be included in the QC.

Updating the Documentation¶

The documentation for UPHENO is managed in two places (relative to the repository root):

- The docs directory contains all the files that pertain to the content of the documentation (more below)
- The mkdocs.yaml file contains the documentation config, in particular its navigation bar and theme.

The documentation is hosted using GitHub pages, on a special branch of the repository (called gh-pages). It is important that this branch is never deleted - it contains all the files GitHub pages needs to render and deploy the site. It is also important to note that the gh-pages branch should never be edited manually. All changes to the docs happen inside the docs directory on the main branch.

Editing the docs¶

Changing content¶

All the documentation is contained in the docs directory, and is managed in Markdown. Markdown is a very simple and convenient way to produce text documents with formatting instructions, and is very easy to learn - it is also used, for example, in GitHub issues. This is a normal editing workflow:

- Open the .md file you want to change in an editor of choice (a simple text editor is often best). IMPORTANT: Do not edit any files in the docs/odk-workflows/ directory. These files are managed by the ODK system and will be overwritten when the repository is upgraded! If you wish to change these files, make an issue on the ODK issue tracker.
- Perform the edit and save the file
- Commit the file to a branch, and create a pull request as usual.
- If your development team likes your changes, merge the docs into the master branch.
- Deploy the documentation (see below)

Deploy the documentation¶

The documentation is not automatically updated from the Markdown, and needs to be deployed deliberately. To do this, perform the following steps:

1. In your terminal, navigate to the edit directory of your ontology, e.g.:
2. Now you are ready to build the docs as follows:

   Mkdocs now sets off to build the site from the markdown pages. You will be asked to

   - Enter your username
   - Enter your password (see here for using GitHub access tokens instead).
     IMPORTANT: Using password based authentication will be deprecated this year (2021). Make sure you read up on personal access tokens if that happens!

   If everything was successful, you will see a message similar to this one:

3. Just to double check, you can now navigate to your documentation pages (usually https://obophenotype.github.io/upheno/). Just make sure you give GitHub 2-5 minutes to build the pages!

The release workflow¶

The release workflow recommended by the ODK is based on GitHub releases and works as follows:

- Run a release with the ODK
- Review the release
- Merge to main branch
- Create a GitHub release

These steps are outlined in detail in the following.

Run a release with the ODK¶

Preparation:

- Ensure that all your pull requests are merged into your main (master) branch
- Make sure that all changes to master are committed to GitHub (git status should say that there are no modified files)
- Locally make sure you have the latest changes from master (git pull)
- Checkout a new branch (e.g. git checkout -b release-2021-01-01)
- You may or may not want to refresh your imports as part of your release strategy (see here)
- Make sure you have the latest ODK installed by running docker pull obolibrary/odkfull

To actually run the release, you:

- Open a command line terminal window and navigate to the src/ontology directory (cd upheno/src/ontology)
- Run release pipeline: sh run.sh make prepare_release -B. Note that for some ontologies, this process can take up to 90 minutes - especially if there are large ontologies you depend on, like PRO or CHEBI.
- If everything went well, you should see the following output on your machine: Release files are now in ../.. - now you should commit, push and make a release on your git hosting site such as GitHub or GitLab.

This will create all the specified release targets (OBO, OWL, JSON, and the variants, ont-full and ont-base) and copy them into your release directory (the top level of your repo).

Review the release¶

- (Optional) Rough check. This step is frequently skipped, but for the more paranoid among us (like the author of this doc), this is a 3 minute additional effort for some peace of mind. Open the main release (upheno.owl) in your favourite development environment (e.g. Protégé) and eyeball the hierarchy. We recommend two simple checks:
  - Does the very top level of the hierarchy look ok? This means that all new terms have been imported/updated correctly.
  - Does at least one change that you know should be in this release appear? For example, a new class. This means that the release was actually based on the recent edit file.
- Commit your changes to the branch and make a pull request
- In your GitHub pull request, review the following three files in detail (based on our experience):
  - upheno.obo - this reflects a useful subset of the whole ontology (everything that can be covered by OBO format). OBO format has one big advantage: it is very easy to review!
  - upheno-base.owl - this reflects the asserted axioms in your ontology that you have actually edited.
  - Ideally also take a look at upheno-full.owl, which may reveal interesting new inferences you did not know about. Note that the diff of this file is sometimes quite large.
- As with every pull request, we recommend always employing a second set of eyes when reviewing a PR!

Merge the main branch¶

Once your CI checks have passed and your reviews are completed, you can merge the branch into your main branch (don't forget to delete the branch afterwards: a big button will appear after the merge is finished).

Create a GitHub release¶

- Go to your releases page on GitHub by navigating to your repository and then clicking on releases (usually on the right, for example: https://github.com/obophenotype/upheno/releases). Then click "Draft new release".
- As the tag version, you need to choose the date on which your ontologies were built. You can find this, for example, by looking at the `upheno.obo` file and checking the `data-version:` property. The date needs to be prefixed with a `v`, so, for example, `v2020-02-06`.
- You can write whatever you want in the release title, but we typically write the date again. The description underneath should contain a concise list of changes or term additions.
- Click "Publish release". Done.
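If you would rather not read the tag date off by eye, it can be derived from the OBO header. The following is a minimal sketch and not part of the ODK: the function name `release_tag_from_obo_header` is hypothetical, and it assumes that the `data-version:` value contains an ISO date (YYYY-MM-DD) somewhere in it.

```python
import re
from typing import Optional


def release_tag_from_obo_header(obo_text: str) -> Optional[str]:
    """Return a tag such as 'v2020-02-06' based on the data-version header line.

    Returns None if the header has no data-version line or the line has no date.
    """
    for line in obo_text.splitlines():
        # Stanzas such as [Term] mark the end of the OBO header.
        if line.startswith("["):
            break
        if line.startswith("data-version:"):
            match = re.search(r"\d{4}-\d{2}-\d{2}", line)
            return f"v{match.group(0)}" if match else None
    return None
```

For a header containing `data-version: releases/2020-02-06`, this returns `v2020-02-06`, which you can paste into the tag field of the release form.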

Debugging typical ontology release problems¶

Problems with memory¶

When you are dealing with large ontologies, you need a lot of memory. When you see error messages relating to large ontologies such as CHEBI, PRO, NCBITAXON, or Uberon, you should think of memory first, see here.

Problems when using OBO format based tools¶

Sometimes you will get cryptic error messages when using legacy tools that rely on OBO format, such as the ontology release tool (OORT), which is also available as part of the ODK docker container. In these cases, you need to track down which axiom or annotation actually caused the breakdown. In our experience (in about 60% of the cases) the problem lies with duplicate annotations (def, comment), which are illegal in OBO. Here is an example recipe for dealing with such a problem:

- If you get a message like `make: *** [cl.Makefile:84: oort] Error 255`, you might have an OORT error.
- To debug this, enter `sh run.sh make IMP=false PAT=false oort -B` in your terminal (assuming you are already in the ontology folder in your directory).
- This should show you where the error is in the log (e.g. multiple different definitions). WARNING: THE FIX BELOW IS NOT IDEAL, YOU SHOULD ALWAYS TRY TO FIX UPSTREAM IF POSSIBLE.
- Open `upheno-edit.owl` in Protégé, find the offending term, delete all offending annotations (e.g. delete ALL definitions if the problem was "multiple def tags not allowed") and save. While this is not ideal, as it removes all definitions from that term, the definition will come back once the term is fixed in the ontology it was imported from and the import is refreshed.
- Rerun `sh run.sh make IMP=false PAT=false oort -B` and, if it all passes, commit your changes to a branch and make a pull request as usual.
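When the log is hard to read, it can help to scan an OBO file for repeated tags directly. The sketch below is an illustration only and not an ODK or OORT feature: the function name `find_duplicate_tags` is hypothetical, and the parsing is deliberately naive (it treats every `tag: value` line inside a stanza as one occurrence of that tag).

```python
from collections import Counter
from typing import Dict, List, Tuple


def find_duplicate_tags(
    obo_text: str, tags: Tuple[str, ...] = ("def", "comment")
) -> Dict[str, List[str]]:
    """Map each stanza id to the tags from `tags` that occur more than once in it."""
    # Split the file into chunks at stanza headers such as [Term] or [Typedef].
    chunks: List[List[str]] = []
    current: List[str] = []
    for raw in obo_text.splitlines():
        line = raw.strip()
        if line.startswith("[") and line.endswith("]"):
            chunks.append(current)
            current = []
        elif line:
            current.append(line)
    chunks.append(current)

    duplicates: Dict[str, List[str]] = {}
    for stanza in chunks[1:]:  # the first chunk is the file header, not a stanza
        counts = Counter(line.split(":", 1)[0] for line in stanza if ":" in line)
        stanza_id = next(
            (line.split(":", 1)[1].strip() for line in stanza if line.startswith("id:")),
            None,
        )
        repeated = [tag for tag in tags if counts[tag] > 1]
        if stanza_id and repeated:
            duplicates[stanza_id] = repeated
    return duplicates
```

Running it on the text of an OBO export returns a dictionary keyed by term id, so an empty result means no term carries more than one `def` or `comment` line.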


Managing your ODK repository¶

Updating your ODK repository¶

Your ODK repository's configuration is managed in `src/ontology/upheno-odk.yaml`. Once you have made your changes, you can apply them to the repository by running `sh run.sh make update_repo`.

There are a large number of options that can be set to configure your ODK, but we will only discuss a few of them here.

NOTE for Windows users: You may get a cryptic failure such as `Set Illegal Option -` if the update script located in `src/scripts/update_repo.sh` was saved using Windows line endings. These need to be changed to Unix line endings. In Notepad++, for example, you can click on Edit->EOL Conversion->Unix LF to change this.
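If you do not have Notepad++ at hand, the same conversion can be scripted. This is a minimal sketch under the assumption that the only problem is CRLF line endings; the helper names `to_unix_line_endings` and `convert_file` are hypothetical and not part of the ODK.

```python
from pathlib import Path


def to_unix_line_endings(data: bytes) -> bytes:
    """Replace Windows (CRLF) line endings with Unix (LF) line endings."""
    return data.replace(b"\r\n", b"\n")


def convert_file(path: str) -> bool:
    """Rewrite the file in place; return True if anything was changed."""
    file_path = Path(path)
    original = file_path.read_bytes()
    converted = to_unix_line_endings(original)
    if converted != original:
        file_path.write_bytes(converted)
        return True
    return False
```

Calling `convert_file("src/scripts/update_repo.sh")` from the repository root would fix the script mentioned above; working on bytes avoids any accidental change of the file's encoding.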

Managing imports¶

You can use the update repository workflow described on this page to perform the following operations on your imports:

- Add a new import
- Modify an existing import
- Remove an import you no longer want
- Customise an import

We will discuss all of these workflows in the following sections.

Add new import¶

To add a new import, you first edit your ODK config as described above, adding an `id` to the `products` list in the `import_group` section (for the sake of this example, we assume you already import RO, and your goal is to also import GO).

Note: your ODK file should only have one `import_group`, which can contain multiple imports (in the `products` section). Next, you run the update repo workflow to apply these changes. Note that by default, this module is going to be an SLME Bottom module, see here. To change that or customise your module, see section "Customise an import". To finalise the addition of your import, perform the following steps:

- Add an import statement to your `src/ontology/upheno-edit.owl` file. We suggest doing this in a text editor, by simply copying an existing import declaration and renaming it to the new ontology import.
- Add your import's redirect to your catalog file `src/ontology/catalog-v001.xml` (the note below shows what such an entry looks like).
- Test whether everything is in order:
  - Refresh your import.
  - Open the ontology in your ontology editor of choice (e.g. Protégé) and ensure that the expected terms are imported.

Note: The catalog file `src/ontology/catalog-v001.xml` has one purpose: redirecting imports from URLs to local files. For example, if you have an import declaration for http://purl.obolibrary.org/obo/upheno/imports/go_import.owl in your editors file (the ontology) and

    <uri name="http://purl.obolibrary.org/obo/upheno/imports/go_import.owl" uri="imports/go_import.owl"/>

in your catalog, tools like robot or Protégé will use the statement in the catalog file to redirect the URL http://purl.obolibrary.org/obo/upheno/imports/go_import.owl to the local file `imports/go_import.owl` (which is in your `src/ontology` directory).
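To make the redirect mechanism concrete, here is a small sketch of what a tool does with the catalog. It is an illustration only, not how robot or Protégé are implemented: the function names `load_redirects` and `resolve` are hypothetical, and the sketch only looks at `uri` elements and ignores every other catalog feature.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def load_redirects(catalog_xml: str) -> Dict[str, str]:
    """Collect name -> local path pairs from the <uri> entries of a catalog."""
    redirects: Dict[str, str] = {}
    for element in ET.fromstring(catalog_xml).iter():
        # Tags may be namespace-qualified, e.g. '{urn:oasis:...}uri'.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "uri" and element.get("name") and element.get("uri"):
            redirects[element.get("name")] = element.get("uri")
    return redirects


def resolve(iri: str, redirects: Dict[str, str]) -> str:
    """Return the local file for an import IRI, or the IRI itself if not redirected."""
    return redirects.get(iri, iri)
```

With the GO entry shown above loaded, resolving `http://purl.obolibrary.org/obo/upheno/imports/go_import.owl` gives `imports/go_import.owl`, while any IRI without a catalog entry is left untouched and would be fetched from the web.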

Modify an existing import¶

If you simply wish to refresh your import in light of new terms, see here. If you wish to change the type of your module, see section "Customise an import".

Remove an existing import¶

To remove an existing import, perform the following steps:

- Remove the import declaration from your `src/ontology/upheno-edit.owl`.
- Remove the id from your `src/ontology/upheno-odk.yaml`, e.g. `- id: go` from the list of `products` in the `import_group`.
- Run the update repo workflow.
- Delete the associated files manually:
  - `src/imports/go_import.owl`
  - `src/imports/go_terms.txt`
- Remove the respective entry from the `src/ontology/catalog-v001.xml` file.

Customise an import¶

By default, an import module extracted from a source ontology will be an SLME module, see here. There are various options to change the default.

Setting `module_type: filter` for the go product in your repo config (`src/ontology/upheno-odk.yaml`) will switch the go import from an SLME module to a simple ROBOT filter module.

A ROBOT filter module essentially imports all external terms declared by your ontology (see here on how to declare external terms to be imported). Note that the filter module does not consider terms/annotations from namespaces other than the base namespace of the ontology itself. For example, in the example of GO above, only annotations / axioms related to the GO base IRI (http://purl.obolibrary.org/obo/GO_) would be considered. This behaviour can be changed by adding additional base IRIs as follows:

    import_group:
      products:
        - id: go
          module_type: filter
          base_iris:
            - http://purl.obolibrary.org/obo/GO_
            - http://purl.obolibrary.org/obo/CL_
            - http://purl.obolibrary.org/obo/BFO
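The effect of the `base_iris` list can be pictured as a simple prefix test on term IRIs. The sketch below only illustrates that idea and is not how ROBOT implements its filter: the function name `in_base_namespaces` is hypothetical, and real filtering operates on axioms and annotations rather than on a plain list of IRIs.

```python
from typing import Iterable, List


def in_base_namespaces(iris: Iterable[str], base_iris: Iterable[str]) -> List[str]:
    """Keep only the IRIs that start with one of the configured base IRIs."""
    prefixes = tuple(base_iris)
    return [iri for iri in iris if iri.startswith(prefixes)]
```

With the three base IRIs from the configuration above, a GO, CL or BFO term passes the test, while a term from any other namespace (for example RO) is dropped.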

If you wish to customise your import entirely, you can specify your own ROBOT command to do so. To do that, set `module_type: custom` for the import in your repo config (`src/ontology/upheno-odk.yaml`).

Now add a new goal in your custom Makefile (`src/ontology/upheno.Makefile`, not `src/ontology/Makefile`).

    imports/go_import.owl: mirror/go.owl imports/go_terms_combined.txt
        if [ $(IMP) = true ]; then $(ROBOT) query -i $< --update ../sparql/preprocess-module.ru \
            extract -T imports/go_terms_combined.txt --force true --individuals exclude --method BOT \
            query --update ../sparql/inject-subset-declaration.ru --update ../sparql/postprocess-module.ru \
            annotate --ontology-iri $(ONTBASE)/$@ $(ANNOTATE_ONTOLOGY_VERSION) --output $@.tmp.owl && mv $@.tmp.owl $@; fi

Now feel free to change this goal to do whatever you wish it to do! It probably makes sense (although it is not strictly necessary) to leave most of the goal intact and replace only the `extract` step with another ROBOT pipeline.

Add a component¶

A component is an import which belongs to your ontology, i.e. is managed by you and your team.

- Open `src/ontology/upheno-odk.yaml`.
- If you don't have it yet, add a new top-level section `components`.
- Under the `components` section, add a new section called `products`. This is where all your components are specified.
- Under the `products` section, add a new component, e.g. `- filename: mycomp.owl`.

When running `sh run.sh make update_repo`, a new file `src/ontology/components/mycomp.owl` will be created, which you can edit as you see fit. Typical ways to edit:

- Using a ROBOT template to generate the component (see below).
- Manually curating the component separately with Protégé or any other editor.
- Providing a `components/mycomp.owl:` make target in `src/ontology/upheno.Makefile` together with a custom command to generate the component.
  - WARNING: Note that the custom rule to generate the component MUST NOT depend on any other ODK-generated file such as seed files and the like (see issue).
- Providing an additional attribute for the component in `src/ontology/upheno-odk.yaml`, `source`, to specify that this component should simply be downloaded from somewhere on the web.

Adding a new component based on a ROBOT template¶

Since ODK 1.3.2, it is possible to simply link a ROBOT template to a component without having to specify any of the import logic. In order to add a new component that is connected to one or more template files, follow these steps:

- Open `src/ontology/upheno-odk.yaml`.
- Make sure that `use_templates: TRUE` is set in the global project options. You should also make sure that `use_context: TRUE` is set in case you are using prefixes in your templates that are not known to `robot`, such as `OMOP:`, `CPONT:` and more. All non-standard prefixes you are using should be added to `config/context.json`.
- Add another component to the `products` section.
- To make this component template-driven, simply set `use_template: TRUE`. This will create an empty template for you in the templates directory, which will automatically be processed when recreating the component (e.g. `run.bat make recreate-mycomp`).
- If you want to use more than one template, use the `templates` field to add as many template names as you wish. ODK will look for them in the `src/templates` directory.
- Advanced: If you want to provide additional processing options, you can use the `template_options` field. This should be a string with options from robot template. One typical example of additional options you may want to provide is `--add-prefixes config/context.json`, to ensure the prefix map of your context is provided to `robot`, see above.

Example:

    components:
      products:
        - filename: mycomp.owl
          use_template: TRUE
          template_options: --add-prefixes config/context.json
          templates:
            - template1.tsv
            - template2.tsv

Note: if your mirror is particularly large and complex, read this ODK recommendation.


Repository structure¶

The main kinds of files in the repository:

- Release files
- Imports
- Components

Release files¶

Release files are the files that are considered part of the official ontology release and are meant to be used by the community. A detailed description of the release artefacts can be found here.

Imports¶

Imports are subsets of external ontologies that contain terms and axioms you would like to re-use in your ontology. These are considered "external", like dependencies in software development, and are not included in your "base" product, which is the release artefact that contains only those axioms that you personally maintain.

These are the current imports in UPHENO:

| Import | URL | Type |
|---|---|---|
| go | https://raw.githubusercontent.com/obophenotype/pro_obo_slim/master/pr_slim.owl | None |
| nbo | http://purl.obolibrary.org/obo/nbo.owl | None |
| uberon | http://purl.obolibrary.org/obo/uberon.owl | None |
| cl | http://purl.obolibrary.org/obo/cl.owl | None |
| pato | http://purl.obolibrary.org/obo/pato.owl | None |
| mpath | http://purl.obolibrary.org/obo/mpath.owl | None |
| ro | http://purl.obolibrary.org/obo/ro.owl | None |
| omo | http://purl.obolibrary.org/obo/omo.owl | None |
| chebi | https://raw.githubusercontent.com/obophenotype/chebi_obo_slim/main/chebi_slim.owl | None |
| oba | http://purl.obolibrary.org/obo/oba.owl | None |
| ncbitaxon | http://purl.obolibrary.org/obo/ncbitaxon/subsets/taxslim.owl | None |
| pr | https://raw.githubusercontent.com/obophenotype/pro_obo_slim/master/pr_slim.owl | None |
| bspo | http://purl.obolibrary.org/obo/bspo.owl | None |
| ncit | http://purl.obolibrary.org/obo/ncit.owl | None |
| fbbt | http://purl.obolibrary.org/obo/fbbt.owl | None |
| fbdv | http://purl.obolibrary.org/obo/fbdv.owl | None |
| hsapdv | http://purl.obolibrary.org/obo/hsapdv.owl | None |
| wbls | http://purl.obolibrary.org/obo/wbls.owl | None |
| wbbt | http://purl.obolibrary.org/obo/wbbt.owl | None |
| plana | http://purl.obolibrary.org/obo/plana.owl | None |
| zfa | http://purl.obolibrary.org/obo/zfa.owl | None |
| xao | http://purl.obolibrary.org/obo/xao.owl | None |
| hsapdv-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-hsapdv.owl | custom |
| zfa-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-zfa.owl | custom |
| zfs-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-zfs.owl | custom |
| xao-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-xao.owl | custom |
| wbbt-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-wbbt.owl | custom |
| wbls-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-wbls.owl | custom |
| fbbt-uberon | http://purl.obolibrary.org/obo/uberon/bridge/uberon-bridge-to-fbbt.owl | custom |
| xao-cl | http://purl.obolibrary.org/obo/uberon/bridge/cl-bridge-to-xao.owl | custom |
| wbbt-cl | http://purl.obolibrary.org/obo/uberon/bridge/cl-bridge-to-wbbt.owl | custom |
| fbbt-cl | http://purl.obolibrary.org/obo/uberon/bridge/cl-bridge-to-fbbt.owl | custom |

Components¶

Components, in contrast to imports, are considered full members of the ontology. This means that any axiom in a component is also included in the ontology base, which means it is considered native to the ontology. While this sounds complicated, consider this: conceptually, no component should be part of more than one ontology. If that seems to be the case, we are most likely talking about an import. Components are often not needed for ontologies, but there are some use cases:

- There is an automated process that generates and re-generates a part of the ontology.
- A part of the ontology is managed in ROBOT templates.
- The expressivity of the component is higher than the format of the edit file. For example, people still choose to manage their ontology in OBO format (they should not), missing out on a lot of OWL features. They may choose to manage logic that is beyond OBO in a specific OWL component.

These are the components in UPHENO:

| Filename | URL |
|---|---|
| phenotypes_manual.owl | None |
| upheno-mappings.owl | None |
| cross-species-mappings.owl | None |


Setting up your Docker environment for ODK use¶

One of the most frequent problems when running the ODK for the first time is failure because of a lack of memory. This can look like a Java OutOfMemory exception, but more often than not it will appear as something like an Error 137. There are two places where you need to consider setting your memory:

- Your `src/ontology/run.sh` (or `run.bat`) file. You can set the memory in there by adding `robot_java_args: '-Xmx8G'` to your `src/ontology/upheno-odk.yaml` file, see for example here.
- Your Docker memory. It should be about 10-20% more than your `robot_java_args` variable. You can manage your memory settings by right-clicking on the Docker whale in your system bar-->Preferences-->Resources-->Advanced, see picture below.
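As a worked example of the 10-20% rule, the following sketch turns a `robot_java_args` value into a suggested Docker memory range. It is an illustration only: the function name `docker_memory_range_mb` is hypothetical, and it assumes the value contains a single `-Xmx` setting given in whole gigabytes (`G`) or megabytes (`M`).

```python
import re
from typing import Tuple


def docker_memory_range_mb(robot_java_args: str) -> Tuple[int, int]:
    """Return (low, high) Docker memory in MB: 10% and 20% above the Java heap."""
    match = re.search(r"-Xmx(\d+)([GgMm])", robot_java_args)
    if match is None:
        raise ValueError(f"no -Xmx setting found in {robot_java_args!r}")
    amount, unit = int(match.group(1)), match.group(2).lower()
    heap_mb = amount * 1024 if unit == "g" else amount
    # Integer arithmetic, rounded up, so the suggestion never falls below the margin.
    low = (heap_mb * 110 + 99) // 100
    high = (heap_mb * 120 + 99) // 100
    return low, high
```

For `-Xmx8G` this gives 9012 to 9831 MB, so a Docker memory setting of roughly 9 to 10 GB.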


Update Imports Workflow¶

This page discusses how to update the contents of your imports, like adding or removing terms. If you are looking to customise imports, like changing the module type, see here.

Importing a new term¶

Note: some ontologies now use a merged-import system to manage dynamic imports; for these, please follow the instructions in the section titled "Using the Base Module approach".

Importing a new term is split into two sub-phases:

- Declaring the terms to be imported
- Refreshing imports dynamically

Declaring terms to be imported¶

There are three ways to declare terms that are to be imported from an external ontology. Choose the appropriate one for your particular scenario (all three can be used in parallel if need be):

- Protégé-based declaration
- Using term files
- Using the custom import template

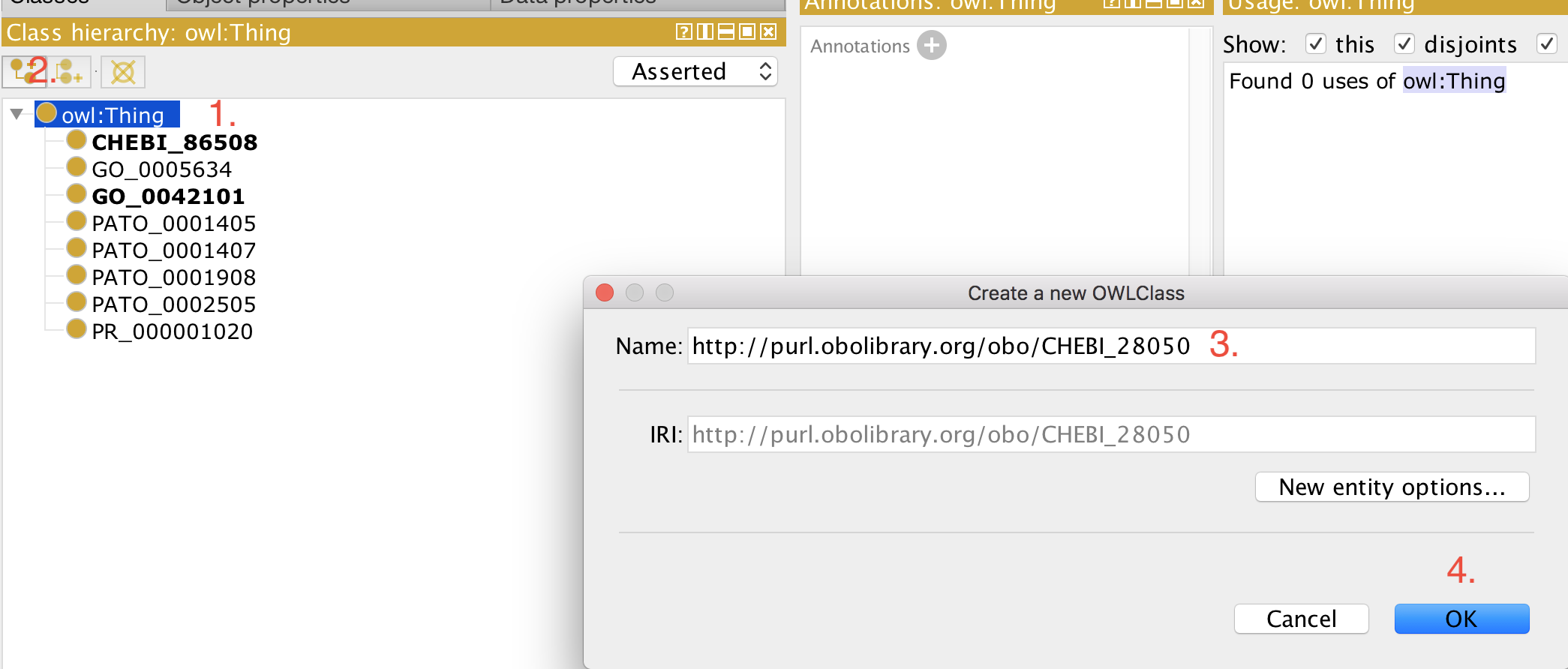

Protégé-based declaration¶

This workflow is to be avoided, but may be appropriate if the editor does not have access to the ODK docker container. This approach also applies to ontologies that use the base module import approach.

- Open your ontology (edit file) in Protégé (5.5+).
- Select 'owl:Thing'.
- Add a new class as usual.
- Paste the full IRI in the 'Name:' field, for example, http://purl.obolibrary.org/obo/CHEBI_50906.
- Click 'OK'.

Now you can use this term, for example, to construct logical definitions. The next time the imports are refreshed (see how to refresh here), the metadata (labels, definitions, etc.) for this term is imported from the respective external source ontology and becomes visible in your ontology.

Using term files¶

Every import has, by default, a term file associated with it, which can be found in the imports directory. For example, if you have a GO import in `src/ontology/imports/go_import.owl`, you will also have an associated term file `src/ontology/imports/go_terms.txt`. You can add terms in there simply as a list, one term per line.

Now you can run the refresh imports workflow and the terms you listed will be imported.
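Term files tend to accumulate duplicates when several editors append to them. The following sketch shows one way to add terms without repeating entries; it is not an ODK feature, the function name `merge_terms` is hypothetical, and it assumes one term identifier per line. The GO identifiers in the usage note are purely illustrative.

```python
from typing import Iterable, List


def merge_terms(existing_lines: Iterable[str], new_terms: Iterable[str]) -> List[str]:
    """Return the existing terms followed by the new ones, without blanks or repeats."""
    merged: List[str] = []
    seen = set()
    for raw in list(existing_lines) + list(new_terms):
        term = raw.strip()
        if term and term not in seen:
            seen.add(term)
            merged.append(term)
    return merged
```

For instance, merging `["GO:0008150"]` with `["GO:0008150", "GO:0003674"]` yields `["GO:0008150", "GO:0003674"]`; writing the result back with one term per line keeps the file in the shape the refresh workflow expects.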

Using the custom import template¶

This workflow is appropriate if:

- You prefer to manage all your imported terms in a single file (rather than multiple files like in the "Using term files" workflow above).
- You wish to augment your imported ontologies with additional information. This requires a cautionary discussion.

To enable this workflow, you add `use_custom_import_module: TRUE` to your ODK config file (`src/ontology/upheno-odk.yaml`), and update the repository.

Now you can manage your imported terms directly in the custom external terms template, which is located at `src/templates/external_import.tsv`. Note that this file is a ROBOT template, and can, in principle, be extended to include any axioms you like. Before extending the template, however, read the following carefully.

The main purpose of the custom import template is to enable the management of all terms to be imported in a centralised place. To enable that, you do not have to do anything other than maintaining the template. So if you, say, currently import APOLLO_SV:00000480, and you wish to import APOLLO_SV:00000532, you simply add a row for APOLLO_SV:00000532 to the template.

When the imports are refreshed (see the imports refresh workflow), the term(s) will simply be imported from the configured ontologies.

Now, if you wish to extend the Makefile (which is beyond these instructions) and add, say, synonyms to the imported terms, you can do that, but you need to (a) preserve the ID and ENTITY columns and (b) ensure that the ROBOT template is otherwise valid, see here.

WARNING. Note that doing this is a widespread antipattern (see related issue). You should not change the axioms of terms that do not belong in your ontology unless necessary: such changes should always be pushed into the ontology where they belong. However, since people are doing it, whether the OBO Foundry likes it or not, at least using the custom imports module as described here localises the changes to a single simple template and ensures that none of the annotations added this way are merged into the base file.

Refresh imports¶

If you want to refresh the import yourself (this may be necessary to pass the Travis tests), and you have the ODK installed, you can do the following (using go as an example):

First, navigate in your terminal to the ontology directory (underneath `src` in your repository's root directory).

Then, regenerate the import (the `imports/go_import.owl` goal), which will now include any new terms you have added. Note: You must have Docker installed.

Since ODK 1.2.27, it is also possible to simply run `sh run.sh make refresh-go`, which is the same as the above.

Note that in case you changed the defaults, you need to add `IMP=true` and/or `MIR=true` to the command.

If you wish to skip refreshing the mirror, i.e. skip downloading the latest version of the source ontology for your import (e.g. go.owl for your go import), you can set `MIR=false` instead, or run `sh run.sh make no-mirror-refresh-go`, which does the same thing but is easier to remember.

Using the Base Module approach¶

Since ODK 1.2.31, we support an entirely new approach to generating modules: using base files. The idea is to only import axioms from ontologies that actually belong to them. A base file is a subset of the ontology that only contains those axioms that nominally belong there. In other words, the base file does not contain any axioms that belong to another ontology. An example would be this:

Imagine this being the full Uberon ontology:

Axiom 1: BFO:123 SubClassOf BFO:124

Axiom 2: UBERON:123 SubClassOf BFO:123

Axiom 3: UBERON:124 SubClassOf UBERON:123

The base file is the set of all axioms that are about UBERON terms, i.e. Axiom 2 and Axiom 3. Axiom 1, which only involves BFO terms, gets removed.
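The Uberon example can be reproduced in a few lines. This is a simplified sketch, not how ROBOT computes a base file: axioms are modelled as plain (subject, predicate, object) tuples, the function name `base_axioms` is hypothetical, and "about UBERON terms" is approximated as "the subject has the UBERON prefix".

```python
from typing import List, Tuple

Axiom = Tuple[str, str, str]  # (subject, predicate, object) as CURIE strings


def base_axioms(axioms: List[Axiom], prefix: str) -> List[Axiom]:
    """Keep only the axioms whose subject belongs to the given ontology prefix."""
    return [axiom for axiom in axioms if axiom[0].startswith(prefix + ":")]


full_uberon: List[Axiom] = [
    ("BFO:123", "SubClassOf", "BFO:124"),
    ("UBERON:123", "SubClassOf", "BFO:123"),
    ("UBERON:124", "SubClassOf", "UBERON:123"),
]
uberon_base = base_axioms(full_uberon, "UBERON")
```

Here `uberon_base` contains the two UBERON axioms, and the BFO-only axiom is left to the BFO base file, which is exactly why merging several base files does not duplicate axioms.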

The base file pipeline is a bit more complex than the normal pipelines, because of the logical interactions between the imported ontologies. This is solved by first merging all mirrors into one huge file and then extracting one mega module from it.

Example: Let's say we are importing terms from Uberon, GO and RO in our ontologies. When we use the base pipelines, we

1) first obtain the base files (usually by simply downloading them, but there is also an option now to create them with ROBOT),

2) merge all base files into one big pile,

3) then extract a single module `imports/merged_import.owl`.

The first implementation of this pipeline is PATO, see https://github.com/pato-ontology/pato/blob/master/src/ontology/pato-odk.yaml.

To check if your ontology uses this method, check `src/ontology/upheno-odk.yaml` to see if `use_base_merging: TRUE` is declared under `import_group`.

If your ontology uses the Base Module approach, please use the following steps:

First, add the term to be imported to the term file associated with it (see the "Using term files" section above if this is not clear to you).

Next, navigate in your terminal to the ontology directory (underneath `src` in your repository's root directory).

Then refresh the imports by running `sh run.sh make refresh-merged`.

Note: if your mirrors are already up to date, you can run `sh run.sh make no-mirror-refresh-merged` instead.

This requires quite a bit of memory on your local machine, so if you encounter an error, it might be a lack of memory on your computer. A solution would be to create a ticket in an issue tracker requesting that the term be imported, and one of the local devs should pick this up and run the import for you.

Lastly, restart Protégé, and the term should be imported and ready to be used.


Adding components to an ODK repo¶

For details on what components are, please see the component section of the repository file structure document.

To add custom components to an ODK repo, please follow these steps:

1) Locate your ODK yaml file (`src/ontology/upheno-odk.yaml`) and open it with your favourite text editor.

2) Check whether there is already a `components` section in the yaml file; if not, add it, including the name of your component.

3) Add the component to your catalog file (`src/ontology/catalog-v001.xml`):

    <uri name="http://purl.obolibrary.org/obo/upheno/components/your-component-name.owl" uri="components/your-component-name.owl"/>

4) Add the component to the edit file (`src/ontology/upheno-edit.obo`) as an import of http://purl.obolibrary.org/obo/upheno/components/your-component-name.owl. For .obo formats, this is an `import:` line in the header; for .owl formats (functional syntax), it is an `Import(<...>)` declaration.

5) Refresh your repo by running `sh run.sh make update_repo`; this should create a new file in `src/ontology/components`.

6) In your custom Makefile (`src/ontology/upheno.Makefile`), add a goal for your custom component. In this example, the goal is a ROBOT template.

    $(COMPONENTSDIR)/your-component-name.owl: $(SRC) ../templates/your-component-template.tsv
        $(ROBOT) template --template ../templates/your-component-template.tsv \
            annotate --ontology-iri $(ONTBASE)/$@ --output $(COMPONENTSDIR)/your-component-name.owl

(If using a ROBOT template, do not forget to add your template tsv to `src/templates/`.)

7) Make the file by running `sh run.sh make components/your-component-name.owl`.


The Unified Phenotype Ontology (uPheno) meeting series¶

The uPheno editors call is held every second Thursday (bi-weekly) on Zoom, provided by members of the Monarch Initiative and co-organised by members of the Alliance of Genome Resources. If you wish to join the meeting, you can open an issue on https://github.com/obophenotype/upheno/issues with the request to be added, or send an email to phenotype-ontologies-editors@googlegroups.com.

The meeting coordinator (MC) is the person charged with organising the meeting. The current MC is Ray, @rays22.

Meeting preparation¶

- The MC prepares the agenda in advance: everyone on the call is very busy and our time is precious.
- Every agenda item has an associated ticket on GitHub, and a clear set of action items should be added in GitHub Tasklist syntax to the first comment on the issue.
- If there are issues for any subtasks (e.g. PATO or Uberon edits), the list should be edited to link these.
- Any items that do not have a subissue (but do involve changes to patterns) should be edited to link to the implementing PR.
- It does not matter who wrote the first issue comment; the uPheno team can simply add a tasklist underneath the original comment and refine it over time.
- Tag all issues which need discussion with "upheno call".
- It must be clear from the task list what the uPheno team should be doing during the call (discuss, decide, review). For example, one item on the task list may read: "uPheno team to decide on appropriate label for template".
- Conversely, no issue should be added to the agenda that does not have a clear set of action items associated with it that should be addressed during the call. These actions may include making and documenting modelling decisions.
- Go through up to 10 issues on the uPheno issue tracker before each meeting to determine how to progress on them, and add action items. Only if they need to be discussed, add the "upheno call" label.

Meeting¶

- Every meeting should start with a quick (max 5 min, ideally 3 min) overview of all the goals and how they progressed. The MC should mention all blockers and goals, even the ones we did not make any progress on, to keep the focus on the priorities:
  - uPheno releases
  - uPheno documentation
  - Pattern creation
  - Patternisation: The process of ensuring that phenotype ontologies are using uPheno conformant templates to define their phenotypes.
  - Harmonisation: The process of ensuring that phenotype patterns are applied consistently across ontologies.
- For new pattern discussions:
  - Every new pattern proposal should come with a new GitHub issue, appropriately tagged.
  - The issue text should detail the use cases for the pattern well, and these use cases should also be documented in the "description" part of the DOSDP YAML file. Use cases should include expected classifications and why we want them (and potentially classifications to avoid), e.g. axis-specific dimension traits should classify under more abstractly defined dimension traits, which in turn should classify under Morphology. Add some examples of contexts where grouping along these classifications is useful.
- Agenda items may include discussion and decisions about more general modelling issues that affect more than one pattern, but these should also be documented as tickets as described above.

After the meeting¶

- After every meeting, update all issues discussed on GitHub and, in particular, clarify the remaining action items.
- Ensure that the highest priority issues are discussed first.


The Outreach Programme of the Unified Phenotype Ontology (uPheno) development team¶

Outreach calls¶

The uPheno team organises an outreach call every four weeks to listen to external stakeholders describing their needs for cross-species phenotype integration.

Schedule¶

| Date | Lesson | Notes | Recordings |
|---|---|---|---|
| 2024/04/05 | TBD | TBD | |
| 2024/03/08 | Computational identification of disease models through cross-species phenotype comparison | Diego A. Pava, Pilar Cacheiro, Damian Smedley (IMPC) | Recording |
| 2024/02/09 | Use cases for uPheno in the Alliance of Genome Resources and MGI | Sue Bello (Alliance of Genome Resources, MGI) | Recording |
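The four-week cadence can be checked against the dates in the schedule with a few lines of Python. This is only an illustrative sketch: the function name `call_dates` is hypothetical, and it assumes the cadence is exactly 28 days starting from the 2024/02/09 call.

```python
from datetime import date, timedelta


def call_dates(first_call: date, count: int, interval_weeks: int = 4) -> list[date]:
    """Return `count` call dates spaced `interval_weeks` weeks apart."""
    step = timedelta(weeks=interval_weeks)
    return [first_call + i * step for i in range(count)]


# Start from the earliest call listed in the schedule table.
dates = call_dates(date(2024, 2, 9), 3)
for d in dates:
    print(d.strftime("%Y/%m/%d"))
```

Under that assumption, the three generated dates match the three rows of the table.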

Possible topics¶

- Cross-species inference in Variant and Gene Prioritisation algorithms (Exomiser)
- Cross-species comparison of phenotypic profiles (Monarch Initiative Knowledge Graph)
- Cross-species data in biomedical knowledge graphs (Kids First)


Drosophila Phenotype Ontology


* The Drosophila phenotype ontology, Osumi-Sutherland et al., J Biomed Sem.

The DPO is formally a subset of FBcv, made available from http://purl.obolibrary.org/obo/fbcv/dpo.owl

Phenotypes in FlyBase may either be assigned to FBcv (dpo) classes, or they may have a phenotype_manifest_in to FBbt (anatomy).

For integration we generate the following ontologies:

* http://purl.obolibrary.org/obo/upheno/imports/fbbt_phenotype.owl
* http://purl.obolibrary.org/obo/upheno/imports/uberon_phenotype.owl
* http://purl.obolibrary.org/obo/upheno/imports/go_phenotype.owl
* http://purl.obolibrary.org/obo/upheno/imports/cl_phenotype.owl

(see Makefile)

This includes a phenotype class for every anatomy class; the IRI is suffixed with "PHENOTYPE". Using these ontologies, Uberon and CL phenotypes make the groupings.
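As a rough illustration of the naming rule, the sketch below derives a phenotype class IRI from an anatomy class IRI by appending the suffix. The function name `phenotype_iri` and the example IRI are assumptions made for illustration, and the exact form of the suffix (for instance, whether a separator is used) should be checked against the Makefile.

```python
def phenotype_iri(anatomy_iri: str, suffix: str = "PHENOTYPE") -> str:
    """Derive a phenotype class IRI by suffixing an anatomy class IRI.

    Assumption: the suffix is appended directly, with no separator.
    """
    return anatomy_iri + suffix


# Illustrative anatomy class IRI (OBO PURL style); not taken from the generated files.
anatomy = "http://purl.obolibrary.org/obo/UBERON_0000970"
print(phenotype_iri(anatomy))
```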

We include

* http://purl.obolibrary.org/obo/upheno/dpo/dpo-importer.owl

which imports dpo plus auto-generated fbbt phenotypes.

The dpo-importer is included in the [MetazoanImporter].

Additional Notes¶

We create a local copy of fbbt that has "Drosophila " prefixed to all labels. This gives us a hierarchy:

* eye phenotype (defined using Uberon)
* compound eye phenotype (defined using Uberon)
* drosophila eye phenotype (defined using FBbt)
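The label prefixing described above can be sketched as a simple mapping over term labels. This is a minimal illustration only: the term identifiers below are made-up placeholders, and the real pipeline operates on the fbbt ontology file rather than on a Python dictionary.

```python
def prefix_labels(labels: dict[str, str], prefix: str = "Drosophila ") -> dict[str, str]:
    """Return a copy of `labels` with `prefix` prepended to every label."""
    return {term_id: prefix + label for term_id, label in labels.items()}


# Hypothetical identifiers standing in for FBbt anatomy terms.
fbbt_labels = {
    "FBbt:EXAMPLE_1": "eye",
    "FBbt:EXAMPLE_2": "wing",
}
prefixed = prefix_labels(fbbt_labels)
print(prefixed)
```

Prefixing keeps the species-specific FBbt classes distinguishable from the Uberon classes with the same label once both appear in one merged hierarchy.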

TODO¶

* http://code.google.com/p/cell-ontology/issues/detail?id=115 - ensure all CL to FBbt equiv axioms are present (we have good coverage for Uberon)


Fission Yeast Phenotype Ontology

* project page - https://sourceforge.net/apps/trac/pombase/wiki/FissionYeastPhenotypeOntology
* FYPO: the fission yeast phenotype ontology, Harris et al., Bioinformatics

Note that the OWL axioms for FYPO are managed directly in the FYPO project repo; we do not duplicate them here.


Human Phenotype Ontology

Links¶

* http://www.human-phenotype-ontology.org/
* Köhler S, Doelken SC, Mungall CJ, Bauer S, Firth HV, Bailleul-Forestier I, Black GC, Brown DL, Brudno M, Campbell J, FitzPatrick DR, Eppig JT, Jackson AP, Freson K, Girdea M, Helbig I, Hurst JA, Jähn J, Jackson LG, Kelly AM, Ledbetter DH, Mansour S, Martin CL, Moss C, Mumford A, Ouwehand WH, Park SM, Riggs ER, Scott RH, Sisodiya S, Van Vooren S, Wapner RJ, Wilkie AO, Wright CF, Vulto-van Silfhout AT, de Leeuw N, de Vries BB, Washingthon NL, Smith CL, Westerfield M, Schofield P, Ruef BJ, Gkoutos GV, Haendel M, Smedley D, Lewis SE, Robinson PN. The Human Phenotype Ontology project: linking molecular biology and disease through phenotype data. Nucleic Acids Res. 2014 Jan;42(Database issue):D966-74 [pubmed]
* HPO browser
* HP in OntoBee
* HP in OLSVis

OWL Axiomatization¶

The OWL axioms for HP are in the src/ontology/hp directory on this site.

The structure is analogous to that of the [MP].

Status¶

The OWL axiomatization is updated frequently to stay in sync with changes in the MP.

Editing the axioms¶

The edit file is currently:

* http://purl.obolibrary.org/obo/hp/hp-equivalence-axioms-subq-ubr.owl

Edit this in Protege.


Mouse Phenotype Ontology

Links¶

* The Mammalian Phenotype Ontology: enabling robust annotation and comparative analysis, Smith CL, Eppig JT
* MP browser at MGI
* MP in OntoBee
* MP in OLSVis

OWL Axiomatization¶

The OWL axioms for MP are in the src/ontology/mp directory on this site.

* http://purl.obolibrary.org/obo/mp.owl - direct conversion of MGI-supplied obo file
* http://purl.obolibrary.org/obo/mp/mp-importer.owl - imports additional axioms, including the following:
    * http://purl.obolibrary.org/obo/mp.owl
    * http://purl.obolibrary.org/obo/upheno/imports/chebi_import.owl
    * http://purl.obolibrary.org/obo/upheno/imports/uberon_import.owl
    * http://purl.obolibrary.org/obo/upheno/imports/pato_import.owl
    * http://purl.obolibrary.org/obo/upheno/imports/go_import.owl
    * http://purl.obolibrary.org/obo/upheno/imports/mpath_import.owl
    * http://purl.obolibrary.org/obo/mp/mp-equivalence-axioms-subq-ubr.owl
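To see which files an importer ontology pulls in, one option is to read its `owl:imports` declarations. The sketch below does this with Python's standard library, assuming the file is serialised as RDF/XML. The embedded `SAMPLE` is a hand-written fragment for illustration, not the actual content of mp-importer.owl, and `list_imports` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

OWL = "http://www.w3.org/2002/07/owl#"
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"

# Hand-written RDF/XML fragment (assumption: not the real importer file).
SAMPLE = """<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:owl="http://www.w3.org/2002/07/owl#">
  <owl:Ontology rdf:about="http://purl.obolibrary.org/obo/mp/mp-importer.owl">
    <owl:imports rdf:resource="http://purl.obolibrary.org/obo/mp.owl"/>
    <owl:imports rdf:resource="http://purl.obolibrary.org/obo/upheno/imports/uberon_import.owl"/>
  </owl:Ontology>
</rdf:RDF>
"""


def list_imports(rdf_xml: str) -> list[str]:
    """Return the IRIs named in owl:imports elements, in document order."""
    root = ET.fromstring(rdf_xml)
    return [el.attrib[f"{{{RDF}}}resource"] for el in root.iter(f"{{{OWL}}}imports")]


imports = list_imports(SAMPLE)
for iri in imports:
    print(iri)
```

For anything beyond a quick inspection, a dedicated OWL library or Protege gives a more reliable view, since ontologies may be serialised in formats other than RDF/XML.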

Status¶

The OWL axiomatization is updated frequently to stay in sync with changes in the MP.

Editing the axioms¶

The edit file is currently:

* http://purl.obolibrary.org/obo/mp/mp-equivalence-axioms-edit.owl

Edit this in Protege.

The file mp-equivalence-axioms.obo is DEPRECATED!

TermGenie¶

* http://mp.termgenie.org/
* http://mp.termgenie.org/TermGenieFreeForm


Worm Phenotype Ontology

Links¶

* Schindelman, Gary, et al. Worm Phenotype Ontology: integrating phenotype data within and beyond the C. elegans community. BMC bioinformatics 12.1 (2011): 32.
* WBPhenotype in OntoBee
* WBPhenotype in OLSVis

OWL Axiomatization¶

The OWL axioms for WBPhenotype are in the src/ontology/wbphenotype directory on this site.

* http://purl.obolibrary.org/obo/wbphenotype.owl - direct conversion of WormBase-supplied obo file
* http://purl.obolibrary.org/obo/wbphenotype/wbphenotype-importer.owl - imports additional axioms.

The structure roughly follows that of the [MP]. The worm anatomy is used.

Editing the axioms¶

Currently the source is wbphenotype/wbphenotype-equivalence-axioms.obo; the OWL is generated from it. We are considering switching this around, so that the OWL is edited using Protege.
