Our understanding of modern neural networks lags behind their practical successes. This growing gap poses a challenge to the pace of progress in machine learning because fewer pillars of knowledge are available to designers of models and algorithms (Hanie Sedghi). Inspired by the ICML 2019 workshop Identifying and Understanding Deep Learning Phenomena, I collect papers and related resources that present interesting empirical studies and insights into the nature of deep learning.

- Empirical Study

- Neural Collapse

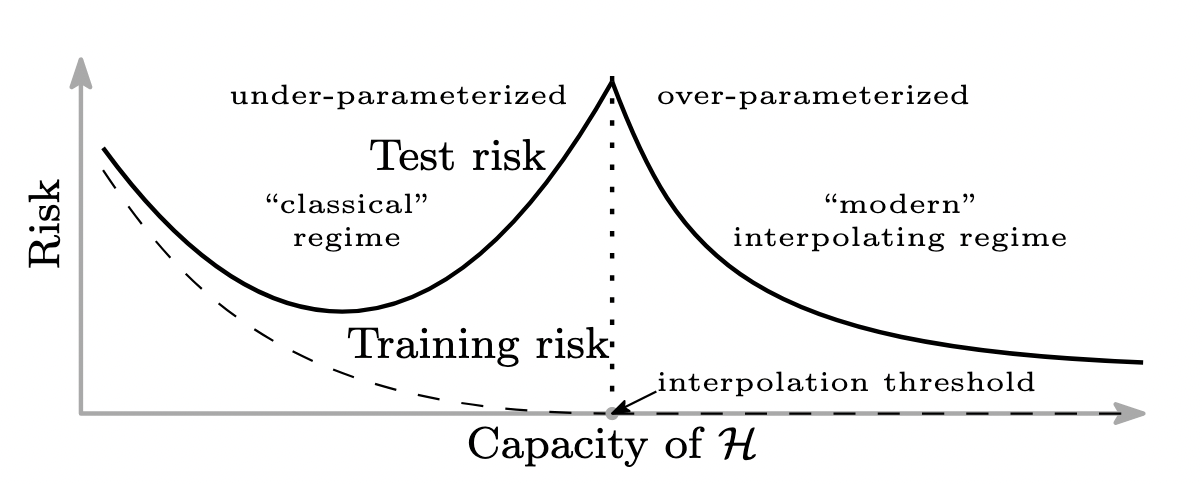

- Deep Double Descent

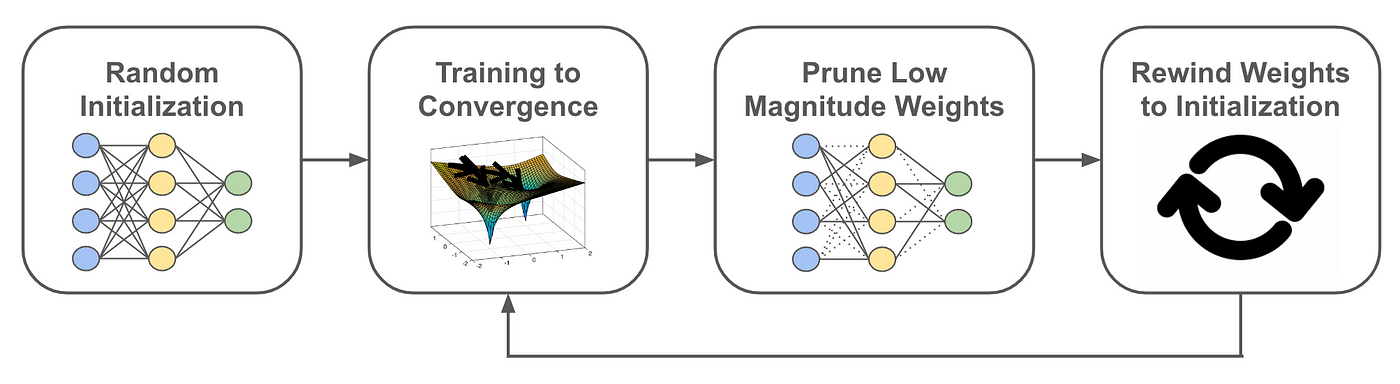

- Lottery Ticket Hypothesis

- Emergence and Phase Transitions

- Interactions with Neuroscience

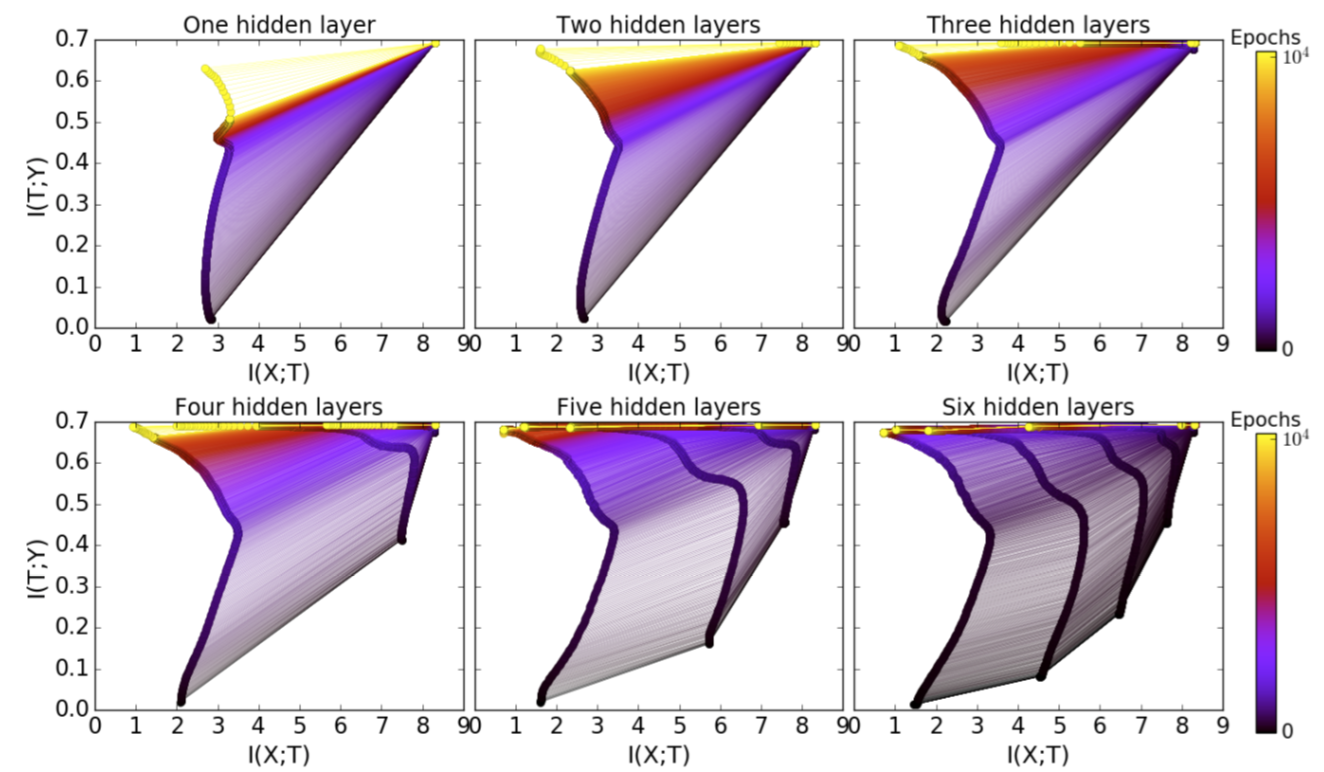

- Information Bottleneck

- Neural Tangent Kernel

- Other Papers

- Resources

-

Rethinking Conventional Wisdom in Machine Learning: From Generalization to Scaling. [paper]

- Lechao Xiao.

- Key Word: Regularization; Generalization; Neural Scaling Law.

-

Digest

This paper explores the shift in machine learning from focusing on minimizing generalization error to reducing approximation error, particularly in the context of large language models (LLMs) and scaling laws. It questions whether traditional regularization principles, like L2 regularization and small batch sizes, remain relevant in this new paradigm. The authors introduce the concept of “scaling law crossover,” where techniques effective at smaller scales may fail as model size increases. The paper raises two key questions: what new principles should guide model scaling, and how can models be effectively compared at large scales where only single experiments are feasible?

-

AI models collapse when trained on recursively generated data. [paper]

- Ilia Shumailov, Zakhar Shumaylov, Yiren Zhao, Nicolas Papernot, Ross Anderson, Yarin Gal. Nature

- Key Word: Model Collapse; Generative Model.

-

Digest

The paper demonstrates that AI models trained on recursively generated data suffer from a collapse in performance. This finding highlights the critical need for diverse and high-quality data sources to maintain the robustness and reliability of AI systems.
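The recursive-training setup can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not the paper's experiments: the "model" is a one-dimensional Gaussian, and each generation is fitted to a finite sample drawn from the previous generation's fit. The function name and all parameters are hypothetical.

```python
import random
import statistics


def recursive_gaussian_fit(generations=20, n_samples=50, seed=0):
    """Refit a Gaussian to samples drawn from the previous generation's fit."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the real data distribution
    history = [(mu, sigma)]
    for _ in range(generations):
        # Each generation only ever sees synthetic data from its predecessor.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(samples)
        sigma = statistics.stdev(samples)
        history.append((mu, sigma))
    return history


history = recursive_gaussian_fit()
for gen, (mu, sigma) in enumerate(history):
    if gen % 5 == 0:
        print(f"generation {gen:2d}: mean={mu:+.3f}, std={sigma:.3f}")
```

Because every refit adds sampling error that is never corrected by fresh real data, the fitted parameters perform a random walk away from the original distribution. Smaller `n_samples` makes the drift more visible.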

-

Not All Language Model Features Are Linear. [paper]

- Joshua Engels, Isaac Liao, Eric J. Michaud, Wes Gurnee, Max Tegmark.

- Key Word: Large Language Model; Linear Representation Hypothesis.

-

Digest

This paper challenges the linear representation hypothesis in language models by proposing that some representations are inherently multi-dimensional. Using sparse autoencoders, the authors identify interpretable multi-dimensional features in GPT-2 and Mistral 7B, such as circular features for days and months, and demonstrate their computational significance through intervention experiments.
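To make the idea of a circular feature concrete, the sketch below places the days of the week on a unit circle and performs "day plus k days" as a rotation. It is a hand-built illustration of what such a two-dimensional representation could look like, not the sparse-autoencoder procedure used in the paper; all function names are hypothetical.

```python
import math

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def embed(day_index, period=7):
    """Map a day index to a point on the unit circle."""
    angle = 2 * math.pi * day_index / period
    return (math.cos(angle), math.sin(angle))


def rotate(point, steps, period=7):
    """Advance a point by `steps` days using a 2-D rotation."""
    angle = 2 * math.pi * steps / period
    x, y = point
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))


def decode(point, period=7):
    """Read the nearest day index back off the circle."""
    angle = math.atan2(point[1], point[0])
    return round(angle / (2 * math.pi) * period) % period


saturday = embed(DAYS.index("Sat"))
print(DAYS[decode(rotate(saturday, 4))])  # four days after Saturday
```

A single linear direction cannot express this wrap-around structure, which is why such a feature needs at least two dimensions.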

-

LoRA Learns Less and Forgets Less. [paper]

- Dan Biderman, Jose Gonzalez Ortiz, Jacob Portes, Mansheej Paul, Philip Greengard, Connor Jennings, Daniel King, Sam Havens, Vitaliy Chiley, Jonathan Frankle, Cody Blakeney, John P. Cunningham.

- Key Word: LoRA; Fine-Tuning; Learning-Forgetting Trade-off.

-

Digest

The study compares the performance of Low-Rank Adaptation (LoRA), a parameter-efficient finetuning method for large language models, with full finetuning in programming and mathematics domains. While LoRA generally underperforms compared to full finetuning, it better maintains the base model's performance on tasks outside the target domain, providing stronger regularization than common techniques like weight decay and dropout. Full finetuning learns perturbations with a rank that is 10-100X greater than typical LoRA configurations, which may explain some performance gaps. The study concludes with best practices for finetuning with LoRA.
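The rank constraint mentioned above follows directly from how a LoRA update is parameterized. The NumPy sketch below assumes the usual formulation, in which a frozen weight `W` is augmented with a scaled product `B @ A` of two thin matrices; the sizes, the scaling factor `alpha`, and the random "training" are placeholders, not values from the study.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 64, 4, 8.0   # hypothetical sizes and scaling

W = rng.normal(size=(d_out, d_in))        # frozen pretrained weight
A = rng.normal(size=(r, d_in)) * 0.01     # trainable down-projection
B = np.zeros((d_out, r))                  # trainable up-projection, zero at init


def lora_forward(x, W, A, B, alpha):
    """Apply the frozen weight plus the scaled low-rank update B @ A."""
    scale = alpha / A.shape[0]
    return x @ W.T + scale * (x @ A.T) @ B.T


x = rng.normal(size=(2, d_in))
# With B = 0 the adapted layer reproduces the base layer exactly.
assert np.allclose(lora_forward(x, W, A, B, alpha), x @ W.T)

# Stand-in for training: any values of A and B give an update of rank <= r.
B = rng.normal(size=(d_out, r))
delta_W = (alpha / r) * B @ A
print("rank of update:", np.linalg.matrix_rank(delta_W), "of a possible", min(d_out, d_in))
print("trainable parameters:", A.size + B.size, "vs full:", W.size)
```

Full finetuning can move `W` along any direction, so its perturbation is not bound by `r`; this is the gap in rank that the digest refers to.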

-

The Platonic Representation Hypothesis. [paper]

- Minyoung Huh, Brian Cheung, Tongzhou Wang, Phillip Isola.

- Key Word: Foundation Models; Representational Convergence.

-

Digest

The paper argues that representations in AI models, especially deep networks, are converging. This convergence is observed across time, multiple domains, and different data modalities. As models get larger, they measure distance between data points in increasingly similar ways. The authors hypothesize that this convergence is moving towards a shared statistical model of reality, which they term the "platonic representation." They discuss potential selective pressures towards this representation and explore the implications, limitations, and counterexamples to their analysis.

-

The Unreasonable Ineffectiveness of the Deeper Layers. [paper]

- Andrey Gromov, Kushal Tirumala, Hassan Shapourian, Paolo Glorioso, Daniel A. Roberts.

- Key Word: Large Language Model; Pruning.

-

Digest

This study explores a straightforward layer-pruning approach on widely-used pretrained large language models (LLMs), showing that removing up to half of the layers results in only minimal performance decline on various question-answering benchmarks. The method involves selecting the best layers to prune based on layer similarity, followed by minimal finetuning to mitigate any loss in performance. Specifically, it employs parameter-efficient finetuning techniques like quantization and Low Rank Adapters (QLoRA), enabling experiments on a single A100 GPU. The findings indicate that layer pruning could both reduce finetuning computational demands and enhance inference speed and memory efficiency. Moreover, the resilience of LLMs to layer removal raises questions about the effectiveness of current pretraining approaches or highlights the significant knowledge-storing capacity of the models' shallower layers.
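A similarity-based choice of layers to prune could be sketched as follows. The sketch assumes that "layer similarity" is measured as the angular distance between the representation entering layer `l` and the one entering layer `l + n`, averaged over samples; the digest does not spell this out, so treat the metric, the function names, and the synthetic activations as assumptions.

```python
import numpy as np


def angular_distance(a, b):
    """Angular distance in [0, 1] between matching rows of a and b."""
    cos = np.sum(a * b, axis=-1) / (
        np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1)
    )
    return np.arccos(np.clip(cos, -1.0, 1.0)) / np.pi


def best_block_to_prune(hidden_states, n):
    """hidden_states: (num_layers + 1, num_samples, dim); entry l is the input to layer l.

    Returns the start index l whose n-layer block changes the representation
    least, plus the mean distance for every candidate l.
    """
    num_states = hidden_states.shape[0]
    scores = [
        float(angular_distance(hidden_states[l], hidden_states[l + n]).mean())
        for l in range(num_states - n)
    ]
    return int(np.argmin(scores)), scores


# Synthetic stand-in for real activations: 8 layers, 32 samples, width 16.
rng = np.random.default_rng(0)
states = rng.normal(size=(9, 32, 16))
states[5] = states[4]  # pretend layers 4 and 5 leave the
states[6] = states[4]  # representation unchanged
start, scores = best_block_to_prune(states, n=2)
print("candidate block to remove: layers", start, "to", start + 1)
```

In practice the hidden states would come from running the pretrained model on a small text sample, and the pruned model would then be lightly finetuned as the digest describes.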

-

Unfamiliar Finetuning Examples Control How Language Models Hallucinate. [paper]

- Katie Kang, Eric Wallace, Claire Tomlin, Aviral Kumar, Sergey Levine.

- Key Word: Large Language Model; Hallucination; Supervised Fine-Tuning.

-

Digest

This study investigates the propensity of large language models (LLMs) to produce plausible but factually incorrect responses, focusing on their behavior with unfamiliar concepts. The research identifies a pattern where LLMs resort to hedged predictions for unfamiliar inputs, influenced by the supervision of such examples during fine-tuning. By adjusting the supervision of these examples, it's possible to direct LLM responses towards acknowledging their uncertainty (e.g., by saying "I don't know").

-

When Scaling Meets LLM Finetuning: The Effect of Data, Model and Finetuning Method. [paper]

- Biao Zhang, Zhongtao Liu, Colin Cherry, Orhan Firat.

- Key Word: Neural Scaling Laws; Large Language Model; Fine-Tuning.

-

Digest

This study investigates how scaling factors—model size, pretraining data size, finetuning parameter size, and finetuning data size—affect finetuning performance of large language models (LLMs) across two methods: full-model tuning (FMT) and parameter efficient tuning (PET). Experiments on bilingual LLMs for translation and summarization tasks reveal that finetuning performance scales multiplicatively with data size and other factors, favoring model scaling over pretraining data scaling, with PET parameter scaling showing limited effectiveness. These insights suggest the choice of finetuning method is highly task- and data-dependent, offering guidance for optimizing LLM finetuning strategies.

-

Rethink Model Re-Basin and the Linear Mode Connectivity. [paper]

- Xingyu Qu, Samuel Horvath.

- Key Word: Linear Mode Connectivity; Model Merging; Re-Normalization; Pruning.

-

Digest

The paper discusses the "model re-basin regime," where most solutions found by stochastic gradient descent (SGD) in sufficiently wide models converge to similar states, impacting model averaging. It identifies limitations in current strategies due to a poor understanding of the mechanisms involved. The study critiques existing matching algorithms for their inadequacies and proposes that proper re-normalization can address these issues. By adopting a more analytical approach, the paper reveals how matching algorithms and re-normalization interact, offering clearer insights and improvements over previous work. This includes a connection between linear mode connectivity and pruning, leading to a new lightweight post-pruning method that enhances existing pruning techniques.

-

How Good is a Single Basin? [paper]

- Kai Lion, Lorenzo Noci, Thomas Hofmann, Gregor Bachmann.

- Key Word: Linear Mode Connectivity; Deep Ensembles.

-

Digest

This paper investigates the assumption that the multi-modal nature of neural loss landscapes is key to the success of deep ensembles. By creating "connected" ensembles that are confined to a single basin, the study finds that this limitation indeed reduces performance. However, it also discovers that distilling knowledge from multiple basins into these connected ensembles can offset the performance deficit, effectively creating multi-basin deep ensembles within a single basin. This suggests that while knowledge from outside a given basin exists within it, it is not readily accessible without learning from other basins.

-

Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction. [paper] [code]

- Pratyusha Sharma, Jordan T. Ash, Dipendra Misra.

- Key Word: Large Language Models; Reasoning.

-

Digest

Transformer-based Large Language Models (LLMs) have become a fixture in modern machine learning. Correspondingly, significant resources are allocated towards research that aims to further advance this technology, typically resulting in models of increasing size that are trained on increasing amounts of data. This work, however, demonstrates the surprising result that it is often possible to significantly improve the performance of LLMs by selectively removing higher-order components of their weight matrices. This simple intervention, which we call LAyer-SElective Rank reduction (LASER), can be done on a model after training has completed, and requires no additional parameters or data. We show extensive experiments demonstrating the generality of this finding across language models and datasets, and provide in-depth analyses offering insights into both when LASER is effective and the mechanism by which it operates.
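The core operation, replacing a weight matrix by a low-rank approximation that drops its smallest singular components, can be sketched with a truncated SVD. This is a minimal illustration on a random matrix: which layers to target and how much rank to keep are choices made in the paper and are not reproduced here, and `rank_reduce` and `keep_fraction` are hypothetical names.

```python
import numpy as np


def rank_reduce(W, keep_fraction):
    """Keep only the largest singular components of W."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    k = max(1, int(round(keep_fraction * len(S))))
    # Scale the first k left singular vectors by their singular values,
    # then project back with the first k right singular vectors.
    return (U[:, :k] * S[:k]) @ Vt[:k, :], k


rng = np.random.default_rng(0)
W = rng.normal(size=(32, 16))            # stand-in for one weight matrix
W_low, k = rank_reduce(W, keep_fraction=0.25)

S = np.linalg.svd(W, compute_uv=False)
print("kept rank:", k, "of", len(S))
print("relative change in W:", np.linalg.norm(W - W_low) / np.linalg.norm(W))
```

The edit needs no gradient steps or extra parameters, which matches the claim that it can be applied after training has finished.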

-

The Transient Nature of Emergent In-Context Learning in Transformers. [paper]

- Aaditya K. Singh, Stephanie C.Y. Chan, Ted Moskovitz, Erin Grant, Andrew M. Saxe, Felix Hill. NeurIPS 2023

- Key Word: In-Context Learning.

-

Digest

This paper shows that in-context learning (ICL) in transformers, where models exhibit abilities not explicitly trained for, is often transient rather than persistent during training. The authors find ICL emerges then disappears, giving way to in-weights learning (IWL). This occurs across model sizes and datasets, raising questions around stopping training early for ICL vs later for IWL. They suggest L2 regularization may lead to more persistent ICL, removing the need for early stopping based on ICL validation. The transience may be caused by competition between emerging ICL and IWL circuits in the model.

-

What do larger image classifiers memorise? [paper]

- Michal Lukasik, Vaishnavh Nagarajan, Ankit Singh Rawat, Aditya Krishna Menon, Sanjiv Kumar.

- Key Word: Large Model; Memorization.

-

Digest

This paper explores the relationship between memorization and generalization in modern neural networks. It discusses Feldman's metric for measuring memorization and applies it to ResNet models for image classification. The paper then investigates whether larger neural models memorize more and finds that memorization trajectories vary across different training examples and model sizes. Additionally, it notes that knowledge distillation, a model compression technique, tends to inhibit memorization while improving generalization, particularly on examples with increasing memorization trajectories.

-

Can Neural Network Memorization Be Localized? [paper]

- Pratyush Maini, Michael C. Mozer, Hanie Sedghi, Zachary C. Lipton, J. Zico Kolter, Chiyuan Zhang. ICML 2023

- Key Word: Atypical Example Memorization; Location of Memorization; Task Specific Neurons.

-

Digest

The paper demonstrates that memorization in deep overparametrized networks is not limited to individual layers but rather confined to a small set of neurons across various layers of the model. Through experimental evidence from gradient accounting, layer rewinding, and retraining, the study reveals that most layers are redundant for example memorization, and the contributing layers are typically not the final layers. Additionally, the authors propose a new form of dropout called example-tied dropout, which allows them to selectively direct memorization to a pre-defined set of neurons, effectively reducing memorization accuracy while also reducing the generalization gap.

-

No Wrong Turns: The Simple Geometry Of Neural Networks Optimization Paths. [paper]

- Charles Guille-Escuret, Hiroki Naganuma, Kilian Fatras, Ioannis Mitliagkas.

- Key Word: Restricted Secant Inequality; Error Bound; Loss Landscape Geometry.

-

Digest

The paper explores the geometric properties of optimization paths in neural networks and reveals that the quantities related to the restricted secant inequality and error bound exhibit consistent behavior during training, suggesting that optimization trajectories encounter no significant obstacles and maintain stable dynamics, leading to linear convergence and supporting commonly used learning rate schedules.

-

Sharpness-Aware Minimization Leads to Low-Rank Features. [paper]

- Maksym Andriushchenko, Dara Bahri, Hossein Mobahi, Nicolas Flammarion.

- Key Word: Sharpness-Aware Minimization; Low-Rank Features.

-

Digest

Sharpness-aware minimization (SAM) is a method that minimizes the sharpness of the training loss of a neural network. It improves generalization and reduces the feature rank at different layers of a neural network. This low-rank effect occurs for different architectures and objectives. A significant number of activations get pruned by SAM, contributing to rank reduction. This effect can also occur in deep networks.
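For readers unfamiliar with SAM, one update step can be sketched as follows: take the gradient, move a distance `rho` along it to a nearby "worst-case" point, and descend using the gradient evaluated there. The toy quadratic loss and the hyperparameters are assumptions chosen for illustration; the sketch shows only the update rule and does not reproduce the low-rank feature effect studied in the paper.

```python
import numpy as np

H = np.diag([1.0, 10.0])  # toy quadratic loss 0.5 * w^T H w


def loss(w):
    return 0.5 * w @ H @ w


def grad(w):
    return H @ w


def sam_step(w, grad_fn, lr=0.05, rho=0.05):
    """One SAM step: perturb along the gradient, then descend from there."""
    g = grad_fn(w)
    eps = rho * g / (np.linalg.norm(g) + 1e-12)  # ascent direction of length rho
    g_sam = grad_fn(w + eps)                     # gradient at the perturbed point
    return w - lr * g_sam


w0 = np.array([1.0, 1.0])
w = w0.copy()
for _ in range(50):
    w = sam_step(w, grad)
print("loss before:", loss(w0), "loss after:", loss(w))
```

Each step costs two gradient evaluations instead of one, which is the main overhead of SAM relative to plain gradient descent.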

-

A surprisingly simple technique to control the pretraining bias for better transfer: Expand or Narrow your representation. [paper]

- Florian Bordes, Samuel Lavoie, Randall Balestriero, Nicolas Ballas, Pascal Vincent.

- Key Word: Pretraining; Fine-Tuning; Information Bottleneck.

-

Digest

A commonly used trick in self-supervised learning (SSL), shown to make deep networks more robust to pretraining bias (the mismatch between the pretext task and the downstream tasks), is the addition of a small projector (usually a 2- or 3-layer multi-layer perceptron) on top of a backbone network during training. In contrast to previous work that studied the impact of the projector architecture, we here focus on a simpler, yet overlooked lever to control the information in the backbone representation. We show that merely changing its dimensionality, by changing only the size of the backbone's very last block, is a remarkably effective technique to mitigate the pretraining bias.

-

Why is the winner the best? [paper]

-

Author List

Matthias Eisenmann, Annika Reinke, Vivienn Weru, Minu Dietlinde Tizabi, Fabian Isensee, Tim J. Adler, Sharib Ali, Vincent Andrearczyk, Marc Aubreville, Ujjwal Baid, Spyridon Bakas, Niranjan Balu, Sophia Bano, Jorge Bernal, Sebastian Bodenstedt, Alessandro Casella, Veronika Cheplygina, Marie Daum, Marleen de Bruijne, Adrien Depeursinge, Reuben Dorent, Jan Egger, David G. Ellis, Sandy Engelhardt, Melanie Ganz, Noha Ghatwary, Gabriel Girard, Patrick Godau, Anubha Gupta, Lasse Hansen, Kanako Harada, Mattias Heinrich, Nicholas Heller, Alessa Hering, Arnaud Huaulmé, Pierre Jannin, Ali Emre Kavur, Oldřich Kodym, Michal Kozubek, Jianning Li, Hongwei Li, Jun Ma, Carlos Martín-Isla, Bjoern Menze, Alison Noble, Valentin Oreiller, Nicolas Padoy, Sarthak Pati, Kelly Payette, Tim Rädsch, Jonathan Rafael-Patiño, Vivek Singh Bawa, Stefanie Speidel, Carole H. Sudre, Kimberlin van Wijnen, Martin Wagner, Donglai Wei, Amine Yamlahi, Moi Hoon Yap, Chun Yuan, Maximilian Zenk, Aneeq Zia, David Zimmerer, Dogu Baran Aydogan, Binod Bhattarai, Louise Bloch, Raphael Brüngel, Jihoon Cho, Chanyeol Choi, Qi Dou, Ivan Ezhov, Christoph M. Friedrich, Clifton Fuller, Rebati Raman Gaire, Adrian Galdran, Álvaro García Faura, Maria Grammatikopoulou, SeulGi Hong, Mostafa Jahanifar, Ikbeom Jang, Abdolrahim Kadkhodamohammadi, Inha Kang, Florian Kofler, Satoshi Kondo, Hugo Kuijf, Mingxing Li, Minh Huan Luu, Tomaž Martinčič, Pedro Morais, Mohamed A. Naser, Bruno Oliveira, David Owen, Subeen Pang, Jinah Park, Sung-Hong Park, Szymon Płotka, Elodie Puybareau, Nasir Rajpoot, Kanghyun Ryu, Numan Saeed, Adam Shephard, Pengcheng Shi, Dejan Štepec, Ronast Subedi, Guillaume Tochon, Helena R. Torres, Helene Urien, João L. Vilaça, Kareem Abdul Wahid, Haojie Wang, Jiacheng Wang, Liansheng Wang, Xiyue Wang, Benedikt Wiestler, Marek Wodzinski, Fangfang Xia, Juanying Xie, Zhiwei Xiong, Sen Yang, Yanwu Yang, Zixuan Zhao, Klaus Maier-Hein, Paul F. Jäger, Annette Kopp-Schneider, Lena Maier-Hein.
- Key Word: Benchmarking Competitions; Medical Imaging.

-

Digest

The article discusses the lack of investigation into what can be learned from international benchmarking competitions for image analysis methods. The authors conducted a multi-center study of 80 competitions conducted in the scope of IEEE ISBI 2021 and MICCAI 2021 to address this gap. Based on comprehensive descriptions of the submitted algorithms and their rankings, as well as participation strategies, statistical analyses revealed common characteristics of winning solutions. These typically include the use of multi-task learning and/or multi-stage pipelines, a focus on augmentation, image preprocessing, data curation, and postprocessing.

-

Sparks of Artificial General Intelligence: Early experiments with GPT-4. [paper]

- Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, Harsha Nori, Hamid Palangi, Marco Tulio Ribeiro, Yi Zhang.

- Key Word: Artificial General Intelligence; Benchmarking; GPT.

-

Digest

We discuss the rising capabilities and implications of recent large language models such as GPT-4. We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4's performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT.

-

Is forgetting less a good inductive bias for forward transfer? [paper]

- Jiefeng Chen, Timothy Nguyen, Dilan Gorur, Arslan Chaudhry. ICLR 2023

- Key Word: Continual Learning; Catastrophic Forgetting; Forward Transfer; Inductive Bias.

-

Digest

One of the main motivations of studying continual learning is that the problem setting allows a model to accrue knowledge from past tasks to learn new tasks more efficiently. However, recent studies suggest that the key metric that continual learning algorithms optimize, reduction in catastrophic forgetting, does not correlate well with the forward transfer of knowledge. We believe that the conclusion previous works reached is due to the way they measure forward transfer. We argue that the measure of forward transfer to a task should not be affected by the restrictions placed on the continual learner in order to preserve knowledge of previous tasks.

-

Dropout Reduces Underfitting. [paper] [code]

- Zhuang Liu, Zhiqiu Xu, Joseph Jin, Zhiqiang Shen, Trevor Darrell.

- Key Word: Dropout; Overfitting.

-

Digest

In this study, we demonstrate that dropout, usually regarded as a regularizer against overfitting, can also mitigate underfitting when used at the start of training. During the early phase, we find dropout reduces the directional variance of gradients across mini-batches and helps align the mini-batch gradients with the entire dataset's gradient. This helps counteract the stochasticity of SGD and limit the influence of individual batches on model training.

-

The Role of Pre-training Data in Transfer Learning. [paper]

- Rahim Entezari, Mitchell Wortsman, Olga Saukh, M. Moein Shariatnia, Hanie Sedghi, Ludwig Schmidt.

- Key Word: Pre-training; Transfer Learning.

-

Digest

We investigate the impact of pre-training data distribution on the few-shot and full fine-tuning performance using 3 pre-training methods (supervised, contrastive language-image and image-image), 7 pre-training datasets, and 9 downstream datasets. Through extensive controlled experiments, we find that the choice of the pre-training data source is essential for the few-shot transfer, but its role decreases as more data is made available for fine-tuning.

-

The Dormant Neuron Phenomenon in Deep Reinforcement Learning. [paper] [code]

- Ghada Sokar, Rishabh Agarwal, Pablo Samuel Castro, Utku Evci.

- Key Word: Dormant Neuron; Deep Reinforcement Learning.

-

Digest

The paper identifies the dormant neuron phenomenon in deep reinforcement learning, where inactive neurons increase and hinder network expressivity, affecting learning. To address this, they propose a method called ReDo, which recycles dormant neurons during training. ReDo reduces the number of dormant neurons, maintains network expressiveness, and leads to improved performance.
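Detecting and recycling dormant neurons could be sketched as below. The sketch assumes a neuron counts as dormant when its mean absolute activation, normalized by the layer average, falls at or below a threshold `tau`, and that recycling means re-drawing its incoming weights and zeroing its outgoing weights. These details, the function names, and the tiny network are assumptions for illustration rather than the authors' implementation.

```python
import numpy as np


def dormant_mask(activations, tau=0.1):
    """activations: (batch, num_neurons). True where a neuron is dormant."""
    mean_abs = np.abs(activations).mean(axis=0)
    scores = mean_abs / (mean_abs.mean() + 1e-9)  # normalize by layer average
    return scores <= tau


def recycle(W_in, b, W_out, mask, rng):
    """Re-draw incoming weights of dormant neurons and zero their outgoing ones."""
    W_in, b, W_out = W_in.copy(), b.copy(), W_out.copy()
    W_in[:, mask] = rng.normal(size=(W_in.shape[0], int(mask.sum())))
    b[mask] = 0.0
    W_out[mask, :] = 0.0  # the recycled neuron starts with no effect on the output
    return W_in, b, W_out


rng = np.random.default_rng(0)
x = rng.normal(size=(256, 8))
W_in = rng.normal(size=(8, 16))
b = np.zeros(16)
b[[3, 7]] = -100.0                       # force two hidden units to stay inactive
W_out = rng.normal(size=(16, 4))

hidden = np.maximum(x @ W_in + b, 0.0)   # ReLU activations
mask = dormant_mask(hidden)
print("dormant units:", np.flatnonzero(mask).tolist())

W_in, b, W_out = recycle(W_in, b, W_out, mask, rng)
hidden_after = np.maximum(x @ W_in + b, 0.0)
print("mean activity of recycled units:", hidden_after[:, mask].mean())
```

Zeroing the outgoing weights keeps the network's output unchanged at the moment of recycling, while the fresh incoming weights let the neuron become active again.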

-

Cliff-Learning. [paper]

- Tony T. Wang, Igor Zablotchi, Nir Shavit, Jonathan S. Rosenfeld.

- Key Word: Foundation Models; Fine-Tuning.

-

Digest

We study the data-scaling of transfer learning from foundation models in the low-downstream-data regime. We observe an intriguing phenomenon which we call cliff-learning. Cliff-learning refers to regions of data-scaling laws where performance improves at a faster than power law rate (i.e. regions of concavity on a log-log scaling plot).
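The "concavity on a log-log plot" criterion can be checked numerically. A pure power law has a constant slope in log-log space, so a region where the local slope keeps getting steeper improves faster than any power law. The sketch below uses a made-up error curve purely for illustration; the function names and the tolerance are assumptions.

```python
import math


def local_slopes(ns, errs):
    """Slopes between consecutive points of the curve in log-log space."""
    pts = [(math.log(n), math.log(e)) for n, e in zip(ns, errs)]
    return [(y1 - y0) / (x1 - x0) for (x0, y0), (x1, y1) in zip(pts, pts[1:])]


def has_concave_region(slopes, tol=1e-6):
    """True if the slope becomes steeper somewhere along the curve."""
    return any(b < a - tol for a, b in zip(slopes, slopes[1:]))


ns = [10, 20, 40, 80, 160]                                # downstream dataset sizes
power_law = [n ** -0.5 for n in ns]                       # error ~ n^(-1/2)
cliff = [n ** -0.5 * math.exp(-n / 100) for n in ns]      # made-up cliff-like curve

print("power law slopes:", [round(s, 3) for s in local_slopes(ns, power_law)])
print("cliff-like slopes:", [round(s, 3) for s in local_slopes(ns, cliff)])
print("concave region found:", has_concave_region(local_slopes(ns, cliff)))
```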

-

ModelDiff: A Framework for Comparing Learning Algorithms. [paper] [code]

- Harshay Shah, Sung Min Park, Andrew Ilyas, Aleksander Madry.

- Key Word: Representation-based Comparison; Example-level Comparisons; Comparing Feature Attributions.

-

Digest

We study the problem of (learning) algorithm comparison, where the goal is to find differences between models trained with two different learning algorithms. We begin by formalizing this goal as one of finding distinguishing feature transformations, i.e., input transformations that change the predictions of models trained with one learning algorithm but not the other. We then present ModelDiff, a method that leverages the datamodels framework (Ilyas et al., 2022) to compare learning algorithms based on how they use their training data.

-

Overfreezing Meets Overparameterization: A Double Descent Perspective on Transfer Learning of Deep Neural Networks. [paper]

- Yehuda Dar, Lorenzo Luzi, Richard G. Baraniuk.

- Key Word: Transfer Learning; Deep Double Descent; Overfreezing.

-

Digest

We study the generalization behavior of transfer learning of deep neural networks (DNNs). We adopt the overparameterization perspective -- featuring interpolation of the training data (i.e., approximately zero train error) and the double descent phenomenon -- to explain the delicate effect of the transfer learning setting on generalization performance. We study how the generalization behavior of transfer learning is affected by the dataset size in the source and target tasks, the number of transferred layers that are kept frozen in the target DNN training, and the similarity between the source and target tasks.

-

How to Fine-Tune Vision Models with SGD. [paper]

- Ananya Kumar, Ruoqi Shen, Sébastien Bubeck, Suriya Gunasekar.

- Key Word: Fine-Tuning; Out-of-Distribution Generalization.

-

Digest

We show that fine-tuning with AdamW performs substantially better than SGD on modern Vision Transformer and ConvNeXt models. We find that large gaps in performance between SGD and AdamW occur when the fine-tuning gradients in the first "embedding" layer are much larger than in the rest of the model. Our analysis suggests an easy fix that works consistently across datasets and models: merely freezing the embedding layer (less than 1% of the parameters) leads to SGD performing competitively with AdamW while using less memory.

-

What Images are More Memorable to Machines? [paper] [code]

- Junlin Han, Huangying Zhan, Jie Hong, Pengfei Fang, Hongdong Li, Lars Petersson, Ian Reid.

- Key Word: Self-Supervised Memorization Quantification.

-

Digest

This paper studies the problem of measuring and predicting how memorable an image is to pattern recognition machines, as a path to explore machine intelligence. Firstly, we propose a self-supervised machine memory quantification pipeline, dubbed "MachineMem measurer", to collect machine memorability scores of images. Similar to humans, machines also tend to memorize certain kinds of images, whereas the types of images that machines and humans memorize are different.

-

Harmonizing the object recognition strategies of deep neural networks with humans. [paper] [code]

- Thomas Fel, Ivan Felipe, Drew Linsley, Thomas Serre.

- Key Word: Interpretation; Neural Harmonizer; Psychophysics.

-

Digest

Across 84 different DNNs trained on ImageNet and three independent datasets measuring the where and the how of human visual strategies for object recognition on those images, we find a systematic trade-off between DNN categorization accuracy and alignment with human visual strategies for object recognition. State-of-the-art DNNs are progressively becoming less aligned with humans as their accuracy improves. We rectify this growing issue with our neural harmonizer: a general-purpose training routine that both aligns DNN and human visual strategies and improves categorization accuracy.

-

Pruning's Effect on Generalization Through the Lens of Training and Regularization. [paper]

- Tian Jin, Michael Carbin, Daniel M. Roy, Jonathan Frankle, Gintare Karolina Dziugaite.

- Key Word: Pruning; Regularization.

-

Digest

We show that size reduction cannot fully account for the generalization-improving effect of standard pruning algorithms. Instead, we find that pruning leads to better training at specific sparsities, improving the training loss over the dense model. We find that pruning also leads to additional regularization at other sparsities, reducing the accuracy degradation due to noisy examples over the dense model. Pruning extends model training time and reduces model size. These two factors improve training and add regularization respectively. We empirically demonstrate that both factors are essential to fully explaining pruning's impact on generalization.

-

What does a deep neural network confidently perceive? The effective dimension of high certainty class manifolds and their low confidence boundaries. [paper] [code]

- Stanislav Fort, Ekin Dogus Cubuk, Surya Ganguli, Samuel S. Schoenholz.

- Key Word: Class Manifold; Linear Region; Out-of-Distribution Generalization.

-

Digest

Deep neural network classifiers partition input space into high confidence regions for each class. The geometry of these class manifolds (CMs) is widely studied and intimately related to model performance; for example, the margin depends on CM boundaries. We exploit the notions of Gaussian width and Gordon's escape theorem to tractably estimate the effective dimension of CMs and their boundaries through tomographic intersections with random affine subspaces of varying dimension. We show several connections between the dimension of CMs, generalization, and robustness.

-

In What Ways Are Deep Neural Networks Invariant and How Should We Measure This? [paper]

- Henry Kvinge, Tegan H. Emerson, Grayson Jorgenson, Scott Vasquez, Timothy Doster, Jesse D. Lew. NeurIPS 2022

- Key Word: Invariance and Equivariance.

-

Digest

We explore the nature of invariance and equivariance of deep learning models with the goal of better understanding the ways in which they actually capture these concepts on a formal level. We introduce a family of invariance and equivariance metrics that allows us to quantify these properties in a way that disentangles them from other metrics such as loss or accuracy.

-

Relative representations enable zero-shot latent space communication. [paper]

- Luca Moschella, Valentino Maiorca, Marco Fumero, Antonio Norelli, Francesco Locatello, Emanuele Rodolà.

- Key Word: Representation Similarity; Model Stitching.

-

Digest

Neural networks embed the geometric structure of a data manifold lying in a high-dimensional space into latent representations. Ideally, the distribution of the data points in the latent space should depend only on the task, the data, the loss, and other architecture-specific constraints. However, factors such as the random weights initialization, training hyperparameters, or other sources of randomness in the training phase may induce incoherent latent spaces that hinder any form of reuse. Nevertheless, we empirically observe that, under the same data and modeling choices, distinct latent spaces typically differ by an unknown quasi-isometric transformation: that is, in each space, the distances between the encodings do not change. In this work, we propose to adopt pairwise similarities as an alternative data representation, that can be used to enforce the desired invariance without any additional training.
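The pairwise-similarity representation can be sketched as follows, assuming each sample is re-encoded by its cosine similarity to a fixed set of anchor samples. The second latent space here is simulated by rotating and rescaling the first, which stands in for two independently trained encoders; the anchor selection and all names are illustrative assumptions.

```python
import numpy as np


def relative_representation(X, anchors):
    """Encode each row of X by its cosine similarity to every anchor."""
    Xn = X / np.linalg.norm(X, axis=1, keepdims=True)
    An = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    return Xn @ An.T


rng = np.random.default_rng(0)
Z1 = rng.normal(size=(100, 16))                  # latent space of "model 1"
Q, _ = np.linalg.qr(rng.normal(size=(16, 16)))   # random orthogonal matrix
Z2 = 3.0 * Z1 @ Q                                # "model 2": rotated and rescaled

anchor_idx = rng.choice(100, size=10, replace=False)  # same anchor samples in both spaces
R1 = relative_representation(Z1, Z1[anchor_idx])
R2 = relative_representation(Z2, Z2[anchor_idx])

print("absolute encodings match:", np.allclose(Z1, Z2))
print("relative encodings match:", np.allclose(R1, R2))
```

Cosine similarity is unchanged by rotations and positive rescaling, so the relative encodings coincide even though the raw latent vectors do not. No extra training is involved, only a change of coordinates.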

-

Minimalistic Unsupervised Learning with the Sparse Manifold Transform. [paper]

- Yubei Chen, Zeyu Yun, Yi Ma, Bruno Olshausen, Yann LeCun.

- Key Word: Self-Supervision; Sparse Manifold Transform.

-

Digest

We describe a minimalistic and interpretable method for unsupervised learning, without resorting to data augmentation, hyperparameter tuning, or other engineering designs, that achieves performance close to the SOTA SSL methods. Our approach leverages the sparse manifold transform, which unifies sparse coding, manifold learning, and slow feature analysis. With a one-layer deterministic sparse manifold transform, one can achieve 99.3% KNN top-1 accuracy on MNIST, 81.1% KNN top-1 accuracy on CIFAR-10 and 53.2% on CIFAR-100.

-

A Review of Sparse Expert Models in Deep Learning. [paper]

- William Fedus, Jeff Dean, Barret Zoph.

- Key Word: Mixture-of-Experts.

-

Digest

Sparse expert models are a thirty-year old concept re-emerging as a popular architecture in deep learning. This class of architecture encompasses Mixture-of-Experts, Switch Transformers, Routing Networks, BASE layers, and others, all with the unifying idea that each example is acted on by a subset of the parameters. By doing so, the degree of sparsity decouples the parameter count from the compute per example allowing for extremely large, but efficient models. The resulting models have demonstrated significant improvements across diverse domains such as natural language processing, computer vision, and speech recognition. We review the concept of sparse expert models, provide a basic description of the common algorithms, contextualize the advances in the deep learning era, and conclude by highlighting areas for future work.
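The unifying idea, that each example touches only a subset of the parameters, can be sketched with a top-k router over a few linear experts. This is a generic illustration rather than any specific architecture from the review: the gating rule (softmax over the selected logits), the shapes, and the names are assumptions.

```python
import numpy as np


def top_k_route(x, router_W, expert_Ws, k=2):
    """Send each token to its k highest-scoring experts and mix their outputs.

    x: (tokens, d_in), router_W: (d_in, num_experts),
    expert_Ws: (num_experts, d_in, d_out).
    """
    logits = x @ router_W                              # (tokens, num_experts)
    top = np.argsort(-logits, axis=1)[:, :k]           # indices of chosen experts
    top_logits = np.take_along_axis(logits, top, axis=1)
    gates = np.exp(top_logits - top_logits.max(axis=1, keepdims=True))
    gates /= gates.sum(axis=1, keepdims=True)          # softmax over chosen experts
    out = np.zeros((x.shape[0], expert_Ws.shape[2]))
    for t in range(x.shape[0]):
        for j in range(k):
            # Only k of the experts run for this token.
            out[t] += gates[t, j] * (x[t] @ expert_Ws[top[t, j]])
    return out, top


rng = np.random.default_rng(0)
num_experts, d_in, d_out = 8, 4, 3
x = rng.normal(size=(5, d_in))
router_W = rng.normal(size=(d_in, num_experts))
expert_Ws = rng.normal(size=(num_experts, d_in, d_out))

out, top = top_k_route(x, router_W, expert_Ws, k=2)
print("experts used per token:", top.tolist())
```

Adding experts increases the parameter count, but the per-token compute stays tied to `k`, which is the decoupling the digest describes.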

-

A Data-Based Perspective on Transfer Learning. [paper] [code]

- Saachi Jain, Hadi Salman, Alaa Khaddaj, Eric Wong, Sung Min Park, Aleksander Madry.

- Key Word: Transfer Learning; Influence Function; Data Leakage.

-

Digest

It is commonly believed that in transfer learning including more pre-training data translates into better performance. However, recent evidence suggests that removing data from the source dataset can actually help too. In this work, we take a closer look at the role of the source dataset's composition in transfer learning and present a framework for probing its impact on downstream performance. Our framework gives rise to new capabilities such as pinpointing transfer learning brittleness as well as detecting pathologies such as data-leakage and the presence of misleading examples in the source dataset.

-

When Does Re-initialization Work? [paper]

- Sheheryar Zaidi, Tudor Berariu, Hyunjik Kim, Jörg Bornschein, Claudia Clopath, Yee Whye Teh, Razvan Pascanu.

- Key Word: Re-initialization; Regularization.

-

Digest

We conduct an extensive empirical comparison of standard training with a selection of re-initialization methods to answer this question, training over 15,000 models on a variety of image classification benchmarks. We first establish that such methods are consistently beneficial for generalization in the absence of any other regularization. However, when deployed alongside other carefully tuned regularization techniques, re-initialization methods offer little to no added benefit for generalization, although optimal generalization performance becomes less sensitive to the choice of learning rate and weight decay hyperparameters. To investigate the impact of re-initialization methods on noisy data, we also consider learning under label noise. Surprisingly, in this case, re-initialization significantly improves upon standard training, even in the presence of other carefully tuned regularization techniques.

-

How You Start Matters for Generalization. [paper]

- Sameera Ramasinghe, Lachlan MacDonald, Moshiur Farazi, Hemanth Sartachandran, Simon Lucey.

- Key Word: Implicit regularization; Fourier Spectrum.

-

Digest

We promote a shift of focus towards initialization rather than neural architecture or (stochastic) gradient descent to explain this implicit regularization. Through a Fourier lens, we derive a general result for the spectral bias of neural networks and show that the generalization of neural networks is heavily tied to their initialization. Further, we empirically solidify the developed theoretical insights using practical, deep networks.

-

Rethinking the Role of Demonstrations: What Makes In-Context Learning Work? [paper] [code]

- Sewon Min, Xinxi Lyu, Ari Holtzman, Mikel Artetxe, Mike Lewis, Hannaneh Hajishirzi, Luke Zettlemoyer.

- Key Word: Natural Language Processing; In-Context Learning.

-

Digest

We show that ground truth demonstrations are in fact not required -- randomly replacing labels in the demonstrations barely hurts performance, consistently over 12 different models including GPT-3. Instead, we find that other aspects of the demonstrations are the key drivers of end task performance, including the fact that they provide a few examples of (1) the label space, (2) the distribution of the input text, and (3) the overall format of the sequence.

-

Masked Autoencoders Are Scalable Vision Learners. [paper] [code]

- Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollár, Ross Girshick. CVPR 2022

- Key Word: Self-Supervision; Autoencoders.

-

Digest

This paper shows that masked autoencoders (MAE) are scalable self-supervised learners for computer vision. Our MAE approach is simple: we mask random patches of the input image and reconstruct the missing pixels. It is based on two core designs. First, we develop an asymmetric encoder-decoder architecture, with an encoder that operates only on the visible subset of patches (without mask tokens), along with a lightweight decoder that reconstructs the original image from the latent representation and mask tokens. Second, we find that masking a high proportion of the input image, e.g., 75%, yields a nontrivial and meaningful self-supervisory task.
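A minimal sketch of the random masking step, assuming an image already split into a flat list of patches. The helper name `random_patch_mask` and the 14 x 14 patch grid are illustrative assumptions, not the authors' implementation.

```python
import random

def random_patch_mask(num_patches, mask_ratio=0.75, seed=None):
    """Split patch indices into (visible, masked) by uniform random sampling."""
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_masked = int(num_patches * mask_ratio)
    masked = sorted(indices[:num_masked])
    visible = sorted(indices[num_masked:])
    return visible, masked

# Assumed setup: a 224x224 image cut into 16x16 patches gives 14 * 14 = 196 patches.
visible, masked = random_patch_mask(196, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))  # 49 147
```

Only the `visible` patches would be fed to the encoder; the decoder would receive the encoded visible patches plus mask tokens at the `masked` positions.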

-

Learning in High Dimension Always Amounts to Extrapolation. [paper]

- Randall Balestriero, Jerome Pesenti, Yann LeCun.

- Key Word: Interpolation and Extrapolation.

-

Digest

The notion of interpolation and extrapolation is fundamental in various fields from deep learning to function approximation. Interpolation occurs for a sample x whenever this sample falls inside or on the boundary of the given dataset's convex hull. Extrapolation occurs when x falls outside of that convex hull. One fundamental (mis)conception is that state-of-the-art algorithms work so well because of their ability to correctly interpolate training data. A second (mis)conception is that interpolation happens throughout tasks and datasets; in fact, many intuitions and theories rely on that assumption. We empirically and theoretically argue against those two points and demonstrate that on any high-dimensional (>100) dataset, interpolation almost surely never happens.

-

Understanding Dataset Difficulty with V-Usable Information. [paper] [code]

- Kawin Ethayarajh, Yejin Choi, Swabha Swayamdipta. ICML 2022

- Key Word: Dataset Difficulty Measures; Information Theory.

-

Digest

Estimating the difficulty of a dataset typically involves comparing state-of-the-art models to humans; the bigger the performance gap, the harder the dataset is said to be. However, this comparison provides little understanding of how difficult each instance in a given distribution is, or what attributes make the dataset difficult for a given model. To address these questions, we frame dataset difficulty -- w.r.t. a model V -- as the lack of V-usable information (Xu et al., 2019), where a lower value indicates a more difficult dataset for V. We further introduce pointwise V-information (PVI) for measuring the difficulty of individual instances w.r.t. a given distribution.
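As a rough sketch, PVI can be read as the gain in log-probability of the gold label when the model sees the input versus a null input: PVI(x -> y) = -log2 g'[∅](y) + log2 g[x](y). The code below assumes the two probabilities have already been produced by two separately fitted models; the function names and the numbers are hypothetical.

```python
import math

def pointwise_v_information(p_with_input, p_null):
    """PVI in bits: log2 p(y | x) - log2 p(y | null input)."""
    return math.log2(p_with_input) - math.log2(p_null)

def v_information(pairs):
    """Estimate V-usable information as the mean PVI over held-out instances."""
    return sum(pointwise_v_information(p, q) for p, q in pairs) / len(pairs)

# Hypothetical (p_with_input, p_null) values for three held-out instances.
pairs = [(0.5, 0.25), (0.9, 0.5), (0.1, 0.5)]
for p, q in pairs:
    print(round(pointwise_v_information(p, q), 3))
print(round(v_information(pairs), 3))
```

A negative PVI (the third instance here) marks an example the model gets more wrong with the input than without it, which is one way hard or mislabeled instances show up.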

-

Exploring the Limits of Large Scale Pre-training. [paper]

- Samira Abnar, Mostafa Dehghani, Behnam Neyshabur, Hanie Sedghi. ICLR 2022

- Key Word: Pre-training.

-

Digest

We investigate more than 4800 experiments on Vision Transformers, MLP-Mixers and ResNets with number of parameters ranging from ten million to ten billion, trained on the largest scale of available image data (JFT, ImageNet21K) and evaluated on more than 20 downstream image recognition tasks. We propose a model for downstream performance that reflects the saturation phenomena and captures the nonlinear relationship in performance of upstream and downstream tasks.

-

Stochastic Training is Not Necessary for Generalization. [paper] [code]

- Jonas Geiping, Micah Goldblum, Phillip E. Pope, Michael Moeller, Tom Goldstein. ICLR 2022

- Key Word: Stochastic Gradient Descent; Regularization.

-

Digest

It is widely believed that the implicit regularization of SGD is fundamental to the impressive generalization behavior we observe in neural networks. In this work, we demonstrate that non-stochastic full-batch training can achieve comparably strong performance to SGD on CIFAR-10 using modern architectures. To this end, we show that the implicit regularization of SGD can be completely replaced with explicit regularization even when comparing against a strong and well-researched baseline.

-

Pointer Value Retrieval: A new benchmark for understanding the limits of neural network generalization. [paper]

- Chiyuan Zhang, Maithra Raghu, Jon Kleinberg, Samy Bengio.

- Key Word: Out-of-Distribution Generalization.

-

Digest

In this paper we introduce a novel benchmark, Pointer Value Retrieval (PVR) tasks, that explore the limits of neural network generalization. We demonstrate that this task structure provides a rich testbed for understanding generalization, with our empirical study showing large variations in neural network performance based on dataset size, task complexity and model architecture.

-

What can linear interpolation of neural network loss landscapes tell us? [paper]

- Tiffany Vlaar, Jonathan Frankle. ICML 2022

- Key Word: Linear Interpolation; Loss Landscapes.

-

Digest

We put inferences of this kind to the test, systematically evaluating how linear interpolation and final performance vary when altering the data, choice of initialization, and other optimizer and architecture design choices. Further, we use linear interpolation to study the role played by individual layers and substructures of the network. We find that certain layers are more sensitive to the choice of initialization, but that the shape of the linear path is not indicative of the changes in test accuracy of the model.

-

Can Vision Transformers Learn without Natural Images? [paper] [code]

- Kodai Nakashima, Hirokatsu Kataoka, Asato Matsumoto, Kenji Iwata, Nakamasa Inoue. AAAI 2022

- Key Word: Formula-driven Supervised Learning; Vision Transformer.

-

Digest

We pre-train ViT without any image collections and annotation labor. We experimentally verify that our proposed framework partially outperforms sophisticated Self-Supervised Learning (SSL) methods like SimCLRv2 and MoCov2 without using any natural images in the pre-training phase. Moreover, although the ViT pre-trained without natural images produces some different visualizations from ImageNet pre-trained ViT, it can interpret natural image datasets to a large extent.

-

The Low-Rank Simplicity Bias in Deep Networks. [paper]

- Minyoung Huh, Hossein Mobahi, Richard Zhang, Brian Cheung, Pulkit Agrawal, Phillip Isola.

- Key Word: Low-Rank Embedding; Inductive Bias.

-

Digest

We make a series of empirical observations that investigate and extend the hypothesis that deeper networks are inductively biased to find solutions with lower effective rank embeddings. We conjecture that this bias exists because the volume of functions that maps to low effective rank embedding increases with depth. We show empirically that our claim holds true on finite width linear and non-linear models on practical learning paradigms and show that on natural data, these are often the solutions that generalize well.

-

Gradient Descent on Neural Networks Typically Occurs at the Edge of Stability. [paper] [code]

- Jeremy M. Cohen, Simran Kaur, Yuanzhi Li, J. Zico Kolter, Ameet Talwalkar. ICLR 2021

- Key Word: Edge of Stability.

-

Digest

We empirically demonstrate that full-batch gradient descent on neural network training objectives typically operates in a regime we call the Edge of Stability. In this regime, the maximum eigenvalue of the training loss Hessian hovers just above the numerical value 2/(step size), and the training loss behaves non-monotonically over short timescales, yet consistently decreases over long timescales. Since this behavior is inconsistent with several widespread presumptions in the field of optimization, our findings raise questions as to whether these presumptions are relevant to neural network training.
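The threshold 2/(step size) comes from the classical stability condition for gradient descent on a quadratic. A toy one-dimensional sketch (not the paper's experiments; the function name and values are illustrative) shows iterates shrinking when the curvature is below the threshold and growing when it is above:

```python
def gradient_descent_on_quadratic(curvature, step_size, x0=1.0, steps=50):
    """Run gradient descent on f(x) = 0.5 * curvature * x**2; return final |x|."""
    x = x0
    for _ in range(steps):
        grad = curvature * x          # f'(x) = curvature * x
        x = x - step_size * grad      # each step multiplies x by (1 - step_size * curvature)
    return abs(x)

step_size = 0.1
threshold = 2.0 / step_size  # curvature above this makes |1 - step_size * curvature| > 1

below = gradient_descent_on_quadratic(19.0, step_size)  # contraction factor 0.9
above = gradient_descent_on_quadratic(21.0, step_size)  # expansion factor 1.1
print(threshold, below < 1.0, above > 1.0)
```

On a fixed quadratic, crossing the threshold means divergence; the surprising observation in the digest is that neural network training hovers near this value while the loss still decreases over long timescales.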

-

Pre-training without Natural Images. [paper] [code]

- Hirokatsu Kataoka, Kazushige Okayasu, Asato Matsumoto, Eisuke Yamagata, Ryosuke Yamada, Nakamasa Inoue, Akio Nakamura, Yutaka Satoh. ACCV 2020

- Key Word: Formula-driven Supervised Learning.

-

Digest

The paper proposes a novel concept, Formula-driven Supervised Learning. We automatically generate image patterns and their category labels by assigning fractals, which are based on a natural law existing in the background knowledge of the real world. Theoretically, the use of automatically generated images instead of natural images in the pre-training phase allows us to generate an infinite scale dataset of labeled images. Although the models pre-trained with the proposed Fractal DataBase (FractalDB), a database without natural images, do not necessarily outperform models pre-trained with human annotated datasets in all settings, we are able to partially surpass the accuracy of ImageNet/Places pre-trained models.

-

When Do Curricula Work? [paper] [code]

- Xiaoxia Wu, Ethan Dyer, Behnam Neyshabur. ICLR 2021

- Key Word: Curriculum Learning.

-

Digest

We set out to investigate the relative benefits of ordered learning. We first investigate the implicit curricula resulting from architectural and optimization bias and find that samples are learned in a highly consistent order. Next, to quantify the benefit of explicit curricula, we conduct extensive experiments over thousands of orderings spanning three kinds of learning: curriculum, anti-curriculum, and random-curriculum -- in which the size of the training dataset is dynamically increased over time, but the examples are randomly ordered.

-

In Search of Robust Measures of Generalization. [paper] [code]

- Gintare Karolina Dziugaite, Alexandre Drouin, Brady Neal, Nitarshan Rajkumar, Ethan Caballero, Linbo Wang, Ioannis Mitliagkas, Daniel M. Roy. NeurIPS 2020

- Key Word: Generalization Measures.

-

Digest

One of the principal scientific challenges in deep learning is explaining generalization, i.e., why the particular way the community now trains networks to achieve small training error also leads to small error on held-out data from the same population. It is widely appreciated that some worst-case theories -- such as those based on the VC dimension of the class of predictors induced by modern neural network architectures -- are unable to explain empirical performance. A large volume of work aims to close this gap, primarily by developing bounds on generalization error, optimization error, and excess risk. When evaluated empirically, however, most of these bounds are numerically vacuous. Focusing on generalization bounds, this work addresses the question of how to evaluate such bounds empirically.

-

The Deep Bootstrap Framework: Good Online Learners are Good Offline Generalizers. [paper] [code]

- Preetum Nakkiran, Behnam Neyshabur, Hanie Sedghi. ICLR 2021

- Key Word: Online Learning; Finite-Sample Deviations.

-

Digest

We propose a new framework for reasoning about generalization in deep learning. The core idea is to couple the Real World, where optimizers take stochastic gradient steps on the empirical loss, to an Ideal World, where optimizers take steps on the population loss. This leads to an alternate decomposition of test error into: (1) the Ideal World test error plus (2) the gap between the two worlds. If the gap (2) is universally small, this reduces the problem of generalization in offline learning to the problem of optimization in online learning.

-

Characterising Bias in Compressed Models. [paper]

- Sara Hooker, Nyalleng Moorosi, Gregory Clark, Samy Bengio, Emily Denton.

- Key Word: Pruning; Fairness.

-

Digest

The popularity and widespread use of pruning and quantization is driven by the severe resource constraints of deploying deep neural networks to environments with strict latency, memory and energy requirements. These techniques achieve high levels of compression with negligible impact on top-line metrics (top-1 and top-5 accuracy). However, overall accuracy hides disproportionately high errors on a small subset of examples; we call this subset Compression Identified Exemplars (CIE).

-

Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics. [paper] [code]

- Swabha Swayamdipta, Roy Schwartz, Nicholas Lourie, Yizhong Wang, Hannaneh Hajishirzi, Noah A. Smith, Yejin Choi. EMNLP 2020

- Key Word: Training Dynamics; Data Map; Curriculum Learning.

-

Digest

Large datasets have become commonplace in NLP research. However, the increased emphasis on data quantity has made it challenging to assess the quality of data. We introduce Data Maps---a model-based tool to characterize and diagnose datasets. We leverage a largely ignored source of information: the behavior of the model on individual instances during training (training dynamics) for building data maps.
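A small sketch of how training dynamics can be turned into data-map coordinates. It assumes the two per-instance statistics are the mean and the (population) standard deviation of the probability assigned to the gold label across epochs; the function name and the example trajectories are hypothetical.

```python
import statistics

def data_map_coordinates(gold_probs_per_epoch):
    """Return (confidence, variability) for one training instance.

    confidence  = mean gold-label probability across epochs
    variability = population standard deviation of that probability
    """
    confidence = statistics.mean(gold_probs_per_epoch)
    variability = statistics.pstdev(gold_probs_per_epoch)
    return confidence, variability

# Hypothetical gold-label probabilities recorded at the end of each epoch.
easy = [0.90, 0.95, 0.97, 0.99]       # high confidence, low variability
ambiguous = [0.20, 0.80, 0.30, 0.90]  # high variability
hard = [0.05, 0.10, 0.08, 0.12]       # low confidence, low variability

for name, probs in [("easy", easy), ("ambiguous", ambiguous), ("hard", hard)]:
    print(name, data_map_coordinates(probs))
```

Plotting every instance by these two coordinates gives the map; regions of the plot then suggest which examples are easy, ambiguous, or hard for the model.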

-

What is being transferred in transfer learning? [paper] [code]

- Behnam Neyshabur, Hanie Sedghi, Chiyuan Zhang. NeurIPS 2020

- Key Word: Transfer Learning.

-

Digest

We provide new tools and analyses to address these fundamental questions. Through a series of analyses on transferring to block-shuffled images, we separate the effect of feature reuse from learning low-level statistics of data and show that some benefit of transfer learning comes from the latter. We show that when training from pre-trained weights, the model stays in the same basin in the loss landscape, and different instances of such models are similar in feature space and close in parameter space.

-

Deep Isometric Learning for Visual Recognition. [paper] [code]

- Haozhi Qi, Chong You, Xiaolong Wang, Yi Ma, Jitendra Malik. ICML 2020

- Key Word: Isometric Networks.

-

Digest

This paper shows that deep vanilla ConvNets with neither normalization nor skip connections can also be trained to achieve surprisingly good performance on standard image recognition benchmarks. This is achieved by enforcing the convolution kernels to be near isometric during initialization and training, as well as by using a variant of ReLU that is shifted towards being isometric.

-

On the Generalization Benefit of Noise in Stochastic Gradient Descent. [paper]

- Samuel L. Smith, Erich Elsen, Soham De. ICML 2020

- Key Word: Stochastic Gradient Descent.

-

Digest

In this paper, we perform carefully designed experiments and rigorous hyperparameter sweeps on a range of popular models, which verify that small or moderately large batch sizes can substantially outperform very large batches on the test set. This occurs even when both models are trained for the same number of iterations and large batches achieve smaller training losses.

-

Do CNNs Encode Data Augmentations? [paper]

- Eddie Yan, Yanping Huang.

- Key Word: Data Augmentations.

-

Digest

Surprisingly, neural network features not only predict data augmentation transformations, but they predict many transformations with high accuracy. After validating that neural networks encode features corresponding to augmentation transformations, we show that these features are primarily encoded in the early layers of modern CNNs.

-

Do We Need Zero Training Loss After Achieving Zero Training Error? [paper] [code]

- Takashi Ishida, Ikko Yamane, Tomoya Sakai, Gang Niu, Masashi Sugiyama. ICML 2020

- Key Word: Regularization.

-

Digest

Our approach makes the loss float around the flooding level by doing mini-batched gradient descent as usual but gradient ascent if the training loss is below the flooding level. This can be implemented with one line of code, and is compatible with any stochastic optimizer and other regularizers. We experimentally show that flooding improves performance and as a byproduct, induces a double descent curve of the test loss.
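The "one line" can be sketched as `|loss - b| + b`, where `b` is the flooding level. The scalar version below is framework-free and only illustrative; the names `flooded_loss` and `gradient_scale` are assumptions, and in practice the same expression would be applied to a mini-batch loss tensor before backpropagation.

```python
def flooded_loss(loss, flood_level):
    """Flooding objective: equals the loss above the flood level, mirrored below it."""
    return abs(loss - flood_level) + flood_level

def gradient_scale(loss, flood_level):
    """Factor multiplying the original gradient: +1 (descent) above the level, -1 (ascent) below."""
    return 1.0 if loss > flood_level else -1.0

b = 0.1
for loss in (0.5, 0.12, 0.08, 0.02):
    print(loss, round(flooded_loss(loss, b), 4), gradient_scale(loss, b))
```

Because the derivative of `abs` flips sign at `b`, an ordinary optimizer step on the flooded loss becomes gradient ascent on the original loss whenever the training loss dips below the flooding level.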

-

Understanding Why Neural Networks Generalize Well Through GSNR of Parameters. [paper]

- Jinlong Liu, Guoqing Jiang, Yunzhi Bai, Ting Chen, Huayan Wang. ICLR 2020

- Key Word: Generalization Indicators.

-

Digest

In this paper, we provide a novel perspective on these issues using the gradient signal to noise ratio (GSNR) of parameters during training process of DNNs. The GSNR of a parameter is defined as the ratio between its gradient's squared mean and variance, over the data distribution.
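A minimal sketch of the GSNR definition for a single parameter, assuming the expectation over the data distribution is replaced by an empirical estimate over a list of per-sample gradients. The function name and the sample values are hypothetical.

```python
def gsnr(per_sample_grads):
    """Squared mean of the gradient divided by its variance (empirical estimate)."""
    n = len(per_sample_grads)
    mean = sum(per_sample_grads) / n
    variance = sum((g - mean) ** 2 for g in per_sample_grads) / n
    if variance == 0.0:
        return float("inf")  # all samples agree exactly
    return mean ** 2 / variance

# Gradients that agree in sign give a high ratio; conflicting ones give a low ratio.
print(gsnr([1.0, 3.0]))    # mean 2, variance 1
print(gsnr([-1.0, 1.0]))   # mean 0, so the ratio is 0
```

Intuitively, a large GSNR means most samples push the parameter in the same direction, which is the property the paper connects to generalization.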

-

Angular Visual Hardness. [paper]

- Beidi Chen, Weiyang Liu, Zhiding Yu, Jan Kautz, Anshumali Shrivastava, Animesh Garg, Anima Anandkumar. ICML 2020

- Key Word: Calibration; Example Hardness Measures.

-

Digest

We propose a novel measure for CNN models known as Angular Visual Hardness (AVH). Our comprehensive empirical studies show that AVH can serve as an indicator of generalization abilities of neural networks, and improving SOTA accuracy entails improving accuracy on hard examples.

-

Fantastic Generalization Measures and Where to Find Them. [paper] [code]

- Yiding Jiang, Behnam Neyshabur, Hossein Mobahi, Dilip Krishnan, Samy Bengio. ICLR 2020

- Key Word: Complexity Measures; Spurious Correlations.

-

Digest

We present the first large scale study of generalization in deep networks. We investigate more than 40 complexity measures taken from both theoretical bounds and empirical studies. We train over 10,000 convolutional networks by systematically varying commonly used hyperparameters. Hoping to uncover potentially causal relationships between each measure and generalization, we analyze carefully controlled experiments and show surprising failures of some measures as well as promising measures for further research.

-

Truth or Backpropaganda? An Empirical Investigation of Deep Learning Theory. [paper] [code]

- Micah Goldblum, Jonas Geiping, Avi Schwarzschild, Michael Moeller, Tom Goldstein. ICLR 2020

- Key Word: Local Minima.

-

Digest

The authors take a closer look at widely held beliefs about neural networks. Using a mix of analysis and experiment, they shed some light on the ways these assumptions break down.

-

Rapid Learning or Feature Reuse? Towards Understanding the Effectiveness of MAML. [paper] [code]

- Aniruddh Raghu, Maithra Raghu, Samy Bengio, Oriol Vinyals. ICLR 2020

- Key Word: Meta Learning.

-

Digest

Despite MAML's popularity, a fundamental open question remains -- is the effectiveness of MAML due to the meta-initialization being primed for rapid learning (large, efficient changes in the representations) or due to feature reuse, with the meta initialization already containing high quality features? We investigate this question, via ablation studies and analysis of the latent representations, finding that feature reuse is the dominant factor.

-

Finding the Needle in the Haystack with Convolutions: on the benefits of architectural bias. [paper] [code]

- Stéphane d'Ascoli, Levent Sagun, Joan Bruna, Giulio Biroli. NeurIPS 2019

- Key Word: Architectural Bias.

-

Digest

In particular, Convolutional Neural Networks (CNNs) are known to perform much better than Fully-Connected Networks (FCNs) on spatially structured data: the architectural structure of CNNs benefits from prior knowledge on the features of the data, for instance their translation invariance. The aim of this work is to understand this fact through the lens of dynamics in the loss landscape.

-

Adversarial Training Can Hurt Generalization. [paper]

- Aditi Raghunathan, Sang Michael Xie, Fanny Yang, John C. Duchi, Percy Liang.

- Key Word: Adversarial Examples.

-

Digest

While adversarial training can improve robust accuracy (against an adversary), it sometimes hurts standard accuracy (when there is no adversary). Previous work has studied this tradeoff between standard and robust accuracy, but only in the setting where no predictor performs well on both objectives in the infinite data limit. In this paper, we show that even when the optimal predictor with infinite data performs well on both objectives, a tradeoff can still manifest itself with finite data.

-

Bad Global Minima Exist and SGD Can Reach Them. [paper] [code]

- Shengchao Liu, Dimitris Papailiopoulos, Dimitris Achlioptas. NeurIPS 2020

- Key Word: Stochastic Gradient Descent.

-

Digest

Several works have aimed to explain why overparameterized neural networks generalize well when trained by Stochastic Gradient Descent (SGD). The consensus explanation that has emerged credits the randomized nature of SGD for the bias of the training process towards low-complexity models and, thus, for implicit regularization. We take a careful look at this explanation in the context of image classification with common deep neural network architectures. We find that if we do not regularize explicitly, then SGD can be easily made to converge to poorly-generalizing, high-complexity models: all it takes is to first train on a random labeling on the data, before switching to properly training with the correct labels.

-

Deep ReLU Networks Have Surprisingly Few Activation Patterns. [paper]

- Boris Hanin, David Rolnick. NeurIPS 2019

-

Digest

In this paper, we show that the average number of activation patterns for ReLU networks at initialization is bounded by the total number of neurons raised to the input dimension. We show empirically that this bound, which is independent of the depth, is tight both at initialization and during training, even on memorization tasks that should maximize the number of activation patterns.

-

Sensitivity of Deep Convolutional Networks to Gabor Noise. [paper] [code]

- Kenneth T. Co, Luis Muñoz-González, Emil C. Lupu.

- Key Word: Robustness.

-

Digest

Deep Convolutional Networks (DCNs) have been shown to be sensitive to Universal Adversarial Perturbations (UAPs): input-agnostic perturbations that fool a model on large portions of a dataset. These UAPs exhibit interesting visual patterns, but this phenomenon is, as yet, poorly understood. Our work shows that visually similar procedural noise patterns also act as UAPs. In particular, we demonstrate that different DCN architectures are sensitive to Gabor noise patterns. This behaviour, its causes, and implications deserve further in-depth study.

-

Rethinking the Usage of Batch Normalization and Dropout in the Training of Deep Neural Networks. [paper]

- Guangyong Chen, Pengfei Chen, Yujun Shi, Chang-Yu Hsieh, Benben Liao, Shengyu Zhang.

- Key Word: Batch Normalization; Dropout.

-

Digest

Our work is based on an excellent idea that whitening the inputs of neural networks can achieve a fast convergence speed. Given the well-known fact that independent components must be whitened, we introduce a novel Independent-Component (IC) layer before each weight layer, whose inputs would be made more independent.

-

A critical analysis of self-supervision, or what we can learn from a single image. [paper] [code]

- Yuki M. Asano, Christian Rupprecht, Andrea Vedaldi. ICLR 2020

- Key Word: Self-Supervision.

-

Digest

We show that three different and representative methods, BiGAN, RotNet and DeepCluster, can learn the first few layers of a convolutional network from a single image as well as using millions of images and manual labels, provided that strong data augmentation is used. However, for deeper layers the gap with manual supervision cannot be closed even if millions of unlabelled images are used for training.

-

Approximating CNNs with Bag-of-local-Features models works surprisingly well on ImageNet. [paper] [code]

- Wieland Brendel, Matthias Bethge. ICLR 2019

- Key Word: Bag-of-Features.

-

Digest

Our model, a simple variant of the ResNet-50 architecture called BagNet, classifies an image based on the occurrences of small local image features without taking into account their spatial ordering. This strategy is closely related to the bag-of-feature (BoF) models popular before the onset of deep learning and reaches a surprisingly high accuracy on ImageNet.

-

Transfusion: Understanding Transfer Learning for Medical Imaging. [paper] [code]

- Maithra Raghu, Chiyuan Zhang, Jon Kleinberg, Samy Bengio. NeurIPS 2019

- Key Word: Transfer Learning; Medical Imaging.

-

Digest

We explore properties of transfer learning for medical imaging. A performance evaluation on two large scale medical imaging tasks shows that, surprisingly, transfer offers little benefit to performance, and simple, lightweight models can perform comparably to ImageNet architectures.

-

Identity Crisis: Memorization and Generalization under Extreme Overparameterization. [paper]

- Chiyuan Zhang, Samy Bengio, Moritz Hardt, Michael C. Mozer, Yoram Singer. ICLR 2020

- Key Word: Memorization.

-

Digest

We study the interplay between memorization and generalization of overparameterized networks in the extreme case of a single training example and an identity-mapping task.

-

Are All Layers Created Equal? [paper]

- Chiyuan Zhang, Samy Bengio, Yoram Singer. JMLR

- Key Word: Robustness.

-

Digest

We show that the layers can be categorized as either "ambient" or "critical". Resetting the ambient layers to their initial values has no negative consequence, and in many cases they barely change throughout training. On the contrary, resetting the critical layers completely destroys the predictor and the performance drops to chance.

-

Why ReLU networks yield high-confidence predictions far away from the training data and how to mitigate the problem. [paper] [code]

- Matthias Hein, Maksym Andriushchenko, Julian Bitterwolf. CVPR 2019

- Key Word: ReLU.

-

Digest

Classifiers used in the wild, in particular for safety-critical systems, should not only have good generalization properties but also should know when they don't know, in particular make low confidence predictions far away from the training data. We show that ReLU type neural networks which yield a piecewise linear classifier function fail in this regard, as they almost always produce high confidence predictions far away from the training data.

-

An Empirical Study of Example Forgetting during Deep Neural Network Learning. [paper] [code]

- Mariya Toneva, Alessandro Sordoni, Remi Tachet des Combes, Adam Trischler, Yoshua Bengio, Geoffrey J. Gordon. ICLR 2019

- Key Word: Curriculum Learning; Sample Weighting; Example Forgetting.

-

Digest

We define a 'forgetting event' to have occurred when an individual training example transitions from being classified correctly to incorrectly over the course of learning. Across several benchmark data sets, we find that: (i) certain examples are forgotten with high frequency, and some not at all; (ii) a data set's (un)forgettable examples generalize across neural architectures; and (iii) based on forgetting dynamics, a significant fraction of examples can be omitted from the training data set while still maintaining state-of-the-art generalization performance.
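The definition of a forgetting event translates directly into a count over a per-example correctness history. The sketch below assumes one boolean per evaluation step for a single training example; the function name and the history are hypothetical.

```python
def count_forgetting_events(correct_history):
    """Count transitions from classified correctly to classified incorrectly."""
    events = 0
    for previous, current in zip(correct_history, correct_history[1:]):
        if previous and not current:
            events += 1
    return events

# Hypothetical history: learned, forgotten, relearned, then forgotten again.
history = [False, True, False, True, True, False]
print(count_forgetting_events(history))  # 2
```

Ranking examples by this count is one way to find candidates that could be dropped from the training set, in the spirit of finding (iii).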

-

On Implicit Filter Level Sparsity in Convolutional Neural Networks. [paper]

- Dushyant Mehta, Kwang In Kim, Christian Theobalt. CVPR 2019

- Key Word: Regularization; Sparsification.

-

Digest

We investigate filter level sparsity that emerges in convolutional neural networks (CNNs) which employ Batch Normalization and ReLU activation, and are trained with adaptive gradient descent techniques and L2 regularization or weight decay. We conduct an extensive experimental study casting our initial findings into hypotheses and conclusions about the mechanisms underlying the emergent filter level sparsity. This study allows new insight into the performance gap observed between adaptive and non-adaptive gradient descent methods in practice.

-

Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations. [paper] [code]

- Francesco Locatello, Stefan Bauer, Mario Lucic, Gunnar Rätsch, Sylvain Gelly, Bernhard Schölkopf, Olivier Bachem. ICML 2019

- Key Word: Disentanglement.

-

Digest

Our results suggest that future work on disentanglement learning should be explicit about the role of inductive biases and (implicit) supervision, investigate concrete benefits of enforcing disentanglement of the learned representations, and consider a reproducible experimental setup covering several data sets.

-

Insights on representational similarity in neural networks with canonical correlation. [paper] [code]

- Ari S. Morcos, Maithra Raghu, Samy Bengio. NeurIPS 2018

- Key Word: Representational Similarity.

-

Digest

Comparing representations in neural networks is fundamentally difficult as the structure of representations varies greatly, even across groups of networks trained on identical tasks, and over the course of training. Here, we develop projection weighted CCA (Canonical Correlation Analysis) as a tool for understanding neural networks, building off of SVCCA.

-

Layer rotation: a surprisingly powerful indicator of generalization in deep networks? [paper] [code]

- Simon Carbonnelle, Christophe De Vleeschouwer.

- Key Word: Weight Evolution.

-

Digest

Our work presents extensive empirical evidence that layer rotation, i.e. the evolution across training of the cosine distance between each layer's weight vector and its initialization, constitutes an impressively consistent indicator of generalization performance. In particular, larger cosine distances between final and initial weights of each layer consistently translate into better generalization performance of the final model.
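Layer rotation as described here reduces to a cosine distance between a layer's flattened current weights and its flattened initial weights. A minimal sketch, with hypothetical weight vectors and an illustrative function name:

```python
import math

def cosine_distance(u, v):
    """1 - cos(angle between u and v); 0 means same direction, 1 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

# Hypothetical flattened weights of one layer at initialization and after training.
w_init = [1.0, 0.0, 0.0]
w_rescaled = [2.0, 0.0, 0.0]   # only the norm changed: no rotation
w_rotated = [0.0, 1.0, 0.0]    # orthogonal to the initialization

print(cosine_distance(w_init, w_rescaled))
print(cosine_distance(w_init, w_rotated))
```

Tracking this value per layer over training gives the rotation curves; the metric ignores changes in weight norm and responds only to changes in direction.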

-

Sensitivity and Generalization in Neural Networks: an Empirical Study. [paper]

- Roman Novak, Yasaman Bahri, Daniel A. Abolafia, Jeffrey Pennington, Jascha Sohl-Dickstein. ICLR 2018

- Key Word: Sensitivity.

-

Digest

In this work, we investigate this tension between complexity and generalization through an extensive empirical exploration of two natural metrics of complexity related to sensitivity to input perturbations. We find that trained neural networks are more robust to input perturbations in the vicinity of the training data manifold, as measured by the norm of the input-output Jacobian of the network, and that it correlates well with generalization.

-

Deep Image Prior. [paper] [code]

- Dmitry Ulyanov, Andrea Vedaldi, Victor Lempitsky.

- Key Word: Low-Level Vision.

-

Digest

In this paper, we show that the structure of a generator network alone is sufficient to capture a great deal of low-level image statistics prior to any learning, contrary to the common view that such priors must be learned from a large number of example images. In order to do so, we show that a randomly-initialized neural network can be used as a handcrafted prior with excellent results in standard inverse problems such as denoising, super-resolution, and inpainting.

-

Critical Learning Periods in Deep Neural Networks. [paper]

- Alessandro Achille, Matteo Rovere, Stefano Soatto. ICLR 2019

- Key Word: Memorization.

-

Digest

Our findings indicate that the early transient is critical in determining the final solution of the optimization associated with training an artificial neural network. In particular, the effects of sensory deficits during a critical period cannot be overcome, no matter how much additional training is performed.

-

A Closer Look at Memorization in Deep Networks. [paper]

- Devansh Arpit, Stanisław Jastrzębski, Nicolas Ballas, David Krueger, Emmanuel Bengio, Maxinder S. Kanwal, Tegan Maharaj, Asja Fischer, Aaron Courville, Yoshua Bengio, Simon Lacoste-Julien. ICML 2017

- Key Word: Memorization.

-

Digest

In our experiments, we expose qualitative differences in gradient-based optimization of deep neural networks (DNNs) on noise vs. real data. We also demonstrate that for appropriately tuned explicit regularization (e.g., dropout) we can degrade DNN training performance on noise datasets without compromising generalization on real data.

-

Understanding deep learning requires rethinking generalization. [paper]

- Chiyuan Zhang, Samy Bengio, Moritz Hardt, Benjamin Recht, Oriol Vinyals. ICLR 2017

- Key Word: Memorization.

-

Digest

Through extensive systematic experiments, we show how traditional approaches, which attribute small generalization error to properties of the model family or to regularization, fail to explain why large neural networks generalize well in practice. Specifically, our experiments establish that state-of-the-art convolutional networks for image classification trained with stochastic gradient methods easily fit a random labeling of the training data.

-

Formation of Representations in Neural Networks. [paper]

- Liu Ziyin, Isaac Chuang, Tomer Galanti, Tomaso Poggio.

- Key Word: Canonical Representation Hypothesis; Neural Representation.

-

Digest

This paper proposes the Canonical Representation Hypothesis (CRH), which posits that during neural network training, a set of six alignment relations universally governs the formation of representations in hidden layers. Specifically, it suggests that the latent representations (R), weights (W), and neuron gradients (G) become mutually aligned, leading to compact representations that are invariant to task-irrelevant transformations. The paper also introduces the Polynomial Alignment Hypothesis (PAH), which arises when CRH is broken, resulting in power-law relations between R, W, and G. The authors suggest that balancing gradient noise and regularization is key to the emergence of these canonical representations and propose that these hypotheses could unify major deep learning phenomena like neural collapse and the neural feature ansatz.

-

Neural Collapse Meets Differential Privacy: Curious Behaviors of NoisyGD with Near-perfect Representation Learning. [paper]

- Chendi Wang, Yuqing Zhu, Weijie J. Su, Yu-Xiang Wang.

- Key Word: Neural Collapse; Differential Privacy.

-

Digest

Motivated by the finding of De et al. (2022) that large-scale representation learning improves differentially private (DP) learning, this paper analyzes DP fine-tuning through the phenomenon of Neural Collapse (NC) in deep learning and transfer learning. It establishes an error bound within the NC framework, evaluates feature quality, shows that DP fine-tuning is less robust than fine-tuning without DP, and suggests strategies to enhance its robustness, with empirical evidence supporting these findings.

-

Average gradient outer product as a mechanism for deep neural collapse. [paper]

- Daniel Beaglehole, Peter Súkeník, Marco Mondelli, Mikhail Belkin.

- Key Word: Neural Collapse; Average Gradient Outer Product.

-

Digest

This paper investigates the phenomenon of Deep Neural Collapse (DNC), where the final layers of Deep Neural Networks (DNNs) exhibit a highly structured representation of data. The study presents significant evidence that DNC primarily occurs through the process of deep feature learning, facilitated by the average gradient outer product (AGOP). This approach marks a departure from previous explanations that relied on feature-agnostic models. The authors highlight the role of the right singular vectors and values of the network weights in reducing within-class variability, a key aspect of DNC. They establish a link between this singular structure and the AGOP, further demonstrating experimentally and theoretically that AGOP can induce DNC even in randomly initialized neural networks. The paper also discusses Deep Recursive Feature Machines, a conceptual method representing AGOP feature learning in convolutional neural networks, and shows its capability to exhibit DNC.
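For readers unfamiliar with the AGOP, the sketch below computes it for a scalar-output predictor as the average of the gradient outer products over a set of inputs. It is a simplified illustration under stated assumptions: the predictor `f(x) = (w . x)^2`, its analytic gradient, and the sample inputs are all hypothetical, and the paper applies the idea layer-wise inside deep networks rather than to a closed-form function.

```python
def agop(grad_fn, inputs):
    """Average of grad f(x) grad f(x)^T over the inputs (scalar-output f)."""
    dim = len(inputs[0])
    total = [[0.0] * dim for _ in range(dim)]
    for x in inputs:
        g = grad_fn(x)
        for i in range(dim):
            for j in range(dim):
                total[i][j] += g[i] * g[j]
    return [[value / len(inputs) for value in row] for row in total]

# Hypothetical predictor f(x) = (w . x)^2, which depends on x only through w.
w = [1.0, 0.0]

def grad_f(x):
    """Analytic gradient of f: 2 (w . x) w."""
    s = sum(wi * xi for wi, xi in zip(w, x))
    return [2.0 * s * wi for wi in w]

agop_matrix = agop(grad_f, [[1.0, 3.0], [-1.0, 2.0]])
# The result is concentrated on the single direction w that f depends on.
```

The AGOP here is rank one and aligned with `w`, which is the sense in which it picks out the input directions the predictor actually uses.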

-

Pushing Boundaries: Mixup's Influence on Neural Collapse. [paper]

- Quinn Fisher, Haoming Meng, Vardan Papyan.

- Key Word: Mixup; Neural Collapse.

-

Digest

This paper investigates mixup, a technique that enhances deep neural network robustness by blending training data and labels, and studies its success through the geometric configurations of network activations. It finds that mixup leads to a unique alignment of last-layer activations that challenges prior expectations, with mixed examples of the same class aligning with the classifier and mixed examples of different classes marking distinct boundaries. This unexpected behavior suggests mixup affects deeper network layers in a novel way, diverging from simple convex combinations of class features. The study connects these findings to improved model calibration and supports them with a theoretical analysis, highlighting the role of a specific geometric pattern (simplex equiangular tight frame) in optimizing last-layer features for better performance.
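The blending step that mixup applies to a pair of training examples can be sketched as follows. This is a minimal illustration with one-hot labels; the Beta parameter `0.4` and the example vectors are assumptions chosen for the demo, not values taken from the paper.

```python
import random

def mixup_pair(x_i, y_i, x_j, y_j, lam):
    """Convex combination of two examples and their one-hot labels."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y

# Deterministic example with a fixed mixing coefficient.
x_fixed, y_fixed = mixup_pair([2.0, 0.0], [1.0, 0.0], [0.0, 4.0], [0.0, 1.0], 0.25)

# In training, the coefficient is usually drawn from Beta(alpha, alpha);
# alpha = 0.4 is an assumed value for illustration.
rng = random.Random(0)
lam = rng.betavariate(0.4, 0.4)
x_mix, y_mix = mixup_pair([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], lam)
```

The mixed label stays a valid probability vector, so the usual cross-entropy loss applies unchanged.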

-

Are Neurons Actually Collapsed? On the Fine-Grained Structure in Neural Representations. [paper]

- Yongyi Yang, Jacob Steinhardt, Wei Hu. ICML 2023

- Key Word: Neural Collapse.

-

Digest

The paper challenges the notion of "Neural Collapse" in well-trained neural networks, arguing that while the last-layer representations may appear to collapse, there is still fine-grained structure present in the representations that captures the intrinsic structure of the input distribution.

-

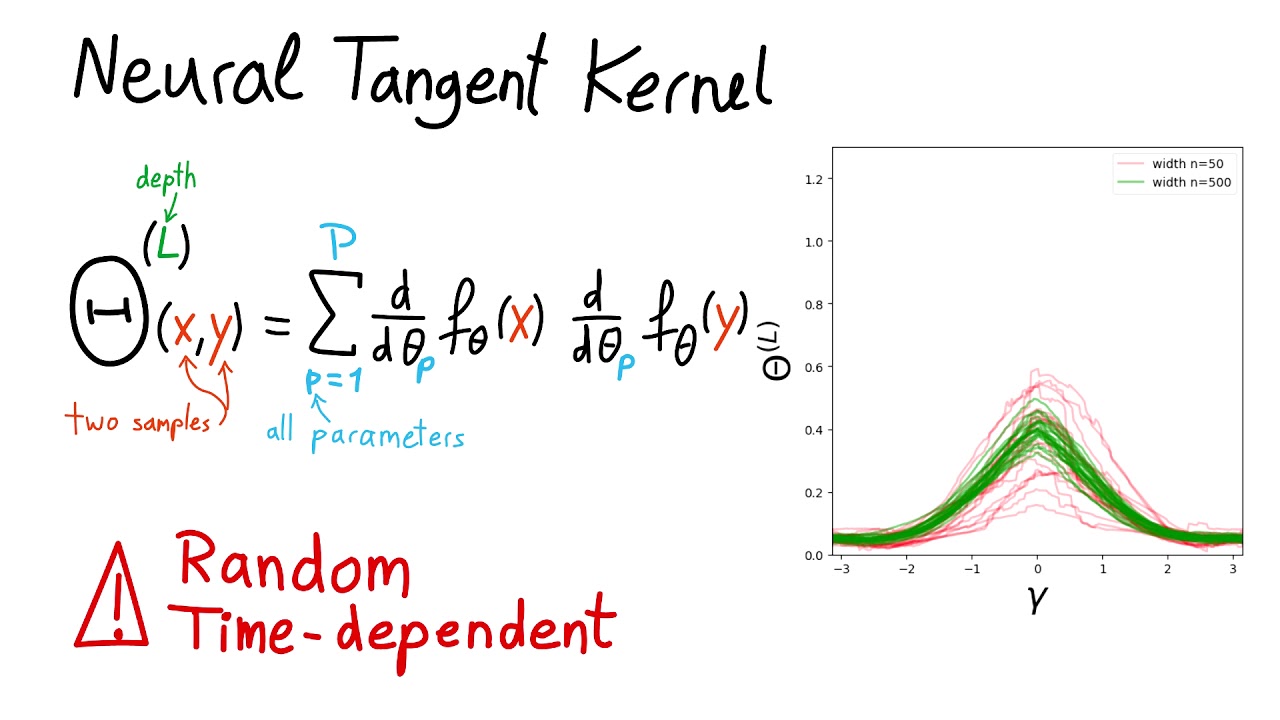

Neural (Tangent Kernel) Collapse. [paper]

- Mariia Seleznova, Dana Weitzner, Raja Giryes, Gitta Kutyniok, Hung-Hsu Chou.

- Key Word: Neural Collapse; Neural Tangent Kernel.

-

Digest

This paper investigates the relationship between the Neural Tangent Kernel (NTK), which tracks how deep neural networks (DNNs) change during training, and the Neural Collapse (NC) phenomenon, which refers to the symmetry and structure in the last-layer features of trained classification DNNs. The authors assume that the empirical NTK has a block structure that matches the class labels, meaning that samples of the same class are more correlated than samples of different classes. Under this assumption, they derive the dynamics of DNNs trained with mean squared error (MSE) loss and show that NC emerges in DNNs with block-structured NTK. They support their theory with large-scale experiments on three DNN architectures and three datasets.

-

Neural Collapse Inspired Feature-Classifier Alignment for Few-Shot Class Incremental Learning. [paper] [code]

- Yibo Yang, Haobo Yuan, Xiangtai Li, Zhouchen Lin, Philip Torr, Dacheng Tao. ICLR 2023

- Key Word: Few-Shot Class Incremental Learning; Neural Collapse.

-

Digest

Inspired by the recently discovered phenomenon of neural collapse, we address the misalignment dilemma between features and classifiers in few-shot class-incremental learning (FSCIL). Neural collapse reveals that the last-layer features of the same class will collapse into a vertex, and the vertices of all classes are aligned with the classifier prototypes, which are formed as a simplex equiangular tight frame (ETF). It corresponds to an optimal geometric structure for classification due to the maximized Fisher Discriminant Ratio.
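The simplex ETF geometry mentioned above can be checked numerically. The sketch below builds the canonical K-dimensional form `sqrt(K/(K-1)) (I - (1/K) 1 1^T)`; it omits the partial orthogonal matrix that would embed the frame in a feature space of another dimension, and the function names are illustrative.

```python
import math

def simplex_etf(num_classes):
    """Rows are K unit vectors with pairwise cosine -1/(K-1)."""
    k = num_classes
    scale = math.sqrt(k / (k - 1))
    return [[scale * ((1.0 if i == j else 0.0) - 1.0 / k) for j in range(k)]
            for i in range(k)]

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))

etf = simplex_etf(4)
row_norm = math.sqrt(sum(a * a for a in etf[0]))
pairwise_cosine = cosine(etf[0], etf[1])  # equals -1/(K-1) for every pair
```

Every pair of prototypes has the same cosine, which is the most negative value that K vectors can share.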

-

Neural Collapse in Deep Linear Network: From Balanced to Imbalanced Data. [paper]

- Hien Dang, Tan Nguyen, Tho Tran, Hung Tran, Nhat Ho.

- Key Word: Neural Collapse; Imbalanced Learning.

-

Digest

We take a step further and prove that Neural Collapse occurs in deep linear networks for the popular mean squared error (MSE) and cross entropy (CE) losses. Furthermore, we extend our research to imbalanced data for MSE loss and present the first geometric analysis for Neural Collapse under this setting.

-

Principled and Efficient Transfer Learning of Deep Models via Neural Collapse. [paper]

- Xiao Li, Sheng Liu, Jinxin Zhou, Xinyu Lu, Carlos Fernandez-Granda, Zhihui Zhu, Qing Qu.

- Key Word: Neural Collapse; Transfer Learning.

-

Digest

This work delves into the mystery of transfer learning through an intriguing phenomenon termed neural collapse (NC), where the last-layer features and classifiers of learned deep networks satisfy: (i) the within-class variability of the features collapses to zero, and (ii) the between-class feature means are maximally and equally separated. Through the lens of NC, our findings for transfer learning are the following: (i) when pre-training models, preventing intra-class variability collapse (to a certain extent) better preserves the intrinsic structures of the input data, so that it leads to better model transferability; (ii) when fine-tuning models on downstream tasks, obtaining features with more NC on downstream data results in better test accuracy on the given task.
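Within-class variability collapse can be monitored with a simple statistic. The sketch below computes the ratio of within-class to between-class variance in trace form, which is a simplified proxy rather than the exact metric used in the paper (the neural collapse literature often uses a pseudo-inverse form instead). The features and labels are hypothetical.

```python
def within_between_ratio(features, labels):
    """Ratio of within-class to between-class variance (trace form)."""
    dim = len(features[0])
    global_mean = [sum(f[d] for f in features) / len(features)
                   for d in range(dim)]
    within = 0.0
    between = 0.0
    for c in sorted(set(labels)):
        members = [f for f, y in zip(features, labels) if y == c]
        mean_c = [sum(f[d] for f in members) / len(members)
                  for d in range(dim)]
        within += sum((f[d] - mean_c[d]) ** 2
                      for f in members for d in range(dim))
        between += len(members) * sum((mean_c[d] - global_mean[d]) ** 2
                                      for d in range(dim))
    return within / between

labels = [0, 0, 1, 1]
spread = [[1.0, 0.5], [1.0, -0.5], [-1.0, 0.5], [-1.0, -0.5]]
collapsed = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [-1.0, 0.0]]

ratio_spread = within_between_ratio(spread, labels)
ratio_collapsed = within_between_ratio(collapsed, labels)
```

A ratio near zero indicates strongly collapsed features; larger values indicate that intra-class structure has been preserved.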

-

Perturbation Analysis of Neural Collapse. [paper]

- Tom Tirer, Haoxiang Huang, Jonathan Niles-Weed.

- Key Word: Neural Collapse.

-

Digest

We propose a richer model that can capture the inexact collapse observed in practical networks by forcing the features to stay in the vicinity of a predefined features matrix (e.g., intermediate features). We explore the model in the small vicinity case via perturbation analysis and establish results that cannot be obtained by the previously studied models.

-

Imbalance Trouble: Revisiting Neural-Collapse Geometry. [paper]

- Christos Thrampoulidis, Ganesh R. Kini, Vala Vakilian, Tina Behnia.

- Key Word: Neural Collapse; Class Imbalance.

-

Digest

Neural Collapse refers to the remarkable structural properties characterizing the geometry of class embeddings and classifier weights, found by deep nets when trained beyond zero training error. However, this characterization only holds for balanced data. Here we thus ask whether it can be made invariant to class imbalances. Towards this end, we adopt the unconstrained-features model (UFM), a recent theoretical model for studying neural collapse, and introduce Simplex-Encoded-Labels Interpolation (SELI) as an invariant characterization of the neural collapse phenomenon.

-

Neural Collapse: A Review on Modelling Principles and Generalization. [paper]

- Vignesh Kothapalli, Ebrahim Rasromani, Vasudev Awatramani.

- Key Word: Neural Collapse.

-

Digest

We analyse the principles which aid in modelling such a phenomenon from the ground up and show how they can build a common understanding of the recently proposed models that try to explain NC. We hope that our analysis presents a multifaceted perspective on modelling NC and aids in forming connections with the generalization capabilities of neural networks. Finally, we conclude by discussing the avenues for further research and propose potential research problems.

-

Do We Really Need a Learnable Classifier at the End of Deep Neural Network? [paper]

- Yibo Yang, Liang Xie, Shixiang Chen, Xiangtai Li, Zhouchen Lin, Dacheng Tao.

- Key Word: Neural Collapse.

-

Digest

We study the potential of training a network with the last-layer linear classifier randomly initialized as a simplex ETF and fixed during training. This practice enjoys theoretical merits under the layer-peeled analytical framework. We further develop a simple loss function specifically for the ETF classifier. Its advantage gets verified by both theoretical and experimental results.

-