This is an Instant-NGP renderer implemented in Taichi and written entirely in Python. No CUDA! This repository implements only the rendering part of NGP, but it is simpler and has less code than the originals (Instant-NGP and tiny-cuda-nn).

Clone this repository and install the required packages:

`git clone https://github.com/Linyou/taichi-ngp-renderer.git`

`python -m pip install -r requirement.txt`

This repository implements only the forward part of Instant-NGP, which includes:

- Ray intersection with the bounding box: `ray_intersect()`
- Ray marching strategy: `raymarching_test_kernel()`
- Spherical harmonics encoding for ray direction: `dir_encode()`
- Hash table encoding for 3D coordinates: `hash_encode()`
- Fully fused MLP using shared memory: `sigma_layer()`, `rgb_layer()`
- Volume rendering: `composite_test()`
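To make the first step concrete, here is a minimal pure-Python sketch of a ray/axis-aligned-box test using the standard slab method. It only illustrates the idea behind `ray_intersect()`; the function name `ray_aabb_intersect`, its arguments, and the return convention are assumptions for this example and are not taken from the repository's Taichi kernel.

```python
import math

def ray_aabb_intersect(origin, direction, center, half_size):
    """Slab test. Returns (t_near, t_far) along the ray, or None on a miss.

    Hypothetical helper for illustration; not the repository's kernel.
    """
    # Start t_near at 0 so that hits behind the ray origin are ignored.
    t_near, t_far = 0.0, math.inf
    for o, d, c, h in zip(origin, direction, center, half_size):
        lo, hi = c - h, c + h
        if d == 0.0:
            # Ray is parallel to this pair of planes: it can only hit
            # the box if the origin already lies between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Intersect this slab's interval with the running interval.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near, t_far

hit = ray_aabb_intersect((0.0, 0.0, -2.0), (0.0, 0.0, 1.0),
                         (0.0, 0.0, 0.0), (0.5, 0.5, 0.5))
miss = ray_aabb_intersect((2.0, 0.0, -2.0), (0.0, 0.0, 1.0),
                          (0.0, 0.0, 0.0), (0.5, 0.5, 0.5))
print(hit, miss)
```

Ray marching then only needs to sample between `t_near` and `t_far`, which is why this test comes first in the pipeline.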

However, there are some differences compared to the original:

- Taichi is currently missing the `frexp()` method, so I have to use a hard-coded scale of 0.5. I will update the code once Taichi supports this function.
- Instead of a single kernel as in tiny-cuda-nn, this repo uses separate kernels, `sigma_layer()` and `rgb_layer()`, because the shared memory size that Taichi currently allows is 48KB, as issue #6385 points out. This could be improved in the future.
- tiny-cuda-nn uses TensorCore for `float16` multiplication, which is not accessible from Taichi, so I directly convert all the data to `ti.float16` to speed up the computation.
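For readers unfamiliar with `frexp()`, the sketch below shows what it computes, using Python's standard `math.frexp` as the reference: a float `x` is split into a mantissa `m` with `0.5 <= |m| < 1` and an integer exponent `e` such that `x == m * 2**e`. The helper `frexp_via_log2` is hypothetical and written only for this illustration; it is not what the repository does (the repository uses the hard-coded scale described above).

```python
import math

def frexp_via_log2(x):
    """Emulate math.frexp for finite floats using log2 and floor.

    Hypothetical helper for illustration only.
    """
    if x == 0.0:
        return 0.0, 0
    e = math.floor(math.log2(abs(x))) + 1
    m = x / (2.0 ** e)
    # Guard against log2 rounding right next to a power of two.
    if abs(m) >= 1.0:
        m, e = m / 2.0, e + 1
    elif abs(m) < 0.5:
        m, e = m * 2.0, e - 1
    return m, e

for value in (0.3, 0.75, 1.0, 5.5, 8.0, -5.5):
    print(value, math.frexp(value), frexp_via_log2(value))
```

The exponent is the useful part for a renderer, since it identifies which power-of-two scale a value falls into.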

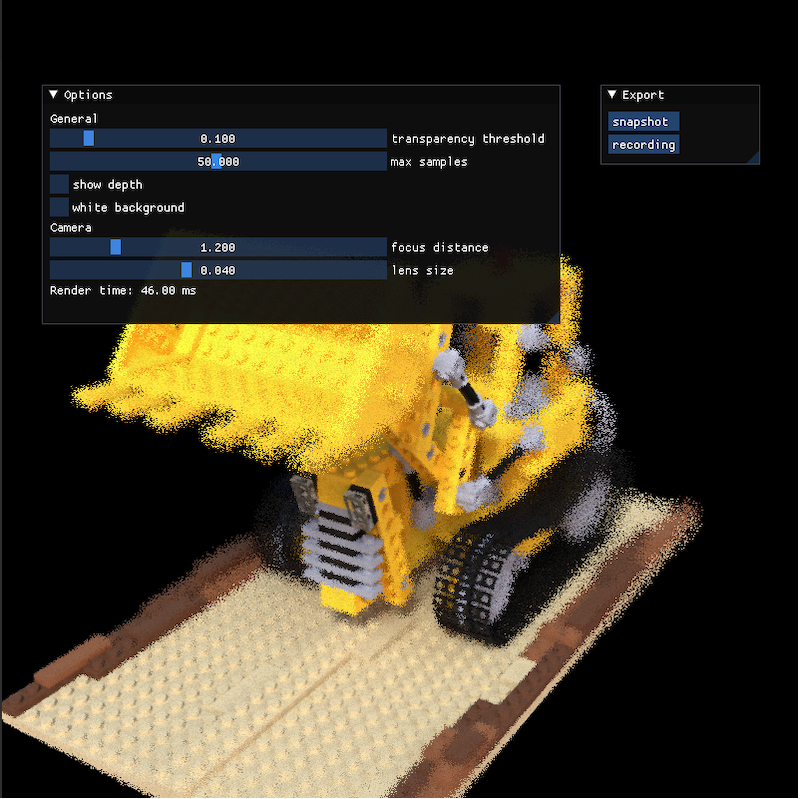

This code supports real-time rendering with GUI interaction using less than 1GB of VRAM. Here is the functionality that the GUI offers:

- Camera:

- keyboard and mouse control

- DoF

- Rendering:

- different resolution

- the number of samples for each ray

- transparency threshold (Stop ray marching)

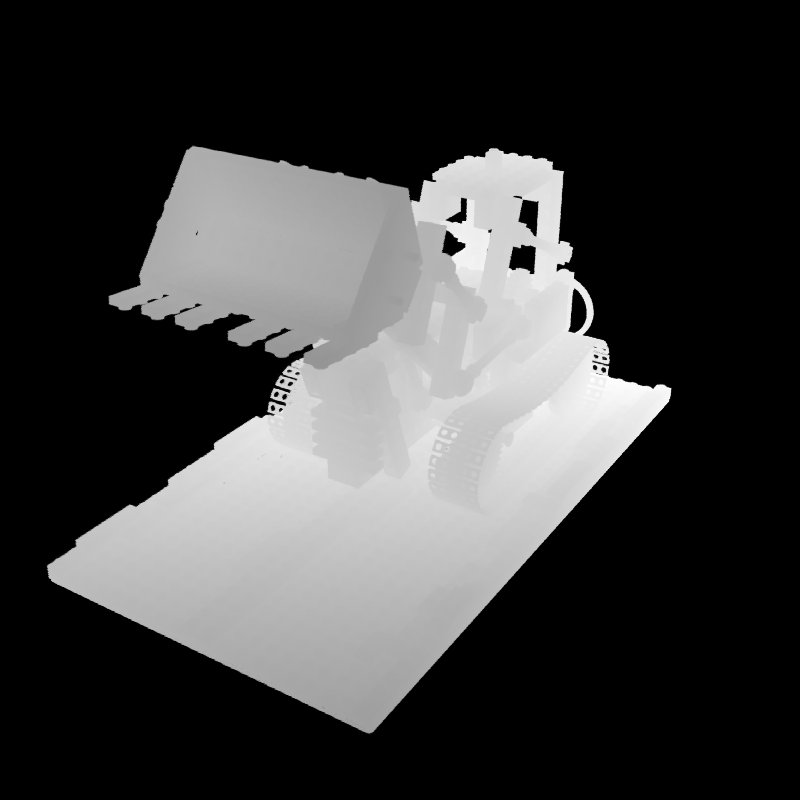

- show depth

- Export:

- Snapshot

- Video recording (Required ffmpeg)
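The transparency threshold controls early termination during volume rendering. The following pure-Python sketch shows standard front-to-back alpha compositing for a single ray, stopping once the remaining transmittance drops below a threshold. The function name `composite_ray`, the argument layout, and the default threshold of `1e-4` are assumptions for this example, not values taken from `composite_test()`.

```python
import math

def composite_ray(sigmas, rgbs, deltas, t_threshold=1e-4):
    """Front-to-back compositing of samples along one ray.

    sigmas: densities, rgbs: (r, g, b) tuples, deltas: step lengths.
    Hypothetical helper; the threshold default is an assumed value.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    used = 0
    for sigma, rgb, delta in zip(sigmas, rgbs, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
        used += 1
        if transmittance < t_threshold:
            # The ray is effectively opaque; later samples cannot
            # change the pixel noticeably, so stop marching.
            break
    opacity = 1.0 - transmittance
    return color, opacity, used

color, opacity, used = composite_ray(
    sigmas=[1000.0, 1000.0],
    rgbs=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[1.0, 1.0],
)
print(color, opacity, used)
```

A larger threshold stops rays sooner and saves samples at the cost of accuracy, which is the trade-off the GUI control exposes.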

The GUI runs at up to 66 fps on a 3090 GPU at 800 $\times$ 800 resolution (default pose).

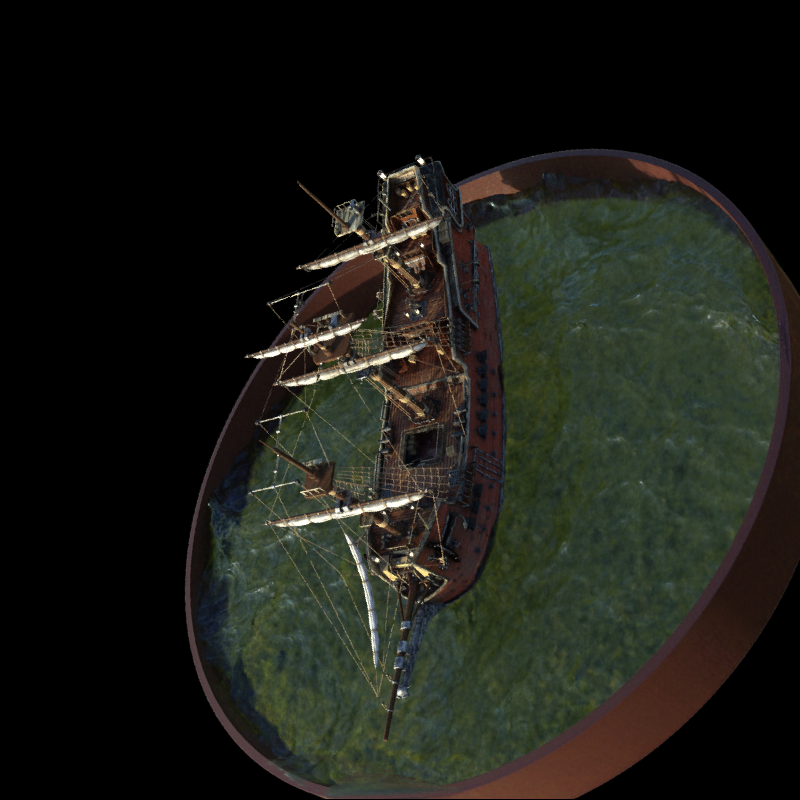

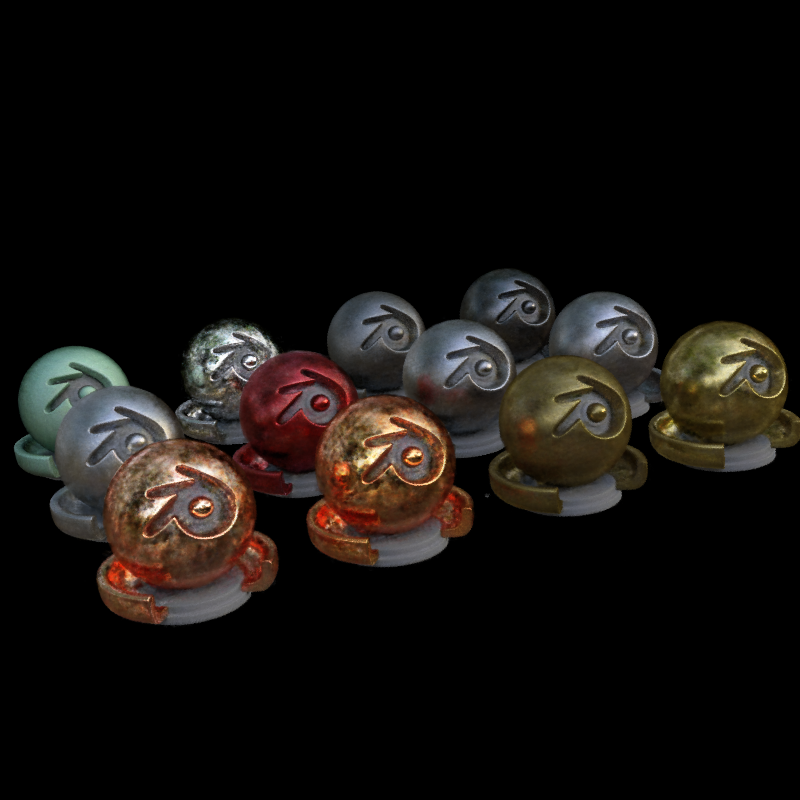

Run `python taichi_ngp.py --gui --scene lego` to start the GUI. This repository provides eight pre-trained scenes from the NeRF synthetic dataset: Lego, Ship, Mic, Materials, Hotdog, Ficus, Drums, and Chair.

Running `python taichi_ngp.py --gui --scene <name>` will automatically download the pre-trained model `<name>` into the `./npy_file` folder. Please check the arguments in `taichi_ngp.py` for more options.

You can train a new scene with ngp_pl and save the PyTorch model to NumPy format using `np.save()`. After that, use the `--model_path` argument to specify the model file.
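As a rough sketch of the export step, the snippet below saves a dictionary of NumPy arrays with `np.save()` and reads it back. The key names, shapes, and file name are hypothetical placeholders; the layout that `taichi_ngp.py` actually expects is not described here, so check its loading code before relying on this. For a real PyTorch model, the dictionary would typically be built from the state dict, for example `{k: v.cpu().numpy() for k, v in model.state_dict().items()}`.

```python
import os
import tempfile

import numpy as np

# Hypothetical parameter names and shapes standing in for a trained model.
params = {
    "hash_table": np.random.rand(16, 2).astype(np.float16),
    "sigma_weights": np.random.rand(32, 64).astype(np.float16),
    "rgb_weights": np.random.rand(64, 3).astype(np.float16),
}

path = os.path.join(tempfile.mkdtemp(), "my_scene.npy")

# np.save stores a dict as a pickled 0-d object array.
np.save(path, params)

# Loading it back therefore needs allow_pickle=True, and .item()
# unwraps the 0-d array into the original dict.
loaded = np.load(path, allow_pickle=True).item()
print(sorted(loaded.keys()))
```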

Many thanks to the incredible projects that have been open-sourced to the community.

Todo:

- Support Vulkan backend

- Refactor to separate modules

- Support real scenes

...