- My notes from UC San Diego MicroMasters Program, Algorithms and Data Structures and all the readings that I made in parallel (see references below)

- My anki flashcards:

- Anki flashcards package: 34 cards

- Install Anki

- Prerequisites

- Algorithm Design and Techniques

- Data Structures Fundamentals

- Graph Algorithms

- NP-Complete Problem

- String Processing and Pattern Matching Algorithms

- Dynamic Programming: Applications In Machine Learning and Genomics

- Problem Solving Patterns

- References

Proof by Induction

- It allows to prove a statement about an arbitrary number n by:

- 1st proving it's true when n is 1 and then

- assuming it's true for n = k and showing it's true for n = k + 1

- For more details

Proofs by contradiction

- It allow to prove a proposition is valid (true) by showing that assuming the proposition to be false leads to a contradiction

- For more details

Logarithms

- See this

Iterated logarithm

- It's symbolized: log∗(n):

- It's the number of times the logarithm function needs to be applied to n before the result is ≤ 1

-

Log*(n) = 0 if n ≤ 1 = 1 + Log* (Log (n)) if n > 1 -

n Log*(n) n = 1 0 n = 2 1 n ∈ {3, 4} 2 n ∈ {5,..., 16} 3 n ∈ {17, ..., 65536} 4 n ∈ {65537,..., 2^65536} 5

Recursion

- Stack optimization and Tail Recursion:

-

It's is a recursion where the recursive call is the final instruction in the recursion function

-

There should be only one recursive call in the function

-

It's exempted from the system stack overhead

-

Since the recursive call is the last instruction, we can skip the entire chain of recursive calls returning and return straight to the original caller

-

This means that we don't need a call stack at all for all of the recursive calls, which saves us space

-

Example of non tail recursion: ` def sum_non_tail_recursion(ls): if len(ls) == 0: return 0

//# not a tail recursion because it does some computation after the recursive call returned. return ls[0] + sum_non_tail_recursion(ls[1:])`

-

Example of a tail recursion: ` def sum_tail_recursion(ls): def helper(ls, acc): if len(ls) == 0: return acc //# this is a tail recursion because the final instruction is a recursive call. return helper(ls[1:], ls[0] + acc)

return helper(ls, 0)`

-

- Backtracking:

- Unfold Recursion:

- It's the process of converting a recursion algorithm to non-recursion one

- It's usually required because of

- Risk of Stackoverflow

- Efficiency: memory consumption + additional cost of function calls + sometimes duplicate calculations

- Complexity: some recursive solution could be difficult to read. For example, nested recursion

- We usually use a data structure of stack or queue, which replaces the role of the system call stack during the process of recursion

- For more details:

- To Get Started

- Tail Recursion

- Geeks of Geeks Tail Recursion

- Geeks of Geeks Tail Recursion Elimination

- Python Tail Recusion

- Unfold Recursion

T(n)

- It's the number of lines of code executed by an algorithm

Permutation and Combination

- This is required for time and space analysis for backtracking (recursive) problems

- Permutation: P(n,k):

P(n,k) = n! / (n - k)!- We care about the different

kitems that are choosen and the order they're chosen:123is different from321 - E.g. Number of different numbers of 3 digits without repitited digit (leading 0 are included)

P(10,3) = 10! / 7! = 10 x 9 x 8 = 720

- Combination: C(n,k):

C(n,k) = n! / k! * (n - k)!- We only care about the

kitems that are choosen out ofn:123is similar than321and231 - In other words, all permutations of a group of

kitems form the same combination - Therefore, combination of

kitems out ofnis the number of permutations (n, k) divided by all permutations (k, k):C(n, k) = P(n, k) / P(k, k) - E.g. Number of different numbers of 3 digits with increasing digits (leading 0 are included): 123, 234

C(10,3) = 10! / 3 ! 7! = 10 x 3 x 4 = 120

- For more details:

- Khan Academy: Counting, permutations, and combinations

Test Cases

- Boundary values

- Biased/Degenerate tests cases

- They're particular in some sense

- See example for each data structure below

- Randomly generated cases and large dataset:

- It's to check random values to catch up cases we didn't think about

- It's also to check how long it takes to process a large dataset

- Implement our program as a function solve(dataset)

- Implement an additional procedure generate() that produces a random/large dataset

- E.g., if an input to a problem is a sequence of integers of length 1 ≤ n ≤ 10^5, then:

- Generate a sequence of length 10^5,

- Pass it to our solve() function, and

- Ensure our algorithm outputs the result quickly: we could measure the duration time

- Stress testing:

- Implement a slow but simple and correct algorithm

- Check that both programs produce the same result (this is not applicable to problems where the output is not unique)

- Generate random test cases as well as biased tests cases

- When dealing with numbers:

- Scalability: think about number size: Int. Long, ... or should we store them in a string ?

- Think about number precision?

- If there is any division: division by 0?

- Integers Biased cases: Prime/Composite numbers; Even/Odd numbers; positive/negative numbers

- When dealing with String:

- Scalability:

- Size of the string? Could the whole string be read to the memory?

- Size of each character (related to encoding)? E.g. For ASCII, 1 character = 1B

- Biased/Degenerate tests:

- Empty string

- A strings that contains a sequence of a single letter (“aaaaaaa”) or 2 letters ("abbaabaa") as opposed to those composed of all possible Latin letters

- Encoding (ASCII, UTF-8, UTF-16)?

- Special characters

- Scalability:

- When dealing with arrays/lists:

- Scalability:

- Size of the array? Could the whole array be read to the memory?

- Type of the array items? Range ot the values? small range? large numbers?

- Biased/Degenerate tests:

- It's empty

- It's contains duplicates

- It contains same elements: min value only (0 for integers), max value only (2^32 for integers), any specific value

- It contains only small numbers or a small range of large numbers

- It contains a few elements: 1, 2

- It contains many elements: 10^6

- Sorted/unsorted array

- Scalability:

- When dealing with Trees:

- Biased/Degenerate tests: a tree which consists of a linked list, binary trees, stars

- When dealing with Graphs:

- Biased/Degenerate tests: a graph which consists of a linked list, a tree, a disconnected graph, a complete graph, a bipartite graph

- It contains loops (it's not a simple graph)

- It contains parallel edges (multiple edges between same vertices)

- It's a directed or undirected graph

Big O vs. Big-Ω vs. Big-Θ

- Big-Ω (Omega):

- Big-O:

- Big-Θ (Theta):

- A function f grows at same rate as a function g

- If f = Ω(g) and f = O(g)

- E.g., f(n) = n^2 + n = Θ(n^2) because n^2 ≤ f(n) ≤ n^2 for k ≥ 1

- It's used in the industry as Big-O

- Small-o:

- A function f is o(g) if f(n)/g(n) → 0 as n → ∞

- f grows slower than g

- It's NOT used in the industry

- For more details

- Khan Academy: Asymptotic Notation

Time Complexity

- It describes the rate of increase of an algorithm

- It describes how an algorithm scales when input grows

- Big-O notation is used

- It's also called Asymptotic runtime:

- It's only asymptotic

- It tells us about what happens when we put really big inputs into the algorithm

- It doesn't tell us anything about how long it takes

- Its hidden constants:

- They're usually moderately small, and therefore, we have something useful

- They're could be big

- Sometimes an algorithm A with worse Big-O runtime than algorithm B:

- Algorithm A is worse asymptotically on very large inputs than algorithm B does

- But algorithm A is better for all practical sizes and very large inputs couldn't be stored

- E.g., O(1), O(n), O(n^2) , O(Log n), O(n log n), O(2^n)

- Data structure and theirs related algorithms time complexity

Space Complexity

- It describes the amount of memory - or space - required for an algorithm

- It useful to compare the performance of algorithms

- Input size from which an algorithm will experience unsufficient memory (RAM) and start using Disk lookups

- Big O notation and concept are used

Big-O Rules

- Drop the constants: we'll use O(n) instead of O(2 n)

- Drop the Non-Dominant Terms: we'll use O(n^2) instead of O(n^2 + n) or O(n) instead of O(n + log n)

- Multi-Part Algorithms - Add: O(A+B)

- When an algorithm is in the form

- Do A,

- When you're done, Do B

- Multi-Part Algorithms - Multiply: O(A*B)

- When an algorithm is in the form:

- Do B for each time you do A

- Amortized Time

- When the worst case happens once a while

- But once it happens, it won't happen again for so long that the cost is "amortized"

- E.g., insert in a dynamic resizing array (an array list):

- It's implemented with an array

- When the array hits its capacity, it will create a new array with double the capacity and copy all the elements over to the new array

- Insert time complexity in expected case (the array isn't full): O(1)

- Insert time complexity in worst case (the array is full): O(n)

- Insert time complexity for n inserts: O(n) (for n expected cases) + O(w * n) (w worst cases): O((w+1)n) = O(n)

- The amortization time for each insertion (the time complexity for n inserts divided by n): O(1)

- Log n runtimes: O(log n)

- It when the number of elements in a problem space is halved each time (or divided by n)

- E.g. Dichotomic search: search in a sorted array

- The base of Log(n) isn't import

- E.g. O(Log2 n) = O(Log3 n) = O(Log n)

- Recursive Runtimes, a recursive algorithm usually is defined by:

- Its depth: n and the number of times each recursive call branches (itself).

- Time complexity: O(branchesNbr^n)

- Space complexity: O(n)

- E.g., Fibonacci Recursive time complexity: O(2^n)

- E.g., Fibonacci Space complexity: O(n): because only O(N) nodes exist at any given time

- The base of an Exponent:

- Log(8^n) is completely different than Log(2^n)

Algorithm Design

- Reading problem statement:

- The problem statement specifies the input-output format, the constraints for the input data as well as time and memory limits

- Our goal is to implement a fast program that solves the problem and works within the time and memory limits

- Question inputs:

- String: Encoding (ASCII, UTF-8, UTF-16)?, Special characters?

- Number: Size (Int. Long, ...)? Precision, Rounding?

- Build your intuition:

- In progress

- Designing an algorithm:

- When the problem statement is clear, start designing an algorithm and

- Don’t forget to prove that it works correctly

- Don't forget to estimate its expected running time:

- E.g.

-

Time Complexity: O(n^2) O(n log n) Machine ops: 10^9 10^9 n: 10^5 10^5 Estimated Time: > 10s (10^10/10^9) < 1 ms (10^5*log(10^5)/10^9)

- Implementing an algorithm:

- After you developed an algorithm, start implementing it in a programming language

- Testing and debugging your program

- Testing is the art of revealing bugs

- 1st start with simple test cases:

- Small dataset

- Make sure our program produces correct results

- 2nd check degenerate cases: see test cases section above

- 3rd check boundary values: see test cases section above

- 4th check randomly generated cases

- 5th check large dataset: see test cases section above

- 6th finish with stress testing: see test cases section above

General Approaches

- Tournament approach:

- To find the kth largest number in an array, compare each paire of 2 elements together

- compare(elem 0, elem 1), compare(elem 2, elem 3)...

- O(n + log(n) − 2)

- Euclidean Algorithm

Greedy Algorithms

- Greedy Strategy:

- 1. Make a greedy choice

- 2. Prove that it is a safe choice

- 3. Reduce to a subproblem

- 4. Solve the subproblem (Iterate)

- E.g. Problem, Queue of Patients:

- n patients have come to the doctor’s office at same time

- Ti is the time needed for treatment of the i-th patient

- They can be treated in any order

- Output: Arrange the patients in such a queue that the total waiting time is minimized

- E.g. Solution:

- Make a greedy choice: choose the patient (Pi) with the smallest treatment time (with the minimum Ti)

- Prove that it's a safe choice

- Reduce to a smaller problem: remove Pi from the queue

- Iterate: Treat all the remaining patients in such order as to minimize their total waiting time as if there wasn't 1st patient

- Subproblem

- It's a similar problem of smaller size

- Minimum total waiting time for n patients = (n − 1) · T min + minimum total waiting time for n − 1 patients without T min

- Min total waiting time for n = 4 partients: (15, 10, 25, 20) = (4 - 1) * 10 + Min total waiting time for (15, 25, 20)

- Safe Choice:

- It's a greedy choice which there's an optimal solution consistent with this 1st choice

- It requires to prove that a greedy choice is safe

- E.g. Queue of Patients:

- If we prove that there's an optimal solution that starts with treating a patient with the minimum treatment time

- Therefore such a choice is a safe choice

- However, if we choose a patient with the maximum treatment time, there's not an optimal solution that starts with it

- Therefore such a choice isn't a safe choice

- E.g. Fractional Knapsack (or Backpack) Problem:

- N items with total weight and total value (Wi, Vi)

- A Backpack with a capacity W

- Goal: Maximize value ($) while limiting total weight (kg)

- It's possible to take fraction of items

- Item 1: (6, $30), Item 2 (3, $14), Item 3 (4, $16), Item 4 (2, $9)

- Knapsack capacity: 10

- Value per Unit: Item 1: $5; Item2: $4.66; Item3: $4; Item4: $4.5

- Solution: 6 * $5 + 3 * $4.666 + 1 * $4.5 (fraction of item4) = $48.5

Divide and Conquer

- Divide: Break into non-overlapping subproblems of the same type

- Conquer:

- Solve subproblems: each one indepently of the others

- Combine results

- Implementation: it's often implemented with a recursive algorithm

- Calculate its Time Complexity:

- Define a corresponding recurrence relation, T

- It's an equation recursively defining a sequence of values

- For Linear Search T(n) = T(n - 1) + c; T(0) = c

- c is the runtime for a constant amount of work: checking high vs. low indexes; if A[low] == key); preparing the parameters for the recursive call

- T(0) is the runtime for the base case of the recursion (empty array): checking high vs. low indexes, returning not found

- For Binary Search T(n) = T(n/2) + c; T(0) = c

- Determine worst-case runtime, T(n) from the recurrence relation

- Look at the recursion tree

- For Linear Search T(n) = T(n - 1) + c = T(n - 2) + 2 * c = n * c = T(n) = Θ(n)

- For Binary Search T(n) = T(n/2) + c = T(n/2^2) + 2 * c = T(n/2^3) + 3 * c = Θ(log2 n) = Θ(log n)

- We could use the Master Theorem for divide-and-conquer recurrences:

- Express the time complexity as:

T(n) = a T(n/b) + n^d - If

a > b^d,T(n) = O(n^log_b(a)): Split problem + combine results work is small than subproblems work - If

a = b^d,T(n) = O(n^d logn) - If

a < b^d,T(n) = O(n^d): Split problem + combine results work is outweights the subproblems work - The conditions for each case correspond to the intuition of whether the work to split problems and combine results outweighs the work of subproblems

- Express the time complexity as:

- We could also compute it as below:

- Let's take the example of a binary search in an sorted array:

-

Level: level in the recursion tree #Problems: the number of problems at a given recursion level Work: the running time at each level T(n) = Sum of all Works(i = 0 .. log n) = log_2_n * O(c) = O(c * log_2_n) For integers, T(n) = O(log_2_n) since the running time to compare 2 integers is O(1) For strings, T(n) = O(|S| log_2_n) |S| is the length of the string that we're searching

level | Recursion Tree | # Problems | Level_Work = #_Problem * Level_T(n) 0 | n | 1 | 1 * O(c) is the running time for the comparison array[mid] == our_value 1 | n/2 | 1 | 1 * O(c) 2 | n/4 | 1 | 1 * O(c) ... | ... | ... | ... i | n/2**i | 1 | 1 * O(c) ... | ... | ... | ... log_2_n | 1 | 1 | 1 * O(c)

- Define a corresponding recurrence relation, T

- Optionally, create iterative solution

- It allows to save space

- For more details:

- Binary Search

- Merge Sort

- Quick Sort

- It's more efficient in practice than Merge Sort

- Average Time Complexity: O(n log n)

- Time Complexity in the worst case: O(n^2)

- Course Material

- Quick Sort on khanacademy

- Deterministic and Randomized Quicksort

- 3 way partition Quick Sort

- Quick Sort Tail Recursion

- Geeks of Geeks Quick Sort Recursive Tail Elimination

- [Quick Sort with deterministic pivot selection heuristic]:

- The pivot could be the median of the 1st, middle, and last element

- If the recursion depth exceeds a certain threshold c log n, the algorithm switches to heap sort

- It's a simple but heuristic approach:: it's not guaranteed to be optimal

- The time complexity is: O(n log n) in the worst case

Dynamic Programming

- It's Frequently used for optimization problems: finding best way to do something

- It's typically used when brute-force solution is to enumerate all possibilities:

- May not know which subproblems to solve, so we solve many or all!

- Reduce number of possibilities by:

- Finding optimal solutions to subproblems

- Avoiding non-optimal subproblems (when possible)

- Frequently gives a polynomial algorithm for brute force exponential one

- It's like Divide and Conquer:

- General design technique

- Uses solutions to subproblems to solve larger problems

- Difference: Dynamic Programming subproblems typically overlap

- It's an alternative for Recursive algorithms:

- Recursive algorithms may be not efficient: they could do a compute several times

- E.g. Money change problem MinCoin(40 cents) in Tanzania:

- MinCoin(40s) = 1 + Min( MinCoin(40c - 1c), MinCoin(40c - 5c), MinCoin(40c - 10c), MinCoin(40c - 20c), MinCoin(40c - 25c))

- MinCoin(20c) is computed at least 4 times: MinCoin(40c - 1c), MinCoin(40c - 5c), MinCoin(40c - 10c), MinCoin(40c - 20c)

- It's an alternative for Greedy Algorithms:

- When there is not a safe choice

- E.g.1, Money change problem MinCoin(40 cents) in US:

- US coins <<< 40c: 1c, 5c, 10c, 25c

- A Greedy choice: take the max coin such that coin <<< 40c

- Result: 3 coins: 40c = 1 * 25c + 1 * 10c + 1 * 5c

- Here this choice is safe

- E.g.2, Money change problem MinCoin(40 cents) in Tanzania:

- Tanzanian coins <<< 40c: 1c, 5c, 10c, 20c, 25c

- A greedy choice: take the max coin such that the coin <<< 40c

- Result: 3 coins: 40c = 1 * 25c + 1 * 10c + 1 * 5c

- Here this choice isn't safe: 40c = 2 * 20c

- Steps:

- Express a solution mathematically

- Cut and Paste Trick Dynamic Programming:

- Cut and paste proof: optimal solution to problem must use optimal solution to subproblem: otherwise we could remove suboptimal solution to subproblem and replace it with a better solution, which is a contradiction

- For more details

- Express a solution recursively

- Either develop a bottom up algorithm:

- Find a bottom up algorithm to find the optimal value

- Find a bottom up algorithm to construct the solution

- Or develop a memoized recursive algorithm

- Express a solution mathematically

- Alignment game (String Comparison):

- Remove all symbols from 2 strings in such a way that the number of points is maximized:

- Remove the 1st symbol from both strings: 1 point if the symbols match; 0 if they don't

- Remove the 1st symbol from one of the strings: 0 point

- E.g.,:

-

A T G T T A T A => A T - G T T A T A A T C G T C C => A T C G T - C - C +1+1 +1+1 = +4

-

- Sequence Alignment:

- It's a 2-row matrix

- 1st row: symbols of the 1st string (in order) interspersed by "-"

- 2nd row: symbols of the 2nd string (in order) interspersed by "-"

- E.g.:

-

A T - G T T A T C A T C G T - C - C ^-Del ^--Insert. - Alignment score:

- Premium (+1) for every match

- Penalty (-μ) for every mismatch

- Penatly (-σ) for every indel (insertion/deletion)

- E.g.:

- A T - G T T A T A A T C G T - C - C +1+1-1+1+1-1-0-1+0 = +1

- Optimal alignment:

- Input: 2 strings, mismatch penatly μ, and indel penalty σ

- Output: An alignment of the strings maximizing the score

- Common Subsequence: Matches in an alignment of 2 strings form their common subsequence

- E.g.

-

A T - G T T A T C A T C G T - C - C AT G T (ATGT) is a common subsequence

- Longest common subsequence:

- Input: 2 strings

- Output: A longest common subsequence of these strings

- It corresponds to highest alignment score with μ = σ = 0 (maximizing the score of an alignment)

- Edit distance

- Input: 2 strings

- Output: the minimum number of operations (insertions, deletions, and substitutions of symbols) to transform one string into another

- It corresponds to the minimum number of mismatches and indels in an alignment of 2 strings (among all possible alignments)

- E.g.:

-

E D I - T I N G - - D I S T A N C E ^-Del ^-Ins.----^ - Minimizing edit distance = Maximizing Alignment score

- Let D(i,j) be the edit distance of an i-prefix A[1... i] and a j-prefix B[1.... j]

- D(i,j) = MIN(D(i,j-1) + 1, D(i-1,j) + 1, D(i-1,j-1) + 1) if A[i] <> B[j] OR

- D(i,j) = MIN(D(i,j-1) + 1, D(i-1,j) + 1, D(i-1,j-1)) if A[i] = B[j]

- **Reconstructing an Optimal Alignment:

- It could be done by backtracking pointers that are stored in the edit distance computation matrix

- Discrete Knapsack problem:

-

N items with total weight Wi (Kg) and total value Vi ($)

-

A Backpack with a capacity W

-

Each item is either taken or not

-

Goal: Maximize value ($) while limiting total weight (kg)

-

Discrete Knapsack with unlimited repetitions quantities:

- Input: Weights (W1,..., Wn) and values (V1,..., Vn) of n items; total weight W (Vi’s, Wi’s, and W are non-negative integers)

- Output: The maximum value of items whose weight doesn't exceed W

- Each item can be used any number of times

- Recursive Relation: max-value(w) = max { val(w - wi) + vi} i: wi < w

-

E.g. Item 1 (6, $30), Item 2 (3, $14), Item 3 (4, $16), Item 4 (2, $9) Knapsack capacity: 10 Solution: 6 ($30) + 2 ($9) + 2 ($9) = $48 W : 0 1 2 3 4 5 6 7 8 9 10 max value: 0 0 9 14 18 23 30 32 39 44 48 max-val(W = 2) = val(2) + max-val(0) = 9 max-val(W = 3) = max(val(3) + max-val(0), val(2) + max-val(1)) = 14 max-val(W = 4) = max(val(3) + max-val(1), val(4) + max-val(0), val(2) + max-val(2)) = 14 max-val(W = 5) = max(val(3) + max-val(2), val(4) + max-val(1), val(2) + max-val(3)) = 23 max-val(W = 6) = max(val(6) + max-val(0), val(3) + max-val(3), val(4) + max-val(2), val(2) + max-val(4)) = 30 max-val(W = 7) = max(val(6) + max-val(1), val(3) + max-val(4), val(4) + max-val(2), val(2) + max-val(4)) = 32 max-val(W = 8) = max(val(6) + max-val(2), val(3) + max-val(5), val(4) + max-val(4), val(2) + max-val(6)) = 39 max-val(W = 9) = max(val(6) + max-val(3), val(3) + max-val(6), val(4) + max-val(5), val(2) + max-val(7)) = 44 max-val(W = 10) = max(val(6) + max-val(4), val(3) + max-val(7), val(4) + max-val(6), val(2) + max-val(8)) = 48 Greedy Algorithm doesn't work: 6 ($30) + -

Discret_Knapsack_With_Repitions(W): max_value(0) = 0 for w in range(1, W): max_value(w) = 0 for i in range(len(Weights) - 1): if wi ≤ w: candidate_max_val = max_value(w − wi) + vi if candidate_max_val > max_value(w): max_value(w ) = candidate_max_val return max_value(W)

-

Discrete Knapsack without repititions (one of each item):

- Input: Weights (W1,..., Wn) and values (V1,..., Vn) of n items; total weight W (Vi’s, Wi’s, and W are non-negative integers)

- Output: The maximum value of items whose weight doesn't exceed W

- Each item can be used at most once

- Recursive Relation: max_value (w, i) = max{ max_value(w - wi, i - 1) + vi, max_value(w, i - 1)}

-

E.g. Item 1 (6, $30), Item 2 (3, $14), Item 3 (4, $16), Item 4 (2, $9) Knapsack capacity: 10 Solution: 6 ($30) + 4 ($16) = $46 i/W: 0 1 2 3 4 5 6 7 8 9 10 0 0 0 0 0 0 0 0 0 0 0 0 1(6) 0 0 0 0 0 0 30 30 30 30 30 2(3) 0 0 0 14 14 14 30 30 30 44 44 3(4) 0 0 0 14 16 16 30 30 30 44 46 4(2) 0 0 9 14 16 23 30 30 39 44 46 -

Discret_Knapsack_Without_Repitions(W) initialize all value(0, j) = 0 initialize all value(w , 0) = 0 for i from 1 to n: for w from 1 to W : value(w , i) = value(w , i − 1) if wi ≤ w: val = value(w - wi, i - 1) + vi if value(w, i) < val: value(w , i) = val return value(W , n)

-

Greedy Algorithm fails:

- Item1 (6, $30), Item2 (3, $14), Item3 (4, $16), Item4 (2, $9)

- Value per Unit: Item 1: $5; Item2: $4.66; Item3: $4; Item4: $4.5

- 6 ($30) + 3 ($14) = 9 items ($44)

- taking an element of maximum value per unit of weight is not safe!

-

Running time: O(nW)

- It's called pseudo polynomial, but not just polynomial

- The catch is that the input size is proportional to logW, rather than W

- To further illustrate this, consider the following two scenarios:

-

1. The input consists of m objects (say, integers) 2. The input is an integer m They look similar, but there is a dramatic difference. Assume that we have an algorithm that loops for m iterations. Then, in the 1st. case it is a polynomial time algorithm (in fact, even linear time), whereas in the 2nd. case it's an exponential time algorithm. This is because we always measure the running time in terms of the input size. In the 1st. case the input size is proportional to m, but in the 2nd. case it's proportional to logm. Indeed, a file containing just a number “100000” occupies about 7 bytes on your disc while a file containing a sequence of 100000 zeroes (separated by spaces) occupies about 200000 bytes (or 200 KB). Hence, in the 1st. case the running time of the algorithm is O(size), whereas in the 2nd. case the running time is O(2size).

-Let’s also consider the same issue from a slightly different angle:

-

Assume that we have a file containing a single integer 74145970345617824751. If we treat it as a sequence of m=20 digits, then an algorithm working in time O(m) will be extremely fast in practice. If, on the other hand, we treat it as an integer m=74145970345617824751, then an algorithm making m iterations will work for: 74145970345617824751109/(10^9 * 60 * 60 * 24 * 365) ≈ 2351 years assuming that the underlying machine performs 10^9 operations per second

-

- Related Problems:

- House Robber

- Interactive Puzzle: Number of Paths

- Interactive Puzzle: Two Rocks Game

- Interactive Puzzle: Three Rocks Game

- Interactive Puzzle: Primitive Calculator

- Money Change II

- Money Change III

- Primitive Calculator

- Computing the Edit Distance Between 2 Strings

- Longest Common Subsequence of 2 Sequences I

- Longest Common Subsequence of 2 Sequences II

- Longest Common Subsequence of 3 Sequences

- Global Alignment of 2 sequences

- Manhattan Tourist Problem - Length of a Longest Path in a Grid

- Maximum Amount of Gold

- Cutting a Rod

- Partitioning Souvenirs

- Maximizing the Value of an Arithmetic Expression

- Longest Path in a DAG

- LC-309. Best Time to Buy and Sell Stock with Cooldown

- For more details:

- Course material

- Advanced dynamic programming lecture notes by Jeff Erickson

- How Do We Compare Biological Sequences? by Phillip Compeau and Pavel Pevzner

- Money change problem: Greedy vs. Recursive vs. Dynamic Programming

- Geeksforgeeks Dynamic Programming

- Dynamic Programming

- Leetcode Post: Dynamic Programming Patterns

- For more details:

- Visualization: DP Making Change

- Visualization: Edit Distance calculator

- Visualization: DP Longest Common Subsequence

- DP for competitive Programmer's Core Skills

- Why is the knapsack problem pseudo-polynomial?

Arrays

- It's a contiguous area of memory

- It's consisting of equal-size elements indexed by contiguous integers

- 1-D Array: accessing array[i] consists of accessing the memory address: array_addr + elem_size × (i − first_index)

- 2-D Array:

- It could be laid out in Row-Major order:

- Its 2nd index (column) changes most rapidly

- Its elements are laid out as follow: (1,1), (1,2), (1,3), ..., (2,1), (2,2),...

- Accessing [i][j] consists of accessing the memory address: array_addr + elem_size × [row_lenth * (i − 1st_row_index) + (j − 1st_column_index)]

- It could be laid out in Column-Major order:

- Its 1st index (row) changes most rapidly

- Its elements are laid out as follow: (1,1), (2,1), (2,1), ..., (1,2), (2,2),...

- Accessing [i][j] consists of accessing the memory address: array_addr + elem_size × [column_lenth * (j − 1st_column_index) + (i − 1st_row_index)]

- It could be laid out in Row-Major order:

- Time Complexity and Operations:

-

Read Remove Add Beginning: O(1) O(n) O(n) End: O(1) O(1) O(1) Middle: O(1) O(n) O(n)

-

- Programming Languages:

- Python: there is no static array data structure

- For more details:

Linked Lists

- Singly-Linked List

-

APIs Time (wout tail) Time (w tail) Description PushFront(Key): O(1) Aadd to front Key TopFront(): O(1) Return front item PopFront(): O(1) Remove front item PushBack(Key): O(n) O(1) Add to back Key TopBack(): O(n) O(1) Return back item PopBack(): O(n) Remove back item Boolean Find(Key): O(n) Is key in list? Erase(Key): O(n) Remove key from list Boolean Empty(): O(1) Empty list? AddBefore(Node, Key): O(n) Adds key before node AddAfter(Node, Key): O(1) Adds key after node

-

- Doubly-Linked List:

- Its node consists of a key, a pointer to the next node and a pointer to the previous node

-

APIs Time (wout tail) Time (w tail) PushFront(Key): O(1) Key TopFront(): O(1) PopFront(): O(1) PushBack(Key): O(n) O(1) Key TopBack(): O(n) O(1) PopBack(): O(n) O(1) Boolean Find(Key): O(n) Erase(Key): O(n) Boolean Empty(): O(1) AddBefore(Node, Key): O(1) AddAfter(Node, Key): O(1)

- Related Problems:

- For more details:

Stacks

- LIFO: Last-In First-Out

- It could be implemented with an array:

- We should keep track of the latestest element pushed index which is different from its capacity

len(array) -

Push(key): if max-index + 1 < len(array): max-index += 1 array[max-index] = key -

Top(): if max-index ≥ 0: return array[max-index] -

Pop(): if max-index ≥ 0: value = array[max-index] max-index -= 1 return value -

Empty(): return max-index == -1

- We should keep track of the latestest element pushed index which is different from its capacity

- It could be implemented with a Singly-Linked-List:

-

Push(key): list.PushFront(Key) -

Top(): return list.TopFront() -

Pop(): return list.PopFront() -

Empty(): return list.Empty()

-

-

Time Complexity: Array Imp. Singly-Linked List Comment Push(key): Θ(1) Θ(1) Key Top(): Θ(1) Θ(1) Key Pop(): Θ(1) Θ(1) Space Complexity: Θ(n) Θ(2 * n) = O(n) Linked-List uses more space because of the pointers - Programming Languages:

- Python:

Listcollections.dequequeue.LifoQueue- For more details

- Python:

- Related Problems:

- For more details:

- UC San Diego Course

- Visualization: Implementation with an Array

- Visualization: Implementation with a Linked List

Queues

- It could be implemented with an array:

- We should have a circular array

- We should keep track of the latestest inserted element index (we'll use it for reads):

read-index - We should keep track of the most recent inserted element index(we'll use it for writes):

write-index - Initially:

read-index == write-index == 0 -

Empty(): return (read-index == write-index) -

Full(): return (read-index == write-index + 1) -

Enqueue(key): if Not Full(): array[write-index] = key write-index = write-index + 1 if write-index < len(array) - 1 else 0 -

Dequeue(): if Not Empty(): value = array[read-index] read-index = read-index + 1 if read-index < len(array) - 1 else 0 return value

- It could be implemented with a Doubly-Linked-List with a tail:

- The list head will be used for reads

- The list writes will be used for writes

-

Empty(): return list.Empty() -

Enqueue(key): list.PushBack(Key) -

Dequeue(): list.PopFront()

-

Time Complexity: Array Imp. Singly-Linked List Comment Push(key): Θ(1) Θ(1) Key Top(): Θ(1) Θ(1) Key Pop(): Θ(1) Θ(1) Space Complexity: Θ(n) Θ(2 * n) = O(n) Linked-List uses more space because of the pointers - Programming Languages:

- Python:

Listcollections.dequeclassqueue.Queueclass- More details

- Python:

- Related Problems:

- For more details:

- UC San Diego Course

- Visualization: Implementation with an Array

- Visualization: Implementation with a Linked List

Trees

- It is empty, or a node with a key, and a list of child trees

- Terminology:

- A Root: top node in the tree

- A child has a line down directly from a parent

- An Ancestor is a parent, or a parent of parent, etc.

- Descendant is a child, or a child of child, etc.

- A Sibling is sharing the same parent

- A Leaf is a node without children

- An Interior node is a node that isn't a leaf

- An Edge is a link between two nodes

- A Level:

- 1 + number of edges between a tree root and a node

- E.g., The root node is level 1

- A Height:

- It's the maximum depth of subtree node and its farthest leaf

- It could be calculated by counting the number of nodes or edges

- A Forest is a collection of trees

- Walking a Tree:

- Depth-First (DFS): To traverse one sub-tree before exploring a sibling sub-tree

- Breadth-First (BFS): To traverse all nodes at one level before progressing to the next level

- A Binary Tree:

- It's a tree where each node has 0, 1, or 2 children

- DFS types:

- In Order Traversal of a node: InOrderTraversal of its Left child; Visit node; InOrderTraversal of its Right child

- Pre Order Traversal of a node: Visit node; PreOrderTraversal of its Left child; PreOrderTraversal of its Right child

- Post Order Traversal of a node: PostOrderTraversal of its Left child; PostOrderTraversal of its Right child; Visit node

- A Complete Binary Tree:

- It's a binary tree in which all its levels are filled except possibly the last one which is filled from left to right

- Its height is Low: it's at most O(log n) (n is nbr of nodes)

- It could be stored effeciently as an array

- A Full Binary Tree:

- It's also called Proper Binary Tree or 2-tree

- It's a tree in which every node other than the leaves has 2 children

- Its height is Low: it's equal to log n

- It could be stored effeciently as an array

- Related Problems:

- For more details:

Dynamic Arrays

- It's also known as Resizable array

- It's a solution for limitations of static arrays and dynamically-allocated arrays (see below):

- It can be resized at runtime

- It stores (implementation):

- Arr: dynamically-allocated array

- Capacity: size of the dynamically-allocated array

- Size: number of elements currently in the array

- When an element is added to the end of the array and array's size and capacity are equal:

- It allocates a new array

- New Capacity = Previous Capacity x 2

- Copy all elements from old array to new array

- Insert new element

- New Size = Old Size + 1

- Free old array space

- Time Complexity and Operations:

-

Time Complexity Get(i): O(1) Set(i, val): O(1) PushBack(val): O(1) or O(n): O(n) when size = capacity; O(1) otherwise (amortized analysis) Remove(i): O(1) Size(): O(1)

-

- Programming Languages:

- Python: list (the only kind of array)

- C++: vector

- Java: ArrayList

- Static array:

- it's static!

- It requires to know its size at compile time

- Problem: we might not know max size when declaring an array

- Dynamically-allocated arrays:

- int *my_array = new int[ size ]

- It requires to know its size at runtime

- Problem: we might not know max size when allocating an array

- More details:

Amortized Analysis

- Amortized cost:

- Given a sequence of n operations,

- The amortized cost is: Cost(n operations) / n

- Methods to calculate amortized cost:

- The Aggregate method:

- It calculates amortized cost based on amortized cost definition

- E.g. Dynamic Array: n calls to PushBack

- Let ci = cost of i-th insertion

-

_ i - 1 if i - 1 is a power of 2 / ci = 1 + | \ _ 0 otherwise n ⌊log 2 (n−1)⌋ Amortized Cost = ∑ ci / n = (n + ∑ 2^j ) / n = O(n)/n = O(1)

- The Banker's Method: it consists of:

- Charging extra for each cheap operation

- Saving the extra charge as tokens in our data structure (conceptually)

- Using the tokens to pay for expensive operations

- It is like an amortizing loan

- E.g. Dynamic Array: n calls to PushBack:

-

- Charge 3 for each insertion:

- Use 1 token to pay the cost for insertion;

- Place 1 token on the newly-inserted element

- Plase 1 token on the capacity / 2 elements prior

-

- When Resize is needed:

- Use 1 token To pay for moving the existing elements (all token in the array will dispear)

- When all old array elements are moved to the new array, insert new element (go to 1)

- The Physicist's Method: it consists of:

- Defining a *potential function, Φ which maps states of the data structure to integers:

Φ(h0 ) = 0Φ(ht ) ≥ 0

- Defining a *potential function, Φ which maps states of the data structure to integers:

- Amortized cost for operation t:

ct + Φ(ht) − Φ(ht−1) - Choose Φ so that:

- if ct is small, the potential increases

- if ct is large, the potential decreases by the same scale

- The sum of the amortized costs is:

-

n n Φ(hn) − Φ(h0) + ∑ ci ≥ ∑ ci i=0 i=0 - E.g. Dynamic Array: n calls to PushBack:

- Let

Φ(h) = 2 × size − capacityΦ(h0) = 2 × 0 − 0 = 0Φ(hi) = 2 × size − capacity > 0(since size > capacity/2)

- Calculating Amortized cost for operation i (adding element i):

ci + Φ(hi) − Φ(hi−1):- Without resize:

-

ci = 1; Φ(hi) = 2 * (k + 1) - c Φ(hi-1) = 2 * k - 2 - c ci + Φ(hi) − Φ(hi−1) = 1 + 2 * k - c - 2 * k + 2 + c = +3 - With resize:

-

ci = k + 1; Φ(hi) = 2 * (k + 1) - 2 * k = 2 since there is a resize, the array capacity is doubled Φ(hi-1) = 2 * k - k = k since before the resize, the array capacity is equal to the array size ci + Φ(hi) − Φ(hi−1) = k + 1 + 2 - k = +3

- The Aggregate method:

- Related Problems:

- More details:

Priority Queues: Max/Min Heap

- Max Heap:

- It's a binary tree where the value of each node is at least the values of its children

- For each edge of the tree, the value of the parent is at least the value of the child

- Min Heap:

- It's a binary tree where the value of each node is at most the values of its children

- Implementation, Time Complexity and Operations:

- An efficient implementation is a Complete Binary Tree in an Array

-

Operations 0-based index 1-based index array Parent(i): ⌊ i / 2 ⌋ ⌊ i / 2 ⌋ Leftchild(i): 2 * i + 1 2 * i Rightchild(i): 2 * i + 2 2 * i + 1 -

Time Complexity Comment GetMax(): O(1) or GetMin() ExtractMax(): O(log n) n is the nodes # (or ExtractMin) Insert(i): O(log n) SiftUp(i): O(log n) SiftDown(i): O(log n) ChangePriority(i): O(log n) Remove(i): O(log n)

- Programming Languages:

- Python:

- Lib/heapq.py

- Description

- Git

- C++:

- Java:

- Python:

- For more details:

- UC San Diego Course: Overview & Naive Implementations

- UC San Diego Course: Binary Heaps

Priority Queues: Heap Sort

- It's an improvement of selection sort:

- Instead of simply scanning the rest of the array to find the maximum value

- It uses the heap data structure

- In place algorithm to sort an array (A) with a Heap Sort:

- Step 1: Turn the array A[] into a heap by permuting its elements

- We repair the heap property going from bottom to top

- Initially, the heap property is satisfied in all the leaves (i.e., subtrees of depth 0)

- We then start repairing the heap property in all subtrees of depth 1

- When we reach the root, the heap property is satisfied in the whole tree

-

BuildHeap(A[1 ... n]) for i from ⌊n/2⌋ downto 1: SiftDown(i)- Space Complexity: O(1) (In place algorithm)

- Time Complexity: O(n)

-

Height Nodes # T(SiftDown) T(BuildHeap) 1 1 log(n) 1 * log(n) 2 2 log(n) - 1 2 * [log(n) - 1] ... ... ... ... log(n) - 2 ≤ n/8 3 n/8 * 3 log(n) - 1 ≤ n/4 2 n/4 * 2 log(n) ≤ n/2 1 n/2 * 1 T(BuildHeap) = n/2 * 1 + n/4 * 2 + n/8 * 3 + ... + 1 * log(n) = n [1/2 + 2/4 + 2/8 + ... + log(n)/2^log(n)] < n Σ i/2^i (i goes to the infinity) = n * 2 = O(n) i=1

- Step 2: Sort the Heap

-

HeapSort(A[1 . . . n]) BuildHeap(A) repeat (n − 1) times: swap A[1] and A[size] size = size − 1 SiftDown(1)- Space Complexity: O(1) (In place algorithm)

- Time Complexity: O(n long n)

- Step 1: Turn the array A[] into a heap by permuting its elements

- Use cases:

- It's used for external sort when we need to sort huge files that don’t fit into memory of our computer

- In opposite of QuickSort which is usually used in practice because typically it is faster

- IntraSort algorithm:

- It's a sorting algorithm

- It 1st runs QuickSort algorithm (Avergae Running time: O(n log n); Worst Running time: O(n^2))

- If it turns out to be slow (the recursion depths exceed c log n, for some constant c),

- Then it stops the current call to QuickSort algorithm and switches to HeapSort algorithm (Guaranteed Running time: O(n log n))

- It's a QuickSort algorithm with worst running time: O(n log n)

- Partial Sorting:

- Input: An array A[1 . . . n], an integer k: 1 ≤ k ≤ n

- Output: The last k elements of a sorted version of A

-

PartialSort(A[1 . . . n], k) BuildHeap(A) for i from 1 to k: print(A.ExtractMax()) - BuildHeap Running Time: O(n)

- Printing: the last k elements of a sorted version of A: O(k * log n)

- Running time: O(n + k log n)

- if k = O(n / log n) => Running time = O(n)

- E.g. Printing the last 102 elements of a sorted version of an array of 1024 elements:

- It takes a linear time

- if n = 1024 = 2^10 then k = 2^10 / log 2^10 = 1024 / 10 = 102

- It's used for external sort when we need to sort huge files that don’t fit into memory of our computer

- Related problems:

- For more details:

- UC San Diego Course: Overview & Naive Implementations

- UC San Diego Course: Binary Heaps

Priority Queues: d-ary Heap

- In a d-ary heap nodes on all levels have exactly d children except for possibly the last one

- Its height is about: Log_d n

- Implementation, Time Complexity and Operations:

- An efficient implementation is a Complete D-ary Tree in an Array

-

Operations: 0-based index 1-based index array Parent(i): ⌊ i / d ⌋ ⌊ i / d ⌋ 1st child(i): d * i + 1 d * i 2nd child(i): d * i + 2 d * i + 1 ... ... ... d-th child(i): d * i + d d * i + d - 1 -

Time Complexity Comment GetMax(): O(1) or GetMin() ExtractMax(): O(d * Log_d n) See running time of SiftDown Insert(i): O(Log_d n) SiftUp(i): O(Log_d n) On each level, there is only 1 comparison: child vs. parent SiftDown(i): O(d * Log_d n) On each level, there are d comparisons among d children ChangePriority(i): O(d * Log_d n) Remove(i): O(d * Log_d n)

Disjoint Sets

- It's also called Union-Find data structure

- It's a data structure that keeps track of a set of elements partitioned into a number of disjoint (non-overlapping) subsets

- A 1st efficient implementation is Union by Rank Heuristic:

- It consists of a Tree in 2 Arrays

- Each set is a rooted tree

- The ID of a set is the root of the tree

- Array 1: Parent[1 ... n], Parent[i] is the parent of i, or i if it is the root

- Array 2: Rank[1 ... n], Rank[i] = height of subtree which root is i, rank of the tree's root = 0

- MakeSet(i):

- It creates a singleton set {i}

- It consists of a tree with a single node: parent[i] = i

- Find(i):

- It returns the ID of the set that is containing i

- It consists of the root of the tree where i belongs

- Union(i, j):

- It merges 2 sets containing i and j

- It consists of merging 2 trees

- For effenciency purposes, it must keep the resulting tree as shallow as possible

- It hang the shorter tree under the root of the longer one (we'll use rank array here)

- The resulted tree height = the longer tree height if the 2 trees height are different

- The resulted tree height = the height of one of the trees + 1 if the 2 trees height are equal:

-

Time Complexity MakeSet(x): O(1) Find(x): O(tree height) = O(log n) Union(x, y): O(tree height) = O(log n)

- A 2nd more efficient implementation is Path Compression Heuristic:

- We keep the same data structure as the Union by rank heuristic implementation

- When finding the root of a tree for a particular node i, reattach each node from the traversed path to the root

- From an initially empty disjoint set, we make a sequence of m operations including n calls to MakeSet:

- The total running time is O(m log∗(n))

- The Amortized time of a single operation is: O(log∗(n))

-

Time Complexity MakeSet(x): O(1) Find(x): O(log*(n)) = O(1) if n ≤ 2^65536 Union(x, y): O(log*(n)) = O(1) if n ≤ 2^65536 - For more details about log*(n), see Prerequisites

- Programming Languages:

- Python:

- C++:

- Java:

- Use Cases:

- Keep track of the connected compoents of an undirected graph

- To determine whether 2 vertices belong to the same component

- To determine whether adding an edge between 2 vertices would result in a cycle

- Kruskal's algorithm:

- It's used to find the minimum spanning tree of a graph

- For more details

- In a maze (a grid with walls): Is a given cell B reachable from another given cell A?

- Build disjoint sets where each non-wall cell represent a singleton set for each cell c in maze: if c isn't a wall MakeSet(c)

- Modify disjoint sets above so that if a path between A and B exists, then A and B are in the same set for each cell c in maze: for each neighbor n of c: Union(c, n)

- Check is a path between A and B exists: IsReachable(A, B) return Find(A) = Find(B)

- Building a Network:

- Keep track of the connected compoents of an undirected graph

- Related Problems:

- For more details:

- UC San Diego Course: Overview & Naive Implementations

- UC San Diego Course: Efficient Implementations

- Tutorial

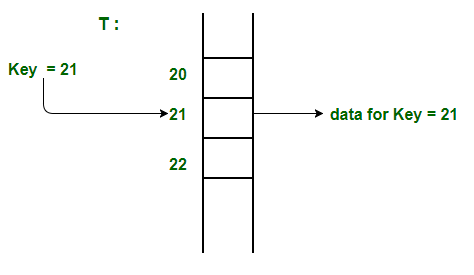

Hashing: Introduction

- A Hash Function:

- It's a function that maps a set of keys from U to a set of integers: 0, 1, ..., m − 1

- In other words, it's a function such that for any key k from U and any integer m > 0, a function

h(k) : U → {0, 1, ... , m − 1} - A key Universe, U

- It's the set U of all possible keys

|U|is the universe size

- A hash Cardinality:

- It's

m - It's the # of different values of the hash function

- It's also the size of the table where keys will be stored

- It's

- A Collision

- It happens when

h(k1) = h(k2)andk1 != k2

- It happens when

- Direct Addressing:

- It's the simplest form of hashing

- It's a data structure that has the capability of mapping records to their corresponding keys using arrays

- Its records are placed using their key values directly as indexes

- It doesn't use a Hashing Function:

-

Time Complexity GetDate(key): O(1) Insert(key, data): O(1) Delete(key): O(1) -

Space Complexity Direct Addressing Table: O(|U|) even if |U| <<< actual size

-

- Limitations:

- It requires to know the maximum key value (|U|) of the direct addressing table

- It's practically useful only if the key maximum value is very less

- It causes a waste of memory space if there is a significant difference between the key maximum value and records #

- E.g., Phone Book:

- Problem: Retrieving a name by phone number

- Key: Phone number

- Data: Name

- Local phone #: 10 digits

- Maximum key value: 999-999-9999 = 10^10

- It requires to store the phone book as an array of size 10^10

- Each cell store a phone number as a long data type: 8 bytes + a name of 12 size long (12 bytes): 20 bytes

- The size of the array will be then: 20 * 10^10 = 2 * 10^11 = 2 * 2^36.541209044 = 2^30 * 2^8.541209044 = 373 GB

- It requires 373 GB of memory!

- What about international #: 15 digits

- It would require a huge array size

- It would take 7 PB to store one phone book

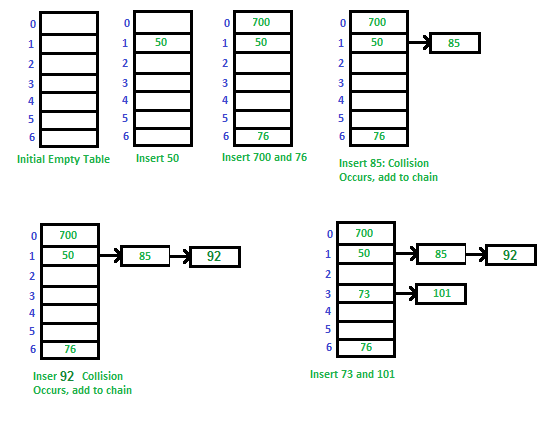

- Chaining:

- It's an implementation technique used to solve collision issues:

- It uses an array

- Each value of the hash function maps a Slot in the array

- Each element of the array is a doubly-linked list of pairs (key, value)

- In case of a collision for 2 different keys, their pairs are stored in a linked list of the corresponding slot

nis the number of keys stored in the array:n ≤ |U|cis the length of the longest chain in the array:c ≥ n / m- The question is how to come up with a hash function so that the space is optimized (m is small) and the running time is efficient (c is small)

- Space worst case:

c = n: all values are stored in the same slot - Space best case:

c = n / m: keys are evenly distributed among all the array cells - See Universal Familly

- Load Factor, α:

α = n / m- If α is too small (

α <<< 1), there isn't lot of collisions but the cells of the array are empty: we're wasting space - If α > 1, there is at least 1 collision

- If α is too big, there are a lot of collisions, c is too long and the operations will be too slow

- It's an implementation technique used to solve collision issues:

- Open Addressing:

- It's an implementation technique used to solve collisions issue

- The Principle of Built-in Hash Table: the typical design of built-in hash table is:

- Each hash table slot contains an array to store all the values in the same bucket initially.

- If there are too many values in the same slot, these values will be maintained in a height-balanced binary search tree instead.

- The average time complexity of both insertion and search is still O(1).

- The worst case time complexity is O(logN) for both insertion and search by using height-balanced BST

- For more details:

- UC San Diego Course: Introduction to Hashing

- UC San Diego Course: Hash Function

- Geeks for Geeks: Hashing: Introduction

- Geeks for Geeks: Direct Address Table

- Geeks for Geeks: Hashing: Chaining

- Geeks for Geeks: Hashing: Open Addressing

- Geeks for Geeks: Address Calculation Sort using Hashing

Hashing: Universal Family

- It's a collection of hash functions such that:

H = { h : U → {0, 1, 2, ... , m − 1} }- For any 2 keys

x, y ∈ U, x != ythe probability of collisionPr[h(x) = h(y)] ≤ 1 / m - It means that a collision for any fixed pair of different keys happens for no more than 1 / m of all hash functions h ∈ H

- All hash functions in H are deterministic

- How Randomization works:

- To Select a random function h from the family H:

- It's the only place where we use randomization

- This randomly chosen function is deterministic

- To use this Fixed h function throughout the algorithm:

- to put keys into the hash table,

- to search for keys in the hash table, and

- to remove the keys from the hash table

- Then, the average length of the longest chain

c = O(1 + α) - Then, the average running time of hash table operations is

O(1 + α)

- To Select a random function h from the family H:

- Choosing Hash Table Size:

- Ideally, load factor 0.5 < α < 1:

- if α is very small (α ≤ 0.5), a lot of the cells of the hash table are empty (at least a half)

- If α > 1, there is at least one collision

- If α is too big, there're a lot of collisions, c is too long and the hash table operations are too slow

- To Use O(m) = O(n/α) = O(n) memory to store n keys

- Operations will run in time O(1 + α) = O(1) on average

- Ideally, load factor 0.5 < α < 1:

- For more details:

- UC San Diego Course: Hash Function

Hashing: Dynamic Hash table

- Dynamic Hash table:

- It's good when the number of keys n is unknown in advance

- It resizes the hash table when α becomes too large (same idea as dynamic arrays)

- It chooses new hash function and rehash all the objects

- Let's choose to Keep the load factor below 0.9 (

α ≤ 0.9);-

Rehash(T): loadFactor = T.numberOfKeys / T.size if loadFactor > 0.9: Create Tnew of size 2 × T.size Choose hnew with cardinality Tnew.size For each object in T: Insert object in Tnew using hnew T = Tnew, h = hnew - The result of the rehash method is a new hash table wit an α == 0.5

- We should call Rehash after each operation with the hash table

- Single rehashing takes O(n) time,

- Amortized running time of each operation with hash table is: O(1) on average, because rehashing will be rare

-

- For more details:

- UC San Diego Course: Hash Function

Hashing: Universal Family for integers

- It's defined as follow:

-

Hp = { hab(x) = [(a * x + b) mod p] mod m } for all a, b p is a fixed prime > |U|, 1 ≤ a ≤ p − 1, 0 ≤ b ≤ p − 1 - Question: How to choose p so that mod p operation = O(1)

- H is a universal family for the set of integers between 0 and p − 1:

|H| = p * (p - 1):- There're (p - 1) possible values for a

- There're p possible values for b

- Collision Probability:

- if for any 2 keys x, y ∈ U, x != y:

Pr[h(x) = h(y)] ≤ 1 / m

- if for any 2 keys x, y ∈ U, x != y:

- How to choose a hashing function for integers:

- Identify the universe size: |U|

- Choose a prime number p > |U|

- Choose hash table size:

- Choose m = O(n)

- So that 0.5 < α < 1

- See Universal Family description

- Choose random hash function from universal family Hp:

- Choose random a ∈ [1, p − 1]

- Choose random b ∈ [0, p − 1]

- For more details:

- UC San Diego Course: Hash Function

Hashing: Polynomial Hashing for string

- It convert all its character S[i] to integer:

- It uses ASCII, Unicode

- It uses all the characters in the hash function because otherwise there will be many collisions

- E.g., if S[0] is not used, then h(“aa”) = h(“ba”) = ··· = h(“za”)

- It uses Polynomial Hashing:

-

|S| Pp = { hx(S) = ∑ S[i] * x^i mod p } for all x i = 0 p a fixed prime, |S| the length of the string S x (the multiplier) is fixed: 1 ≤ x ≤ p − 1 - Pp is a universal family:

|Pp| = p - 1:- There're (p - 1) possible values for x

-

PolyHash(S, p, x) hash = 0 for i from |S| − 1 down to 0: hash = (hash * x + S[i]) mod p return hash E.g. |S| = 3 hash = 0 hash = S[2] mod p hash = S[1] + S[2] * x mod p hash = S[0] + S[1] * x + S[2] * x^2 mod p - How to store strings in a hash table:

- 1st, apply random hx from Pp

- 2nd, hash the resulting value again using universal family for integers, hab from Hp

hm(S) = hab(hx(S)) mod m- Collision Probability:

- For any 2 different strings s1 and s2 of length at most L + 1,

- if we choose h from Pp at random (by selecting a random x ∈ [1, p − 1]),

- The probability of collision:

Pr[h(s1) = h(s2)] ≤ 1/m + L/p - If p > m * L,

Pr[h(s1) = h(s2)] ≤ O(1/m)

- How to choose a hashing function for strings:

- Identify the max length of the strings: L + 1

- Choose a hash table size:

- Choose m = O(n)

- So that 0.5 < α < 1

- See Universal Family description

- Choose a prime number such that

p > m * L - Choose a random hash function for integers from universal family Hp:

- Choose a random a ∈ [1, p − 1]

- Choose a random b ∈ [0, p − 1]

- Choose a random hash function for strings from universal family Pp

- Choose a random x ∈ [1, p − 1]

- E.g., Phone Book 2:

- Problem: Design a data structure to store phone book contacts: names of people along with their phone numbers

- The following operation must be fast: Call person by name

- Solution: To implement Map from names to phone numbers

- Use Cases:

- Rabin-Karp's Algorithm uses Polynomial Hashing to find patterns in strings

- Java class String method hashCode:

- It uses Polynomial Hashing

- It uses x = 31

- It avoids the (mod p) operator for technical reasons

- For more details:

- UC San Diego Course: Hash Function

Hashing: Maps

- It's a data structure that maps keys from set S of objects to set V of values:

- It's implemented with chaining technique

chain (key, value) ← Array[hash(key)]

- It has the following methods:

-

Time Complexity Comment HasKey(key): Θ(c + 1) = O(1 + α) Return if object exists in the map Get(key): Θ(c + 1) = O(1 + α) Return object if it exists else null Set(key, value): Θ(c + 1) = O(1 + α) Update object's value if object exists else insert new pair (object, value) If n = 0: Θ(c + 1) = Θ(1) If n != 0: Θ(c + 1) = Θ(c) Maps hash function is universal: c = n/m = α Space Complexity Θ(m + n) Array size (m) + n pairs (object, value)

-

- E.g., Phone Book:

- Problem: Retrieving a name by phone number

- Hash Function:

- Select hash function h of cardinality m, let say, 1 000 (small enough)

- For any set of phone # P, a function h : P → {0, 1, . . . , 999}

- h(phoneNumber)

- A Map:

- Create an array Chains of size m, 1000

- Chains[i] is a doubly-linked list of pairs (name, phoneNumber)

- Pair(name, phoneNumber) goes into a chain at position h(phoneNumber) in the array Chains

- To look up name by phone number, go to the chain corresponding to phone number and look through all pairs

- To add a contact, create a pair (name, phoneNumber) and insert it into the corresponding chain

- To remove a contact, go to the corresponding chain, find the pair (name, phoneNumber) and remove it from the chain

- Programming Languages:

- Python: dict

- C++: unordered_map

- Java: HashMap

- For more details:

- UC San Diego Course: Hash Function

Hashing: Sets

- It's a data structure that implements the mathematical concept of a finite Set:

- It's usually used to test whether elements belong to set of values (see methods below)

- It's implemented with chaining technique:

- It could be implemented with a map from S to V = {true}; the chain pair: (object, true); It's costly: "true" value doesn't add any information

- It's actually implemented To store just objects instead of pairs in the chains

- It has the following methods:

-

Time Complexity Comment Add(object): Θ(c + 1) = O(1 + α) Add object to the set if it does exit else nothing Remove(object): Θ(c + 1) = O(1 + α) Remove object from the set if it does exist else nothing Find(object): Θ(c + 1) = O(1 + α) Return True if object does exist in the set else False If n = 0: Θ(c + 1) = Θ(1) If n != 0: Θ(c + 1) = Θ(c) Sets hash function is universal: c = n/m = α Space Complexity Θ(m + n) Array size (m) + n objects

-

- Programming Languages:

- Python: set

- C++: unordered_set

- Java: HashSet

- For more details:

- UC San Diego Course: Hash Function

Binary Search Tree (BST)

- It's a binary tree data stucture with the property below:

- Let's X a node in the tree

- X’s key is larger than the key of any descendent of its left child, and

- X's key is smaller than the key of any descendent of its right child

- Implementation, Time Complexity and Operations:

- Time Complexity: O(height)

-

Operations: Description: Find(k, R): Return the node with key k in the tree R, if exists the place in the tree where k would fit, otherwise Next(N): Return the node in the tree with the next largest key the LeftDescendant(N.Right), if N has a right child the RightAncestor(N), otherwise LeftDescendant(N): RightAncestor(N): RangeSearch(k1, k2, R): Return a list of nodes with key between k1 and k2 Insert(k, R): Insert node with key k to the tree Delete(N): Removes node N from the tree: It finds N N.Parent = N.Left, if N.Right is Null, Replace N by X, promote X.Right otherwise

- Balanced BST:

- The height of a balanced BST is at most: O(log n)

- Each subtree is half the size of its parent

- Insertion and deletion operations can destroy balance

- Insertion and deletion operations need to rebalance

- Related problems:

- For more details:

- UC San Diego Course: BST Basic Operations

- UC San Diego Course: Balance

AVL Tree

- It's a Balanced BST:

- It keeps balanced by maintaining the following AVL property:

- For all nodes N,

|N.Left.Height − N.Right.Height| ≤ 1

- It keeps balanced by maintaining the following AVL property:

- Implementation, Time Complexity and Operations:

- Time Complexity: O(log n)

- Insertion and deletion operations can destroy balance:

- They can modify height of nodes on insertion/deletion path

- They need to rebalance the tree in order to maintain the AVL property

- Steps to follow for an insertion:

-

1- Perform standard BST insert

-

2- Starting from w, travel up and find the first unbalanced node

-

3- Re-balance the tree by performing appropriate rotations on the subtree rooted with z

-

Let w be the newly inserted node z be the first unbalanced node, y be the child of z that comes on the path from w to z x be the grandchild of z that comes on the path from w to z -

There can be 4 possible cases that needs to be handled as x, y and z can be arranged in 4 ways:

-

Cas 1: Left Left Case: z y / \ / \ y T4 Right Rotate (z) x z / \ - - - - - - - - -> / \ / \ x T3 T1 T2 T3 T4 / \ T1 T2 -

Cas 2: Left Right Case: z z x / \ / \ / \ y T4 Left Rotate (y) x T4 Right Rotate(z) y z / \ - - - - - - - - -> / \ - - - - - - - -> / \ / \ T1 x y T3 T1 T2 T3 T4 / \ / \ T2 T3 T1 T2 -

Cas 3: Right Right Case: z y / \ / \ T1 y Left Rotate(z) z x / \ - - - - - - - -> / \ / \ T2 x T1 T2 T3 T4 / \ T3 T4 -

Cas 4: Right Left Case: z z x / \ / \ / \ T1 y Right Rotate (y) T1 x Left Rotate(z) z y / \ - - - - - - - - -> / \ - - - - - - - -> / \ / \ x T4 T2 y T1 T2 T3 T4 / \ / \ T2 T3 T3 T4

-

- Steps to follow for a Deletion:

-

(1) Perform standard BST delete

-

(2) Travel up and find the 1st unbalanced node

-

(3) Re-balance the tree by performing appropriate rotations

-

Let w be the newly inserted node z be the 1st unbalanced node y be the larger height child of z x be the larger height child of y Note that the definitions of x and y are different from insertion -

There can be 4 possible cases:

-

Cas 1: Left Left Case: z y / \ / \ y T4 Right Rotate (z) x z / \ - - - - - - - - -> / \ / \ x T3 T1 T2 T3 T4 / \ T1 T2 -

Cas 2: Left Right Case: z z x / \ / \ / \ y T4 Left Rotate (y) x T4 Right Rotate(z) y z / \ - - - - - - - - -> / \ - - - - - - - -> / \ / \ T1 x y T3 T1 T2 T3 T4 / \ / \ T2 T3 T1 T2 -

Cas 3: Right Right Case: z y / \ / \ T1 y Left Rotate(z) z x / \ - - - - - - - -> / \ / \ T2 x T1 T2 T3 T4 / \ T3 T4 -

Cas 4: Right Left Case: z z x / \ / \ / \ T1 y Right Rotate (y) T1 x Left Rotate(z) z y / \ - - - - - - - - -> / \ - - - - - - - -> / \ / \ x T4 T2 y T1 T2 T3 T4 / \ / \ T2 T3 T3 T4

-

- Steps to follow for a Merge operation:

- Input: Roots R1 and R2 of trees with all keys in R1’s tree smaller than those in R2’s

- Output: The root of a new tree with all the elements of both trees

- (1) Go down side of the tree with the bigger height until reaching the subtree with height equal to slowest height

- (2) Merge the trees

- (2.a) Get new root Ri by removing largest element of left subtree (Ri)

- There can be 3 possible cases:

-

Cas 1: R1.Height = R2.Height = h R1 R2 R1' z R2 z(h+1) / \ / \ / \ / \ / \ T3 T4 (+) T5 T6 Delete(z) T3' T4' (+) T5 T6 Merge R1'(h-1) R2(h) / \ - - - - - -> - - - - -> / \ / \ T1 ... Rebalance T3' T4' T5 T6 / \ h-1 ≤ R1'.height ≤ h AVL property maintained T2 z -

Cas 2: R1.Height (h1) < R2.Height (h2): R1 R2 R1(h1) R2'(h1) / \ / \ / \ / \ T3 T4 (+) T5 T6 Find R2' in T5 T3 T4 (+) T7 T8 Merge - - - - - - - -> - - - - -> Go to Case 1 R2'.height = h1 -

Cas 3: R1.Height (h1) > R2.Height (h2): R1 R2 R1'(h2) R2(h1) / \ / \ / \ / \ T3 T4 (+) T5 T6 Find R1' in T4 T1 T2 (+) T5 T6 Merge - - - - - - - -> - - - - -> Go to Case 1 R1'.height = h2

- Steps to follow for a Split:

- Related Problems:

- Use Cases:

- For more details:

- UC San Diego Course: AVL tree

- UC San Diego Course: AVL Tree implementation

- UC San Diego Course: Split and Merge operations

- Geeks for Geeks: AVL tree insertion

- Geeks for Geeks: AVL tree deletion

- Visualization: AVL Tree

Splay Tree

- For more details:

- UC San Diego Course: Splay Trees Introduction

- UC San Diego Course: Splay Tree Implementation

- UC San Diego Course: Splay Tree Analysis

- Visualization: Splay Tree

Graphs: Basics

- It's a collection of

- V vertices, and

- E edges

- Each edge connects a pair of vertices

- A collection of undirected edges forms an Undirected graph

- A collection of directed edges forms an Directed graph

- A Loop connect a vertex to itself

- Multiple edges between same vertices

- A Simple graph

- It's a graph

- It doesn't have loops

- It doesn't have multiple edges between same vertices

- The degree a vertex:

- It's also called valency of a vertex

- It's the number of edges that are incident to the vertex

- Implementation, Time Complexity and Operations:

- Edge List:

- It consists of storing the graph as a list of edges

- Each edge is a pair of vertices,

- E.g., Edges List: (A, B) ——> (A, C ) ——> (A, D) ——> (C , D)

- Adjacency Matrix:

- Matrix[i,j] = 1 if there is an edge, 0 if there is not

- E.g. Undirected Directed A B C D A B C D A 0 1 1 1 A 0 1 1 1 B 1 0 0 0 B 0 0 0 0 C 1 0 0 1 C 0 1 0 1 D 1 0 1 0 D 0 1 0 0

- Adjacency List:

- Each vertex keeps a list of adjacent vertices (neighbors)

- E.g.

Vertices: Neighbors (Undirected) Neighbors (Directed)

A B -> C -> D B -> C -> D

B A

C A -> D B D A -> C B

-

Time Complexity Edge List Adjacency Matrix Adjacency List IsEdge(v1, v2): O(|E|) O(1) O(deg) ListAllEdge: O(|E|) O(|V|^2) O(|E|) ListNeighbors(ν): O(|E|) O(|V|) O(deg)

- Edge List:

- Density:

- A Dense Graph:

- It's a graph where a large fraction of pairs of vertices are connected by edges

- |E | ≈ |V|^2

- E.g., Routes between cities:

- It could be represented as a dense graph

- There is actually some transportation option that will get you between basically any pair of cities on the map

- What matter is not whether or not it's possible to get between 2 cities, but how hard it is to get between these cities

- A Sparse Graph:

- It's a graph where each vertex has only a few edges

- |E| ≈ |V|

- E.g. 1, we could represent the internet as a sparse graph,

- There are billions of web pages on the internet, but any given web page is only gonna have links to a few dozen others

- E.g. 2. social networks

- Asymptotique analysis depends on the Density of the graph

- A Dense Graph:

- Connected Components:

- A Graph vertices can be partitioned into Connected Components

- So that ν is reachable from w if and only if they are in the same connected component

- A Cycle in a graph:

- It's a sequence of vertices v1,..., vn so that

- (v1, v2 ),..., (vn−1, vn), (vn, v1) are all edges

- Cycle graphs cannot be linearly ordered (typologically ordered)

- E.g.

- Directed Graph:

- Streets with one-way roads

- Links between webpages

- Followers on social network

- Dependencies between tasks

- Directed Graph:

- For more details:

- UC San Diego Course: Basics

- UC San Diego Course: Representation

- Khanacademy Introduction to Graphs

Depth-First Search (DFS)

-

We will explore new edges in Depth First order

-

We will follow a long path forward, only backtracking when we hit a dead end

-

It doesn't matter if the graph is directed or undirected

-

Loop on all virtices: def DFS(G): mark unvisited all vertices ν ∈ G.V

for ν ∈ G.V: if not visited (ν): Explore(ν) -

Explore 1 path until hitting a dead end:

def Explore (v ): visited (v ) = true for (v , w) ∈ E: if not visited (w): Explore (w) -

Time complexity:

-

Implementation: Explore() DFS Adjacency List: O(degre) O(|V| + ∑ degre for all ν) = O(|V| + |E|) Adjacency Matrix: O(|V|) O(|V|^2) - Number of calls to explore:

- Each explored vertex is marked visited

- No vertex is explored after visited once

- Each vertex is explored exactly once

- Checking for neighbors: O(|E|)

- Each vertex checks each neighbor.

- Total number of neighbors over all vertices is O(|E|)

- We prefer adjacency list representation!

-

-

Space Complexity:

-

DFS Previsit and Postvisit Functions:

- Plain DFS just marks all vertices as visited

- We need to keep track of other data to be useful

- Augment functions to store additional information

-

def Explore (v ): visited(ν) = true previsit (ν) for (v , w) ∈ E: if not visited (w): Explore (w) postvisit (ν) - E.g., Clock:

- Keep track of order of visits

- Clock ticks at each pre-/post- visit

- Records previsit and postvisit times for each v

-

previsit (v ) pre (ν) ← clock clock ← clock + 1 -

postvisit (v ) post (v ) ← clock clock ← clock + 1 - It tells us about the execution of DFS

- For any μ, ν the intervals [ pre(μ), post(μ)] and [ pre(μ), post(μ)] are either nested or disjoint

- Nested: μ: [ 1, 6 ], ν [ 3, 4 ]: ν is reachable from μ

- Disjoint: μ [ 1, 6 ], ν [ 9, 11 ]: ν isn't reachable from μ

- Interleaved (isn't possible) μ[ 1, 6 ], ν [ 3, 8 ]

-

Related problems:

- Detect there is a cycle in a graph:

-

For more details:

- UC San Diego Course: Exploring Graphs

- Visualization: DFS

DAGs: Topological Sort

- A DAG:

- Directed Acyclic Graph

- It's a directed graph G without any cycle

- A source vertex is a vertex with no incoming edges

- A sink vertex is a vertex with no outgoing edges

- A Topological Sort:

- Find sink; Put at end of order; Remove from graph; Repeat

- It's the DFS algorithm

-

TopologicalSort (G ) DFS (G) Sort vertices by reverse post-order

- Related problems:

- For more details:

- UC San Diego Course: DAGs

- UC San Diego Course: Topological Sort

- Geeks for Geeks: topological sort with In-degree

- Visualization: Topological Sort using DFS

- Visualization: Topological sort using indegree array

Strongly Connected Components

- Connected vertices:

- 2 vertices ν, w in a directed graph are connected:

- if you can reach ν from w and can reach w from v

- Strongly connected graph: is a directed graph where every vertex is reachable from every other vertex

- Strongly connected components, SCC: It's a collection of subgraphs of an arbitrary directed graph that are strongly connected

- Metagraph:

- It's formed from all strongly connected components

- Each stromgly connected components is represented by a vertice

- The metagraph of a directed graph is always a DAG

- Sink Components

- It's a subgrph of a directed graph with no outgoing edges

- If ν is in a sink SCC,

explore (ν)finds this SCC

- Source Components

- It's a subgrph of a directed graph with no incoming edges

- The vertex with the largest postorder number is in a source component

- Reverse Graph, G^R

- It's a directed graph obtained from G by reversing the direction of all of its edges

- G^R and G have same SCCs

- Source components of G^R are sink components of G

- The vertex with largest postorder in G^R is in a sink SCC of G

- Find all SCCs of a directed graph G:

-

SCCs (G, Gr): Run DFS(Gr): for ν ∈ V in reverse postorder: if ν isn't visited: Explore(ν, G): vertices found are first SCC Mark visited vertices as new SCC - Time Complexity: O(|V| + |E|)

- It's essentially DFS on Gr and then on G

-

- Related Problems:

- For more details:

- UC San Diego Course: Strongly Connected Components I

- UC San Diego Course: Strongly Connected Components II

- Visualization: Strongly connected components

Paths in unweighted Graphs: Path and Distance (Basics)

- A Path:

- It's a collection of edges that connects 2 vertices μ, ν

- It could exist multiple paths linking same vertices

- Path length:

- L(P)

- It's the number of edges of a path

- Distance in unweighted graph

- It's between 2 nodes in a graph

- It's the length of the shortest possible path between these nodes

- For any pair of vertices μ, ν: Distance(μ, ν) in a directed graph is ≥ Distance(μ, ν) in the corresponding undirected graph

- Distance Layers:

- For a given vertex ν in a graph, it's a way of representing the graph by repositioning all its nodes from top to bottom with increasing distance from ν

- Layer 0: contains the vertex v

- Layer 1: contains all vertices which distance to ν is: 1

- ...

- E.g.:

-

G: Layers Distance Layers from A Distance Layers from C A — B — C 0 A C | | / \ D 1 B B D | | 2 C A | 3 D - In a Undirected graph, Edges are possible between same layer nodes or adjacent layers nodes

- In other words, there is no edge between nodes of a layer l and nodes of layers < l - 1 and layers > l + 1

- E.g. From example above:

-

Distance Layers from C: 0 C C / \ / | \ 1 B D => there is no edge => if there an edge => B A D | between A and C between A and C 2 A

- In an Directed graph, Edges are possible between same layer nodes, adjacent layers nodes and to all previous layers

- E.g.

- Edges between layer 3 and any previous layer 2, 1, 0 are possible: this doesn't change the distance between D and A

- Edges between layer 0 or 1 and layer 3 are still not possible: this does change the distance between D and A

-

Distance Layers from C: 0 D D ↙ ↘ ↙ ↘ 1 C E => there is no edge => if an edge => C E ↓ from C to A from C to A ↙ ↘ 2 B B A (A would be in layer 2) ↓ 3 A

- For more details:

- UC San Diego Course: Path and Distance

Breadth-First Search (BFS)

- To traverse all nodes at one layer before progressing the next layer

- Implementation, Time Complexity:

- Time Complexity: O(|V| + |E|)

-

BFS(G , S): for all μ ∈ V: dist[μ] ← ∞ dist[S] ← 0 Q ← {S} { queue containing just S} while Q is not empty: μ ← Dequeue (Q) for all (μ, ν) ∈ u.E: if dist[v ] = ∞ : Enqueue(Q, ν) dist[ν] ← dist[μ] + 1

- BSF Properties:

- A node is discovered when it's found for the 1st time and enqueued