Releases: kengz/SLM-Lab

upgrade plotly, replace orca with kaleido

What's Changed

Full Changelog: v4.2.3...v4.2.4

fix GPU installation and assignment issue

What's Changed

- Added Algorithms config files for VideoPinball-v0 game by @dd-iuonac in #488

- fix build for new RTX GPUs by @kengz and @Karl-Grantham in #496

- remove the `reinforce_pong.json` spec to prevent confusion in #499

New Contributors

- @dd-iuonac made their first contribution in #488

- @Karl-Grantham for help with debugging #496

Full Changelog: v4.2.2...v4.2.3

Improve Installation / Colab notebook

Improve Installation Stability

🙌 Thanks to @Nickfagiano for help with debugging.

- #487 update installation to work with MacOS BigSur

- #487 improve setup with Conda path guard

- #487 lock `atari-py` version to 0.2.6 for safety

Google Colab/Jupyter

🙌 Thanks to @piosif97 for helping.

Windows setup

🙌 Thanks to @vladimirnitu and @steindaian for providing the PDF.

Update installation


Dependencies and systems around SLM Lab have changed and caused some breakages. This release fixes these installation issues.

- #461, #476 update to `homebrew/cask` (thanks @ben-e, @amjadmajid)

- #463 add pybullet to dependencies (thanks @rafapi)

- #483 fix missing install command in Arch Linux setup (thanks @sebimarkgraf)

- #485 update GitHub Actions CI to v2

- #485 fix demo spec to use strict json

Resume mode, Plotly and PyTorch update, OnPolicyCrossEntropy memory

Resume mode

- #455 adds `train@` resume mode and refactors the `enjoy` mode. See PR for detailed info.

train@ usage example

Specify train mode as `train@{predir}`, where `{predir}` is the data directory of the last training run, or simply use `latest` to use the latest run, e.g.:

python run_lab.py slm_lab/spec/benchmark/reinforce/reinforce_cartpole.json reinforce_cartpole train

# terminate run before its completion

# optionally edit the spec file in a past-future-consistent manner

# run resume with either of the commands:

python run_lab.py slm_lab/spec/benchmark/reinforce/reinforce_cartpole.json reinforce_cartpole train@latest

# or to use a specific run folder

python run_lab.py slm_lab/spec/benchmark/reinforce/reinforce_cartpole.json reinforce_cartpole train@data/reinforce_cartpole_2020_04_13_232521

enjoy mode refactor

The `train@` resume mode API allows the `enjoy` mode to be refactored, so both now share a similar syntax. Continuing with the example above, to enjoy a trained model, we now use:

python run_lab.py slm_lab/spec/benchmark/reinforce/reinforce_cartpole.json reinforce_cartpole enjoy@data/reinforce_cartpole_2020_04_13_232521/reinforce_cartpole_t0_s0_spec.json

Plotly and PyTorch update

- #453 updates Plotly to 4.5.4 and PyTorch to 1.3.1.

- #454 explicitly shuts down Plotly orca server after plotting to prevent zombie processes

PPO batch size optimization

- #453 adds chunking to allow PPO to run on larger batch size by breaking up the forward loop.
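The idea behind chunking can be sketched as follows. This is a minimal illustration in plain Python, not the SLM Lab code from #453: `forward_in_chunks`, `toy_forward` and `chunk_size` are hypothetical names, and a list of floats stands in for a batch tensor.

```python
from typing import Callable, List, Sequence


def forward_in_chunks(
    forward: Callable[[Sequence[float]], List[float]],
    batch: Sequence[float],
    chunk_size: int,
) -> List[float]:
    """Apply `forward` to `batch` in slices of at most `chunk_size` items.

    Only one slice is passed through `forward` at a time, so the peak
    working set is bounded by `chunk_size` rather than by the full batch.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    outputs: List[float] = []
    for start in range(0, len(batch), chunk_size):
        chunk = batch[start:start + chunk_size]
        outputs.extend(forward(chunk))
    return outputs


def toy_forward(xs: Sequence[float]) -> List[float]:
    """Stand-in for a network forward pass (hypothetical)."""
    return [2.0 * x for x in xs]


batch = [float(i) for i in range(10)]
chunked = forward_in_chunks(toy_forward, batch, chunk_size=4)
full = toy_forward(batch)
```

For an element-wise forward pass like this toy one, the chunked result matches the single-pass result; the trade-off is more forward calls in exchange for a smaller peak memory footprint.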

New OnPolicyCrossEntropy memory

Discrete SAC benchmark update


| Env. \ Alg. | DQN | DDQN+PER | A2C (GAE) | A2C (n-step) | PPO | SAC |

| Breakout | 80.88 | 182 | 377 | 398 | 443 | 3.51* |

| Pong | 18.48 | 20.5 | 19.31 | 19.56 | 20.58 | 19.87* |

| Seaquest | 1185 | 4405 | 1070 | 1684 | 1715 | 171* |

| Qbert | 5494 | 11426 | 12405 | 13590 | 13460 | 923* |

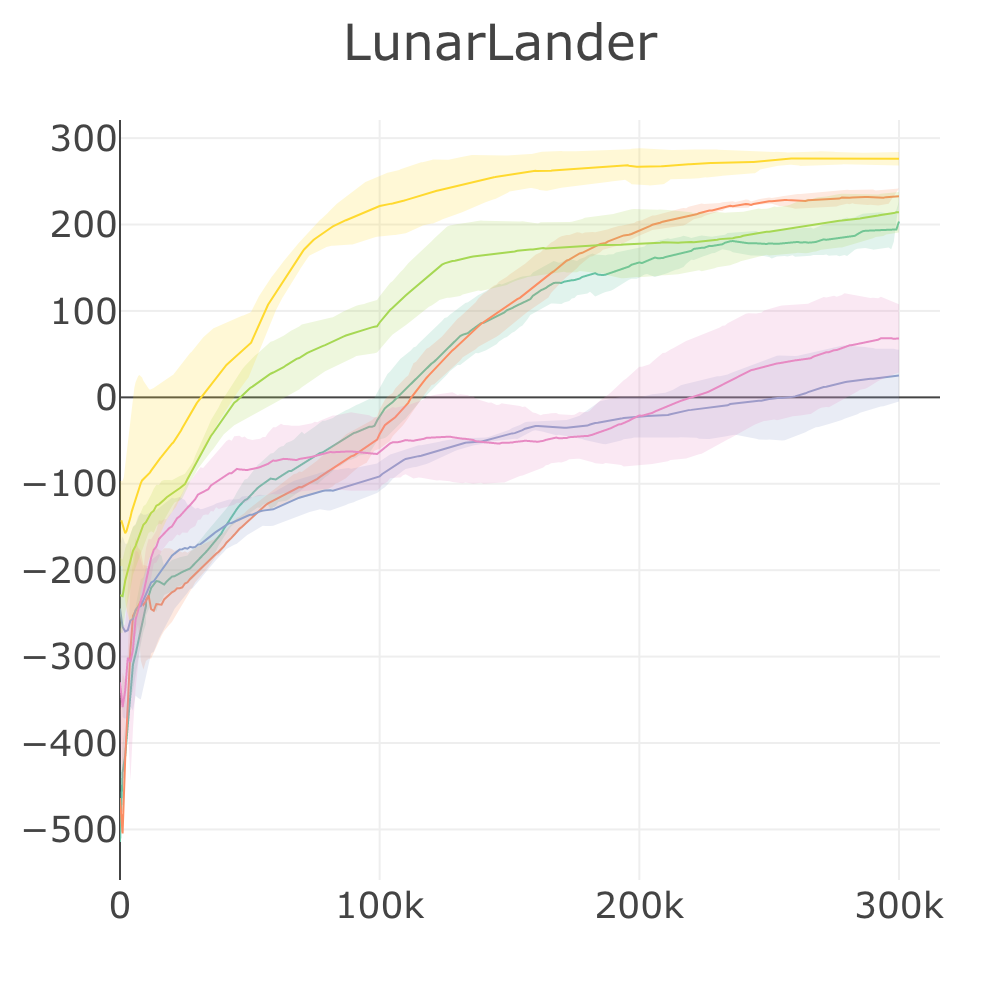

| LunarLander | 192 | 233 | 25.21 | 68.23 | 214 | 276 |

| UnityHallway | -0.32 | 0.27 | 0.08 | -0.96 | 0.73 | 0.01 |

| UnityPushBlock | 4.88 | 4.93 | 4.68 | 4.93 | 4.97 | -0.70 |

Episode score at the end of training attained by SLM Lab implementations on discrete-action control problems. Reported episode scores are the average over the last 100 checkpoints, and then averaged over 4 Sessions. A Random baseline with score averaged over 100 episodes is included. Results marked with * were trained using the hybrid synchronous/asynchronous version of SAC to parallelize and speed up training time. For SAC, Breakout, Pong and Seaquest were trained for 2M frames instead of 10M frames.
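The aggregation described above (mean over the last 100 checkpoints, then mean over Sessions) can be sketched as follows. This is an illustrative reading of that description, not the lab's analysis code: `final_score` is a hypothetical helper and the numbers are made up.

```python
from statistics import mean
from typing import Sequence


def final_score(session_returns: Sequence[Sequence[float]], last_n: int = 100) -> float:
    """Average the last `last_n` checkpoint returns per session,
    then average those per-session values across sessions."""
    per_session = [mean(returns[-last_n:]) for returns in session_returns]
    return mean(per_session)


# Made-up checkpoint returns for 4 sessions with 3 checkpoints each.
sessions = [
    [10.0, 20.0, 30.0],
    [20.0, 30.0, 40.0],
    [0.0, 10.0, 20.0],
    [30.0, 40.0, 50.0],
]
# With last_n=2 the per-session means are 25, 35, 15 and 45.
score = final_score(sessions, last_n=2)
```

Averaging per session first means each Session contributes equally to the reported score, even if sessions happen to log a different number of checkpoints.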

For the full Atari benchmark, see Atari Benchmark

RAdam+Lookahead optim, TensorBoard, Full Benchmark Upload

This marks a stable release of SLM Lab with full benchmark results.

RAdam+Lookahead optimizer

- The Lookahead + RAdam optimizer significantly improves the performance of some RL algorithms (A2C (n-step), PPO) on continuous domain problems, but does not improve others (A2C (GAE), SAC). #416

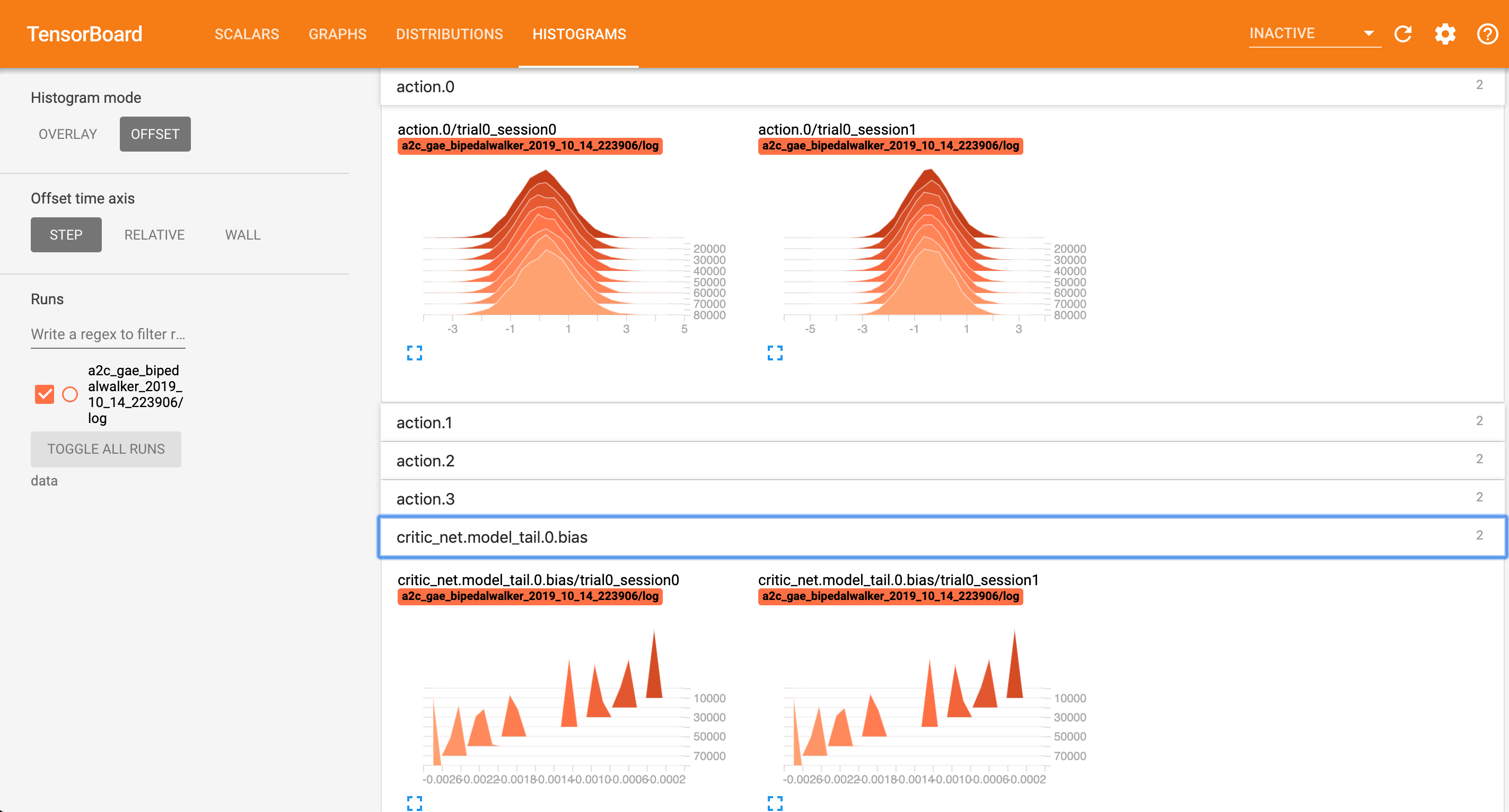

TensorBoard

- Add TensorBoard in body to auto-log summary variables, graph, network parameter histograms, action histogram. To launch TensorBoard, run `tensorboard --logdir=data` after a session/trial is completed. Example screenshot:

Full Benchmark Upload

Plot Legend

Discrete Benchmark

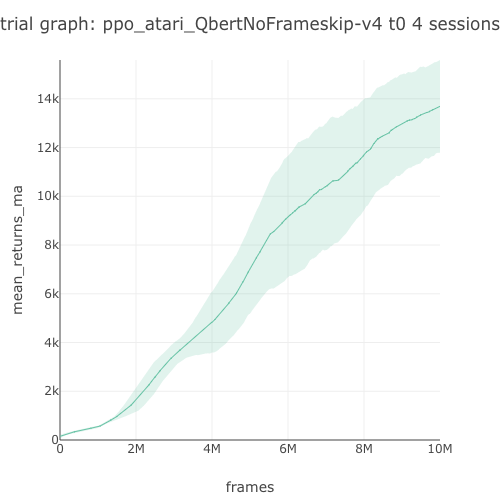

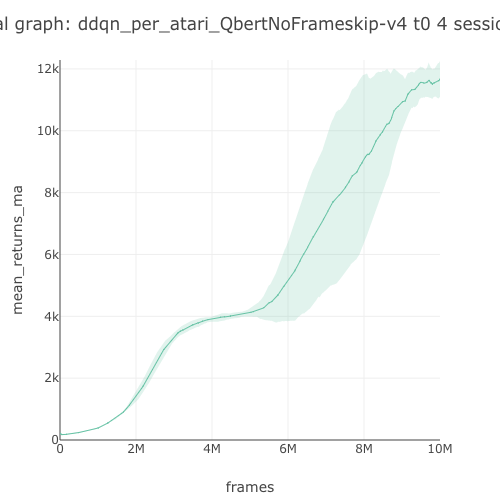

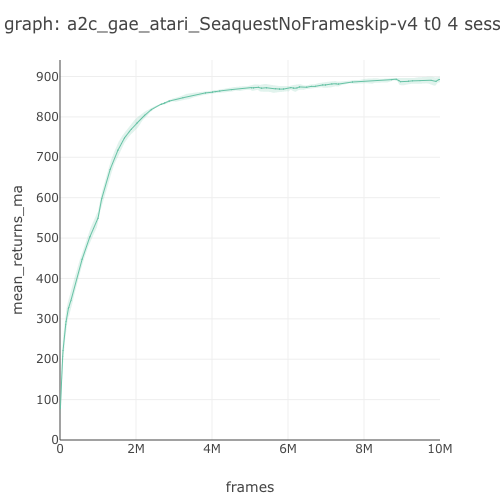

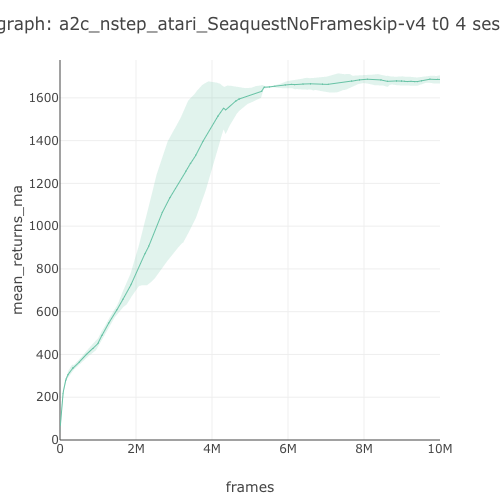

| Env. \ Alg. | DQN | DDQN+PER | A2C (GAE) | A2C (n-step) | PPO | SAC |

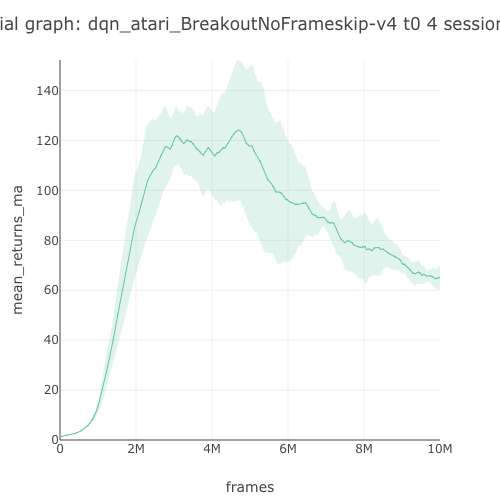

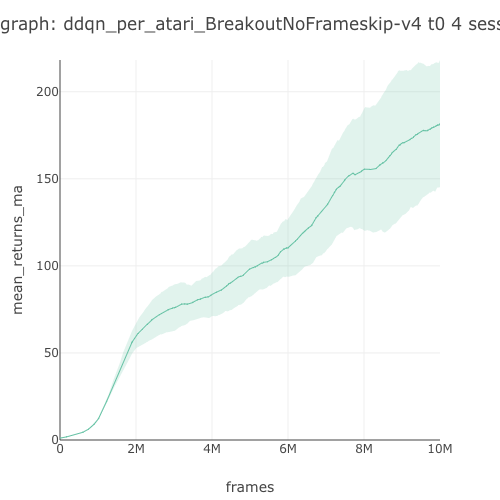

| Breakout | 80.88 | 182 | 377 | 398 | 443 | - |

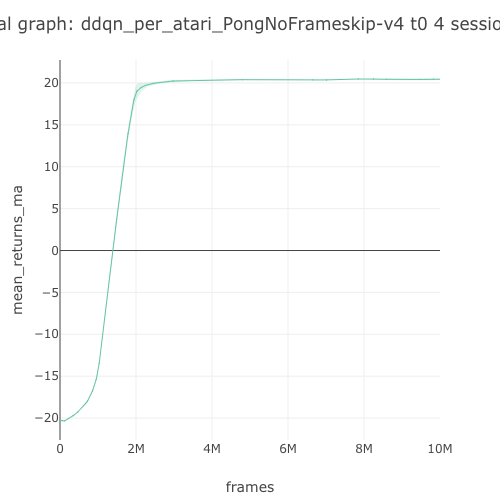

| Pong | 18.48 | 20.5 | 19.31 | 19.56 | 20.58 | 19.87* |

| Seaquest | 1185 | 4405 | 1070 | 1684 | 1715 | - |

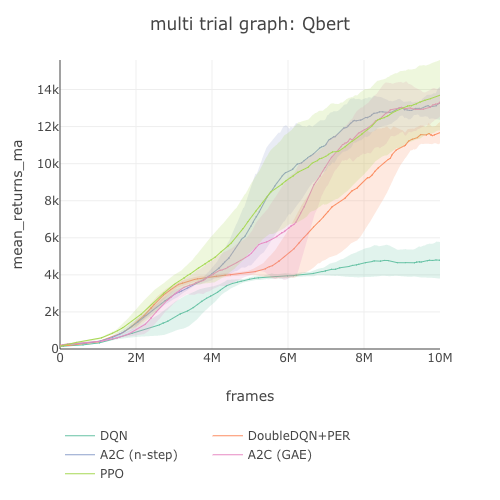

| Qbert | 5494 | 11426 | 12405 | 13590 | 13460 | 214* |

| LunarLander | 192 | 233 | 25.21 | 68.23 | 214 | 276 |

| UnityHallway | -0.32 | 0.27 | 0.08 | -0.96 | 0.73 | - |

| UnityPushBlock | 4.88 | 4.93 | 4.68 | 4.93 | 4.97 | - |

Episode score at the end of training attained by SLM Lab implementations on discrete-action control problems. Reported episode scores are the average over the last 100 checkpoints, and then averaged over 4 Sessions. Results marked with * were trained using the hybrid synchronous/asynchronous version of SAC to parallelize and speed up training time.

For the full Atari benchmark, see Atari Benchmark

Continuous Benchmark

| Env. \ Alg. | A2C (GAE) | A2C (n-step) | PPO | SAC |
|---|---|---|---|---|
| RoboschoolAnt | 787 | 1396 | 1843 | 2915 |
| RoboschoolAtlasForwardWalk | 59.87 | 88.04 | 172 | 800 |
| RoboschoolHalfCheetah | 712 | 439 | 1960 | 2497 |
| RoboschoolHopper | 710 | 285 | 2042 | 2045 |
| RoboschoolInvertedDoublePendulum | 996 | 4410 | 8076 | 8085 |
| RoboschoolInvertedPendulum | 995 | 978 | 986 | 941 |
| RoboschoolReacher | 12.9 | 10.16 | 19.51 | 19.99 |
| RoboschoolWalker2d | 280 | 220 | 1660 | 1894 |
| RoboschoolHumanoid | 99.31 | 54.58 | 2388 | 2621* |
| RoboschoolHumanoidFlagrun | 73.57 | 178 | 2014 | 2056* |
| RoboschoolHumanoidFlagrunHarder | -429 | 253 | 680 | 280* |
| Unity3DBall | 33.48 | 53.46 | 78.24 | 98.44 |
| Unity3DBallHard | 62.92 | 71.92 | 91.41 | 97.06 |

Episode score at the end of training attained by SLM Lab implementations on continuous control problems. Reported episode scores are the average over the last 100 checkpoints, and then averaged over 4 Sessions. Results marked with * require 50M-100M frames, so we use the hybrid synchronous/asynchronous version of SAC to parallelize and speed up training time.

Atari Benchmark

- Upload PR #427

- Dropbox data: DQN

- Dropbox data: DDQN+PER

- Dropbox data: A2C (GAE)

- Dropbox data: A2C (n-step)

- Dropbox data: PPO

- Dropbox data: all Atari graphs

| Env. \ Alg. | DQN | DDQN+PER | A2C (GAE) | A2C (n-step) | PPO |
|---|---|---|---|---|---|
| Adventure | -0.94 | -0.92 | -0.77 | -0.85 | -0.3 |
| AirRaid | 1876 | 3974 | 4202 | 3557 | 4028 |
| Alien | 822 | 1574 | 1519 | 1627 | 1413 |
| Amidar | 90.95 | 431 | 577 | 418 | 795 |
| Assault | 1392 | 2567 | 3366 | 3312 | 3619 |
| Asterix | 1253 | 6866 | 5559 | 5223 | 6132 |
| Asteroids | 439 | 426 | 2951 | 2147 | 2186 |
| Atlantis | 68679 | 644810 | 2747371 | 2259733 | 2148077 |
| BankHeist | 131 | 623 | 855 | 1170 | 1183 |
| BattleZone | 6564 | 6395 | 4336 | 4533 | 13649 |


v4.0.1: Soft Actor-Critic

This release adds a new algorithm: Soft Actor-Critic (SAC).

Soft Actor-Critic

- implement the original paper: "Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor" https://arxiv.org/abs/1801.01290 #398

- implement the improvement of SAC paper: "Soft Actor-Critic Algorithms and Applications" https://arxiv.org/abs/1812.05905 #399

- extend SAC to work directly for discrete environments using a custom `GumbelSoftmax` distribution
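For context, Gumbel-softmax sampling draws a relaxed one-hot sample from categorical logits by adding Gumbel noise and applying a temperature-scaled softmax. The sketch below shows the general technique in plain Python. It is not SLM Lab's custom `GumbelSoftmax` class: the function name, the `temperature` parameter and the clamping constant are assumptions made for illustration.

```python
import math
import random
from typing import List, Optional, Sequence


def gumbel_softmax_sample(
    logits: Sequence[float],
    temperature: float = 1.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return a relaxed one-hot sample (a probability vector) from `logits`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs are defined.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed logits.
    peak = max(noisy)
    exps = [math.exp(x - peak) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# Seeded generator so the sample is reproducible (values are illustrative).
sample = gumbel_softmax_sample([1.0, 2.0, 3.0], temperature=0.5, rng=random.Random(0))
```

Lower temperatures push the sample toward a one-hot vector, while higher temperatures make it closer to uniform.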

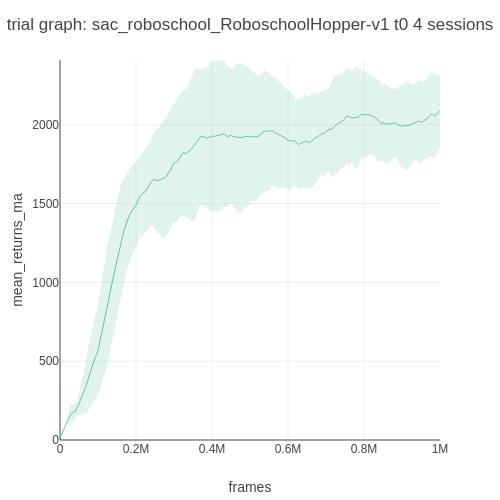

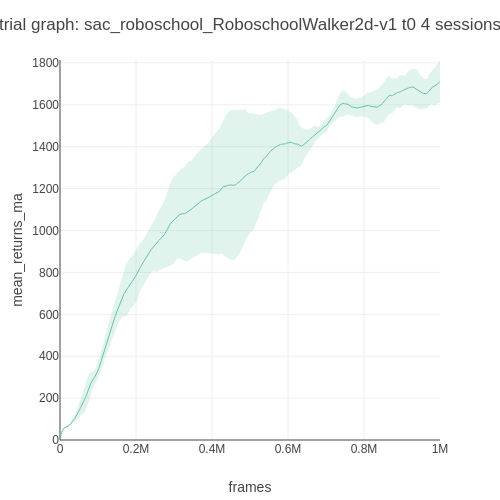

Roboschool (continuous control) Benchmark

Note that the Roboschool reward scales are different from MuJoCo's.

| Env. \ Alg. | SAC |
|---|---|
| RoboschoolAnt | 2451.55 |
| RoboschoolHalfCheetah | 2004.27 |
| RoboschoolHopper | 2090.52 |
| RoboschoolWalker2d | 1711.92 |

LunarLander (discrete control) Benchmark

[Figures: trial graph and moving average]

v4.0.0: Algorithm Benchmark, Analysis, API simplification

This release corrects and optimizes all the algorithms from benchmarking on Atari. New metrics are introduced. The lab's API is also redesigned for simplicity.

Benchmark

- full algorithm benchmark on 4 core Atari environments #396

- LunarLander benchmark #388 and BipedalWalker benchmark #377

This benchmark table is pulled from PR396. See the full benchmark results here.

| Env. \ Alg. | A2C (GAE) | A2C (n-step) | PPO | DQN | DDQN+PER |
|---|---|---|---|---|---|
| Breakout | 389.99 | 391.32 | 425.89 | 65.04 | 181.72 |
| Pong | 20.04 | 19.66 | 20.09 | 18.34 | 20.44 |
| Qbert | 13,328.32 | 13,259.19 | 13,691.89 | 4,787.79 | 11,673.52 |
| Seaquest | 892.68 | 1,686.08 | 1,583.04 | 1,118.50 | 3,751.34 |

Algorithms

- correct and optimize all algorithms with benchmarking #315 #327 #328 #361

- introduce "shared" and "synced" Hogwild modes for distributed training #337 #340

- streamline and optimize agent components too

The full list of algorithms is now:

- SARSA

- DQN, distributed-DQN

- Double-DQN, Dueling-DQN, PER-DQN

- REINFORCE

- A2C, A3C (N-step & GAE)

- PPO, distributed-PPO

- SIL (A2C, PPO)

All the algorithms can also be run in distributed mode; in some cases the distributed versions have special names (mentioned above).

Environments

- implement vector environments #302

- implement more environment wrappers for preprocessing. Some replay memories are retired. #303 #330 #331 #342

- make Lab Env wrapper interface identical to gym #304, #305, #306, #307

API

- all the Space objects (AgentSpace, EnvSpace, AEBSpace, InfoSpace) are retired, to opt for a much simpler interface. #335 #348

- major API simplification throughout

Analysis

- rework analysis, introduce new metrics: strength, sample efficiency, training efficiency, stability, consistency #347 #349

- fast evaluation using vectorized env for `rigorous_eval` #390, and using inference for fast eval #391

Search

Improve installation, functions, add out layer activation

Improve installation

- #288 split out yarn installation as extra step

Improve functions

- #283 #284 redesign fitness slightly

- #281 simplify PER sample index

- #287 #290 improve DQN polyak and network switching

- #291 refactor advantage functions

- #295 #296 refactor various utils, fix PyTorch inplace ops
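For reference, a Polyak (soft) target-network update of the kind mentioned in #287 #290 blends the target parameters toward the online parameters at each step. The sketch below uses plain lists in place of network weights. `polyak_update` and the convention that `beta` weights the old target values are assumptions for illustration and may not match the lab's code.

```python
from typing import List, Sequence


def polyak_update(
    target: Sequence[float], online: Sequence[float], beta: float
) -> List[float]:
    """Return beta * target + (1 - beta) * online, element-wise."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must be in [0, 1]")
    if len(target) != len(online):
        raise ValueError("parameter lists must have the same length")
    return [beta * t + (1.0 - beta) * o for t, o in zip(target, online)]


# beta=0.5 moves each target value halfway toward the online value.
updated = polyak_update([0.0, 1.0], [1.0, 3.0], beta=0.5)
```

With `beta=1.0` the target never changes, and with `beta=0.0` the update becomes a hard copy of the online parameters.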

Add out layer activation

- #300 add out layer activation