(2021) Watch your Python code browse the web on your screen in a GUI browser. I highly recommend working on Linux (including virtual machines) or macOS.

Before you try to scrape any website, go through its robots.txt file. You can access it via domainname/robots.txt. There, you will see a list of pages allowed and disallowed for scraping. You should not violate any terms of service of any website you scrape.
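
The robots.txt check can also be done from Python before any crawling starts. The following is a minimal sketch using the standard library's `urllib.robotparser`; the helper name `is_allowed`, the user agent `my-crawler`, and the sample rules are assumptions made for illustration and are not part of this project.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for path in ("/", "/private/report"):
        url = "https://example.com" + path
        print(url, is_allowed(SAMPLE_ROBOTS, "my-crawler", url))
```

For a live site, `RobotFileParser` can instead be pointed at the real file with `set_url()` followed by `read()`. Passing this check does not replace reading the site's terms of service.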

cp .env.example .env

python3 -m venv env && \
source env/bin/activate

pip install -r requirements.txt

python3 manage.py migrate

Make sure ChromeDriver is installed and added to your PATH environment variable.
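
Whether the driver is already reachable can be checked from Python before downloading anything. This is a small sketch under the assumption that the binary is named `chromedriver`; the function names are hypothetical helpers, not part of this project.

```python
import shutil
import subprocess
from typing import Optional


def find_chromedriver(name: str = "chromedriver") -> Optional[str]:
    """Return the full path of the driver binary if it is on PATH, else None."""
    return shutil.which(name)


def driver_version(path: str) -> str:
    """Run `<path> --version` and return its output as text."""
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


if __name__ == "__main__":
    path = find_chromedriver()
    if path is None:
        print("chromedriver was not found on PATH")
    else:
        print(path, driver_version(path))
```

If the lookup returns `None`, the driver is either not installed or its directory is missing from PATH.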

# chromedriver_mac64

# chromedriver_win32

# See https://chromedriver.storage.googleapis.com

# for drivers list.

wget https://chromedriver.storage.googleapis.com/2.37/chromedriver_linux64.zip

unzip chromedriver_linux64.zip

sudo mv chromedriver /usr/local/bin/chromedriver

chromedriver --version

Update the command in crawl.py, then run the crawler:

alias py="python3"

py manage.py crawl

If you still need help installing and running the app, check out the README at https://github.com/kkamara/python-react-boilerplate, which is the base system for this python-selenium app.
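
For orientation, a browsing step driven by Selenium might look roughly like the sketch below. It is not the contents of this project's crawl.py: the function names, the window size, and the idea of returning the page title are all assumptions chosen for illustration. It relies on the `selenium` package and on ChromeDriver being on PATH.

```python
from typing import List


def chrome_arguments(headless: bool = False) -> List[str]:
    """Build the Chrome command-line switches for a session (hypothetical helper)."""
    args = ["--window-size=1280,800"]
    if headless:
        # Without this switch the browser window opens on screen.
        args.append("--headless")
    return args


def fetch_title(url: str, headless: bool = False) -> str:
    """Open url in Chrome through Selenium and return the page title."""
    # Imported here so chrome_arguments() works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.title
    finally:
        driver.quit()  # always close the browser, even if the page load fails
```

Calling `fetch_title("https://example.com")` with the default `headless=False` opens a visible Chrome window, which matches the on-screen browsing this app is about.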

alias compose='docker-compose -f local.yml'

compose build

compose up

# Automated runs with Docker:

# compose up --build -d && python3 manage.py crawl

py manage.py shell -i ipython

py manage.py show_urls

View the API collection here.

Admin credentials are set in ./compose/local/django/start

export DJANGO_SUPERUSER_PASSWORD=secret

py manage.py createsuperuser \
--username admin_user \
--email admin@django-app.com \
--no-input \
--first_name Admin \
--last_name User

py manage.py collectstatic

Mail environment credentials are in .env.

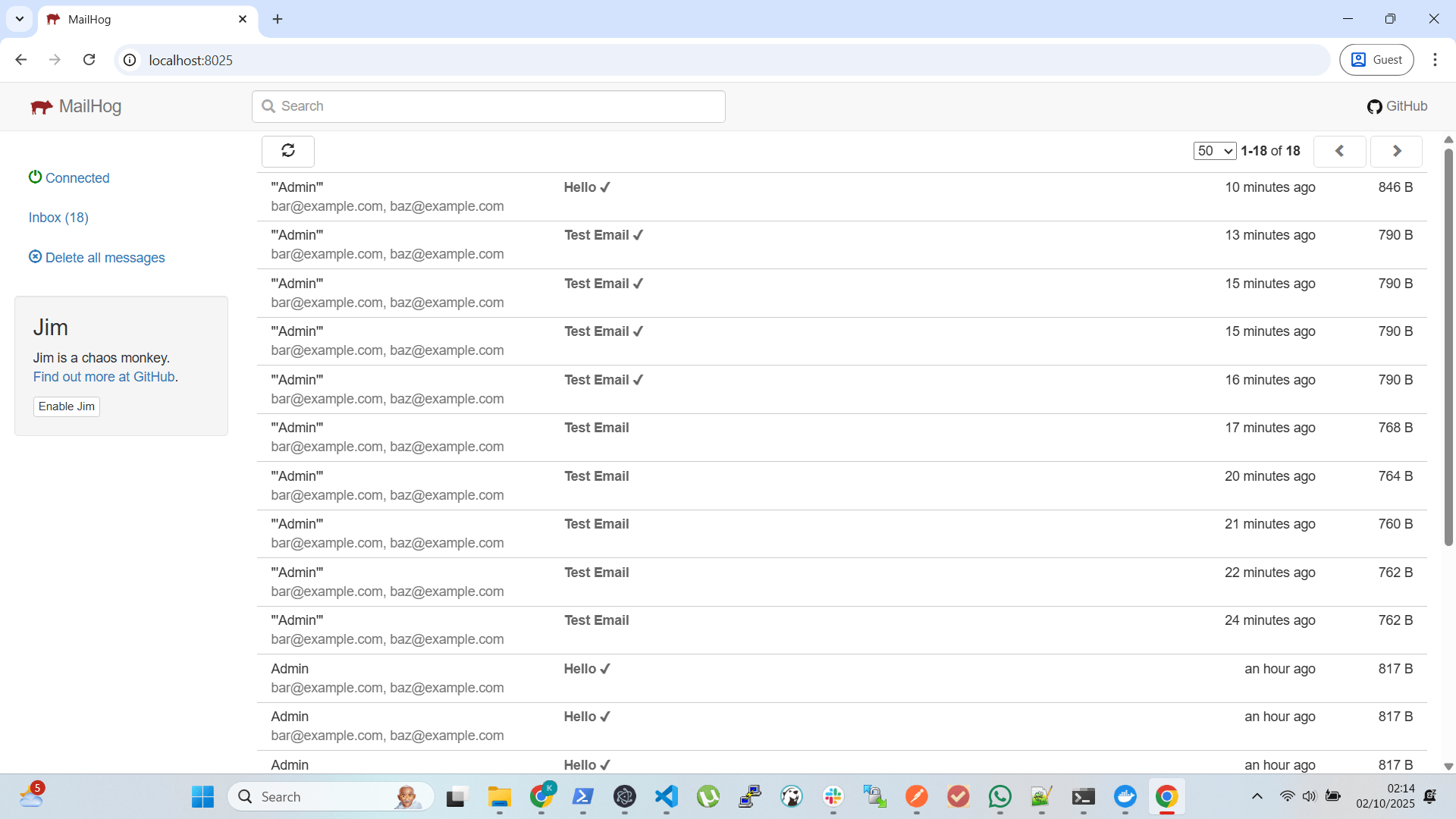

The MailHog Docker container serves its web UI at http://localhost:8025.
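
To confirm that mail reaches MailHog, a test message can be sent with the standard library. This is a sketch only: it assumes MailHog's default SMTP port 1025 on localhost, which may differ from the values in your .env, and the function names and addresses are made up for illustration.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Create a small plain-text message for checking mail delivery."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "MailHog test"
    msg.set_content("If this shows up in the MailHog inbox, mail delivery works.")
    return msg


def send_message(msg: EmailMessage, host: str = "localhost", port: int = 1025) -> None:
    """Send msg over plain SMTP. Host and port are assumptions; check .env."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg)
```

After calling `send_message(build_test_message("dev@example.com", "admin@example.com"))` with the containers running, the message should be listed in the MailHog web UI.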

See Python ReactJS Boilerplate.

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

Please make sure to update tests as appropriate.