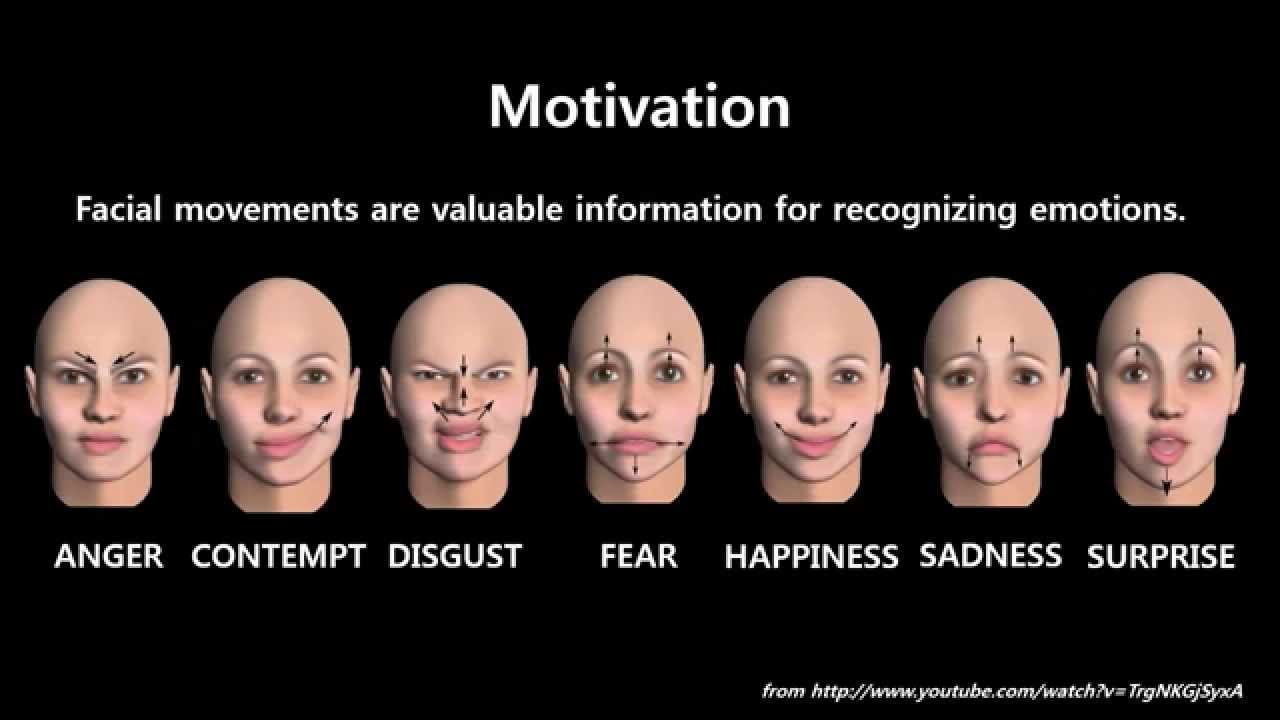

In this work, I build and train a convolutional neural network (CNN) in Keras from scratch to recognize facial expressions. The data consists of 48x48 pixel grayscale images of faces. The objective is to classify each face, based on the emotion shown in the facial expression, into one of seven categories (0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral). I use OpenCV to automatically detect faces in images and draw bounding boxes around them. Once the CNN has been trained, saved, and exported, its predictions are served directly to a web interface to perform real-time facial expression recognition on video and image data.

The dataset comes from a Kaggle competition. The faces have been automatically registered so that each face is more or less centered and occupies about the same amount of space in each image. Every image is labeled with one of the seven emotion categories listed above.
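As a small illustration of the label scheme, the sketch below maps a 7-element score vector (for example, the softmax output of a classifier) back to an emotion name. The function name `decode_prediction` and the example scores are hypothetical and are not taken from the repository; only the label order comes from the description above.

```python
# Label order follows the category indices given above (0=Angry ... 6=Neutral).
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def decode_prediction(probabilities):
    """Return (label, score) for the highest-scoring of the seven classes.

    Hypothetical helper for illustration; not part of the repository.
    """
    if len(probabilities) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} scores, got {len(probabilities)}")
    # Index of the largest score (a plain-Python argmax).
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return EMOTIONS[best_index], probabilities[best_index]


# Made-up scores for a single face, used only to show the call.
example_scores = [0.05, 0.01, 0.04, 0.70, 0.05, 0.10, 0.05]
label, confidence = decode_prediction(example_scores)
print(f"{label} ({confidence:.2f})")
```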

- Develop a facial expression recognition model in Keras

- Build and train a convolutional neural network (CNN)

- Deploy the trained model to a web interface with Flask

- Apply the model to real-time video streams and image data
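To make the "build and train a CNN" objective more concrete, here is a minimal sketch of a small Keras classifier for 48x48 grayscale inputs and seven output classes. The layer sizes, dropout rate, and optimizer are assumptions chosen for illustration and are not the architecture used in the notebook; the sketch also assumes TensorFlow 2.x is installed.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_model(num_classes=7):
    """Build a small illustrative CNN (assumed architecture, not the notebook's)."""
    model = keras.Sequential([
        keras.Input(shape=(48, 48, 1)),            # 48x48 grayscale image
        layers.Conv2D(32, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(2),                     # 48x48 -> 24x24
        layers.Conv2D(64, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(2),                     # 24x24 -> 12x12
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.5),                        # assumed regularization strength
        layers.Dense(num_classes, activation="softmax"),
    ])
    # Categorical cross-entropy expects one-hot encoded labels.
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model


model = build_model()
model.summary()
```

Training would then call `model.fit` on image arrays of shape `(n, 48, 48, 1)` with one-hot labels of shape `(n, 7)`.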

- Clone the repo

https://github.com/nqkhanh2002/Classify-Traffic-Signs-Using-Deep-Learning-for-Self-Driving-Cars.git

- Run the Jupyter notebook. The notebook automatically downloads the data to your device. If packages are missing during execution, install them with pip, the package installer for Python.

- Edit the VideoCapture parameter in camera.py to specify your video source. To apply the model to a recorded video, point it at any video in the videos folder.

- Run main.py to apply the model to real-time video streams and image data
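For the video source step, it may help to know that OpenCV's `cv2.VideoCapture` accepts either an integer camera index (such as `0` for the default webcam) or a path to a video file. The helper below is a hypothetical sketch of how a source setting could be normalized before being passed to `cv2.VideoCapture`; the function name and the file name `videos/sample.mp4` are placeholders and do not come from the repository.

```python
def parse_video_source(source):
    """Turn a source setting into a value suitable for cv2.VideoCapture.

    Hypothetical helper: digit-only input is treated as a camera index,
    anything else as a path to a video file.
    """
    text = str(source).strip()
    if text.isdigit():
        return int(text)  # camera device index, e.g. 0 for the default webcam
    return text           # path to a video file


print(parse_video_source("0"))
print(parse_video_source("videos/sample.mp4"))  # placeholder file name
```

The returned value would then be used as `cv2.VideoCapture(parse_video_source(...))` inside camera.py, assuming that file constructs the capture object directly.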

- Facebook : Nguyễn Quốc Khánh

- Email : nqkdeveloper@gmail.com