Project team members:

- Warren Jasper (wjasper@ncsu.edu)

- Shivam Ghodke (sghodke@ncsu.edu)

Tech stack: OpenCV, Python, Raspberry Pi, Picamera

To start with the project, follow these steps:

git clone https://github.com/wjasper/PingPongTracker.git

cd PingPongTracker

pip3 install -r requirements.txt # Recommended: install dependencies in a virtual environment

python3 main_linux.py # For Linux

# or

python3 main_non_linux.py # For non-Linux systems

| Description | Link |
|---|---|
| Portfolio website | shivam.foo |
| Important | Looking for entry level SWE roles, graduating @NC State in computer science in May 2025 |
| GitHub | nuttysunday |
| Blog Link | shivam.foo/blogs/ping-pong-ball-tracking-and-projected-distance-calculation-system-for-data-modeling |
| Video Link | YouTube Video |
| LinkedIn Profile | |
| Resume | Resume |
| Twitter/X account | |

You can watch an overview of the project by clicking the link below:

Video Link: Project Overview


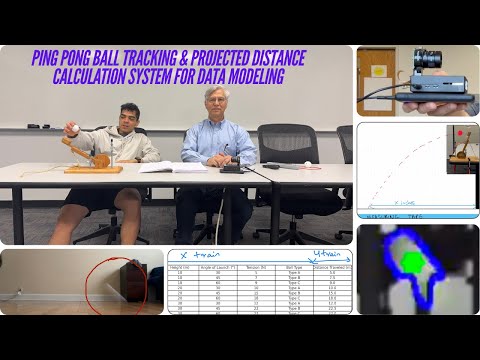

The goal is to calculate the distance at which a ping pong ball, launched from a catapult, first makes contact with the ground.

We built this project for data modeling: the measurements are collected as training data that can later be used by machine learning regression models.

The manual method is to lay aluminium foil over the area where the ball is expected to land for a particular configuration, launch the ball, find the dent in the foil, and read the distance off a measuring tape placed parallel to the foil.

This process is tedious and slow. It needs two people, and it is not feasible when sampling 10,000 data points.

A Raspberry Pi with an HD camera mounted on it, powered by a power bank and operated over remote desktop, makes the system very mobile.

Place the Raspberry Pi camera as shown in the picture below:

Place a measuring tape in the frame, align it with the green and red lines, and read the value on the tape, in inches, at each line.

Here are the values:

- Green value (mid_value) = 112

- Red value (minimum_value) = 84

After weeks of research and trial and error, when we had lost almost all hope and were about to scrap the project, my professor came up with a brilliant idea: background separation. We take the first frame, convert it to black and white, and keep it as `bg_image`. Then, for each later frame, we take the absolute difference with `bg_image`.
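The absolute-difference step can be sketched as follows. This is a minimal illustration rather than the project's code: it assumes the frames are already 8-bit grayscale NumPy arrays, the function names are hypothetical, and the `threshold` value is an assumption that does not come from the project. In OpenCV, `cv2.absdiff` computes the same per-pixel difference.

```python
import numpy as np


def abs_difference(bg_image, frame):
    """Per-pixel absolute difference of two 8-bit grayscale images."""
    # Widen to int16 first so the uint8 subtraction cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - bg_image.astype(np.int16))
    return diff.astype(np.uint8)


def moving_pixels(bg_image, frame, threshold=30):
    """Boolean mask of pixels that changed by more than `threshold`.

    The threshold of 30 is an assumed value, not taken from the project.
    """
    return abs_difference(bg_image, frame) > threshold
```

Pixels that are identical in both images come out as 0, so anything that did not move since the first frame drops out of the difference image.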

The first challenge was that OpenCV could not access the Raspberry Pi camera directly in our setup, so we had to use the Picamera library to get the camera object. We were also getting a very low frame rate and had to raise it by trying out various Picamera techniques.

We initially wanted to do this in real time, but because of hardware limitations the Pi could not process frames as fast as it received them. So we switched to post-processing: first record the video, store the frames in a buffer, and then process the frames one by one.
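The record-first, process-later pattern can be sketched like this. The function and parameter names are hypothetical, and `frame_source` stands in for whatever yields frames from the camera. The point is only that no processing happens while frames are still arriving.

```python
def record_then_process(frame_source, process_frame):
    """Buffer every frame first, then process the buffer one frame at a time."""
    # Recording phase: only store frames, so capture is never slowed down.
    buffer = list(frame_source)
    # Processing phase: runs after recording has finished.
    return [process_frame(frame) for frame in buffer]
```

The trade-off is memory: the whole recording has to fit in the buffer before any result is available.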

The second problem was that the ball moved very fast: it was visible for maybe 1-3 frames, and the program could not detect it as an object.

We even tried a plain dark background, covered with a cloth, but the ball was so small and fast that the program still could not track it.

This was the point at which my professor suggested the background separation approach described above.

Because OpenCV could not open the Raspberry Pi camera in our setup, the camera object comes from the Picamera library.

There is one caveat: while the ball is being shot, nothing else may move in the frame. The background can be cluttered, but any moving object would also be captured by the absolute difference.

When the ball travels fast, it appears deformed in a given frame, more like a comet than a circle.

None of the existing articles, blogs, or OpenCV object detection functions we tried helped us.

This article provided a good starting point, but it could not track the ball effectively due to its speed and the deformation of its shape, as it was only visible for 1-3 frames.

So we decided to detect the contours directly in the subtracted image. For each contour we compute its area and aspect ratio and check them against thresholds of 100 px for the area and 3 for the aspect ratio, which favours an oval shape over a boxier one. If the contour passes, we draw a circle around it and start tracking the coordinates of its centre.

As you can see in this particular frame, the ball is not a circle but a deformed shape, which made it quite challenging to track. Because we track contours with area and aspect-ratio thresholds instead of looking for a circle, we could still draw a circle around the contour and get its centre coordinates.
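The contour filter can be sketched as a small predicate. This is an illustration under assumptions, not the project's code: the text gives the threshold values 100 and 3 but not the direction of the comparisons, so this sketch assumes an area of at least 100 px and an aspect ratio of at most 3. The function names are hypothetical. In OpenCV the inputs could come from `cv2.contourArea` and `cv2.boundingRect`, and the bounding-box centre here is a stand-in for the centre of the circle drawn around the contour.

```python
def looks_like_ball(area_px, width_px, height_px,
                    min_area_px=100, max_aspect_ratio=3.0):
    """Return True if a blob passes the area and aspect-ratio thresholds.

    Assumed comparison directions: area >= 100 px, aspect ratio <= 3.
    """
    if width_px <= 0 or height_px <= 0:
        return False
    # Long side over short side, so the ratio is orientation independent.
    aspect_ratio = max(width_px, height_px) / min(width_px, height_px)
    return area_px >= min_area_px and aspect_ratio <= max_aspect_ratio


def blob_centre(x, y, width_px, height_px):
    """Centre of a bounding box whose top-left corner is (x, y)."""
    return (x + width_px / 2, y + height_px / 2)
```

Using the long side over the short side means a comet-shaped streak is treated the same whether it runs horizontally or vertically in the frame.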

Now that you understand how we obtain the center coordinates of the ball, the next step is calculating the actual distance from these coordinates. This is where the calibration part comes into play.

We have the (x, y) coordinates of the circle with respect to the OpenCV window.

Here, the values are:

- Green value (mid_value) = 112

- Red value (minimum_value) = 84

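Using the above two images, we determine that:

- 640 pixels = 56 inches, because the 28 inches between the red value (84) and the green value (112) cover half of the 640-pixel frame

- Therefore, each pixel = 56 / 640 = 0.0875 inches.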

With the centre of the ball at (223, 263) px, we convert the first coordinate to inches as follows:

- Distance = $223 \times 0.0875 = 19.5125$ inches.

Now, considering the vertical offset from the origin (the red value), we can determine the total distance from the origin:

- Total Distance = $84 + 19.5125 = 103.5125$ inches.

As shown in the output screenshot above, this calculation provides an accurate distance measurement.
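The calibration arithmetic can be wrapped in one small function. This is a sketch with a hypothetical function name. It assumes that `minimum_value` is the tape reading at pixel 0 and `mid_value` is the reading at the middle of a 640-pixel frame, which is consistent with 640 pixels = 56 inches.

```python
def pixel_to_inches(coord_px, mid_value=112.0, minimum_value=84.0,
                    frame_size_px=640):
    """Convert a pixel coordinate to a distance from the origin, in inches."""
    # Half the frame spans (mid_value - minimum_value) inches, so the whole
    # frame spans twice that: 2 * (112 - 84) = 56 inches over 640 pixels.
    inches_per_pixel = 2 * (mid_value - minimum_value) / frame_size_px
    # Add the tape reading at pixel 0 to get the distance from the origin.
    return minimum_value + coord_px * inches_per_pixel
```

With the values from this README, `pixel_to_inches(223)` evaluates to approximately 103.5125 inches. If the camera is moved, only the two tape readings need to be measured again.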